TL;DR

- AI agents in production cannot be left to run unattended. Software provides monitoring, but people add judgment, context, and improvement.

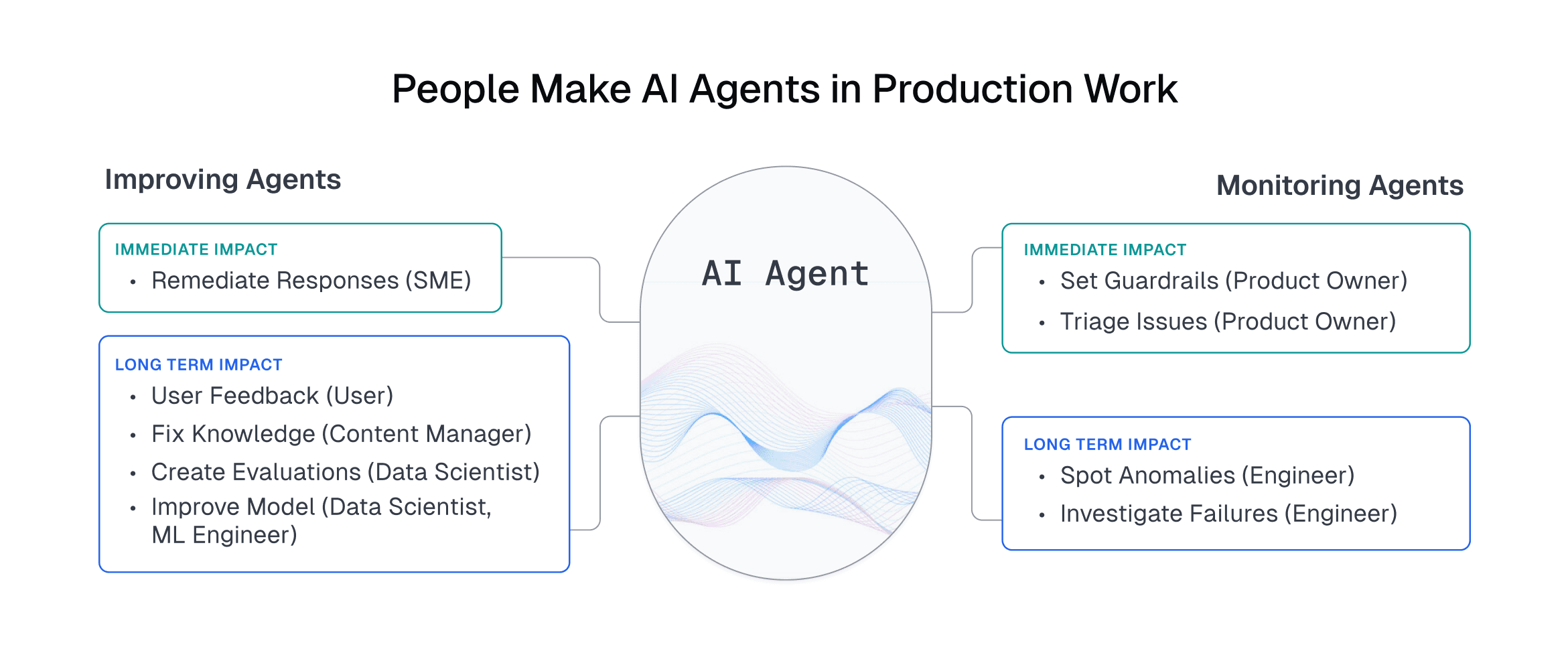

- Human roles fall into two categories: monitoring (guardrails, prioritizing issues, root cause analysis) and improving (remediation, knowledge base fixes, evaluations, model fine-tuning).

- Oversight needs differ by agent type. Some agents require high human involvement (compliance, onboarding), while others need moderate involvement (customer support, employee-facing agents) or light involvement (workflow automation).

Intro

AI agents in production only succeed when people are part of the system. In a live environment, agents are not just generating outputs. They are making decisions, automating actions, and interacting with customers and employees. That power comes with risk. A single mistake can damage trust, increase costs, or create compliance issues.

The solution is not to hold agents back but to manage them with the right level of software and human oversight. Monitoring systems track performance, costs, and guardrails. People add context, provide expert answers, and feed back improvements that make agents stronger over time. With this balance, AI agents in production become safer, more effective, and more adaptable.

Why People Are Essential for AI Agents in Production

AI agents adapt and make decisions, which makes them powerful but also unpredictable. Software tools such as dashboards, logging, and alerts provide visibility, but they are not enough on their own. Models themselves are static artifacts. Frontier LLMs reflect the state of the world at the time they were released. As reality changes, their performance drifts downward, even on tasks they once handled well. A recent MIT study found that this inability to adapt over time is already one of the biggest inhibitors of ROI from AI deployments today. For these reasons, people remain essential:

- Judgment. Humans handle the ambiguity and edge cases that software cannot, such as interpreting regulations, resolving customer escalations, or approving sensitive actions.

- Context. People bring domain knowledge and situational awareness that enable better decisions than automation alone.

- Improvement. Human feedback corrects mistakes and refines behavior, creating the loop that allows agents to get better over time.

Together, software and people form a reliable operating system for AI agents. Software provides visibility and scale, while people ensure agents remain aligned with business, ethical, and regulatory standards.

How People Support AI Agents in Production: Roles and Responsibilities

Human involvement in AI agents can be grouped into two broad categories: monitoring and improving. Within each, distinct roles are needed to keep agents reliable and aligned with business needs. Monitoring is typically led by technical employees such as engineers and product owners, while improving often depends on nontechnical teammates like SMEs and content managers who can directly correct and control agent behavior.

Monitoring AI Agents

Human monitoring roles fall into two groups: those that provide operational monitoring in production and those that perform root cause analysis when things go wrong.

Operational Monitoring

Operational monitoring focuses on keeping agents safe and aligned in day-to-day production.

- Defining adaptive guardrails (Product Owner). Product owners set the rules that determine what an agent can or cannot do, adjusting them as policies, regulations, or customer needs evolve. This is critical because acceptable behavior shifts over time, and static thresholds are not enough.

- Acting on prioritized issues (Product Owner). While software can detect and rank issues by severity, the product owner decides which ones require immediate action, escalation, or observation. This matters because only humans can weigh technical urgency against customer impact and business risk.
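
To make the split between software and product owner concrete, here is a minimal sketch in Python. The `Guardrail` fields, the refund limit, and the `Issue` severity scale are all hypothetical, chosen only for illustration. The software checks actions and ranks issues; the product owner changes the policy and decides what to do with the ranked list.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Guardrail:
    # Hypothetical policy fields; a real policy would be richer.
    max_refund_usd: float
    blocked_topics: frozenset


def check_action(guardrail, action):
    """Return the guardrail violations for a proposed agent action."""
    violations = []
    if action.get("refund_usd", 0) > guardrail.max_refund_usd:
        violations.append("refund_over_limit")
    if action.get("topic") in guardrail.blocked_topics:
        violations.append("blocked_topic")
    return violations


@dataclass
class Issue:
    name: str
    severity: int            # 1 (low) to 5 (high), assigned by software
    customers_affected: int


def rank_issues(issues):
    """Software step: order by severity, then by customer impact."""
    return sorted(
        issues,
        key=lambda i: (i.severity, i.customers_affected),
        reverse=True,
    )


policy = Guardrail(max_refund_usd=100.0, blocked_topics=frozenset({"legal_advice"}))
action = {"refund_usd": 80.0, "topic": "billing"}
print(check_action(policy, action))  # []

# The product owner tightens the limit after a policy change.
policy = replace(policy, max_refund_usd=50.0)
print(check_action(policy, action))  # ['refund_over_limit']
```

The ranking only orders the queue. Whether the top issue needs immediate action, escalation, or observation stays a human call.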

Root Cause Analysis

Root cause analysis focuses on understanding failures and preventing them from recurring.

- Interpreting anomalies (Engineer). Automated systems can flag spikes in cost, drift in behavior, or unexpected responses, but engineers interpret whether those anomalies are harmless or business-critical. This matters because context determines whether an alert is noise or a real incident.

- Investigating failures (Engineer). When an agent gets something wrong, engineers trace the chain of reasoning, inputs, and workflow triggers to understand why it failed. This is important because root causes are often tied to subtle business logic or data gaps that monitoring software cannot explain.
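
As an illustration of the automated half of this work, the sketch below flags cost spikes with a simple trailing-window z-score. The window size, the threshold, and the function name `flag_cost_anomalies` are assumptions for the example, not a recommended configuration. Deciding whether a flagged day is noise or a real incident is still the engineer's job.

```python
from statistics import mean, stdev


def flag_cost_anomalies(daily_costs, threshold=3.0, window=7):
    """Return indexes of days whose cost deviates from the trailing
    window by more than `threshold` standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2")
    flagged = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: any change at all is unusual.
            is_anomaly = daily_costs[i] != mu
        else:
            is_anomaly = abs(daily_costs[i] - mu) / sigma > threshold
        if is_anomaly:
            flagged.append(i)
    return flagged


costs = [10, 11, 10, 12, 11, 10, 11, 40, 11]
print(flag_cost_anomalies(costs))  # [7]
```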

Improving AI Agents

Improvement is multi-faceted. Some actions strengthen agents gradually, while others deliver faster impact.

Immediate Improvement

Immediate improvement focuses on fixing errors right away.

- Remediation with expert input (Subject-Matter Expert). When agents cannot handle a query, SMEs provide the correct answer. Unlike approaches that depend on retraining or fine-tuning, remediation updates the system instantly so future occurrences are handled correctly. It delivers fast reliability gains while also creating high-quality examples that can later be used for training.
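
One way to picture remediation is as a store of expert-approved answers that the system consults before calling the agent. The sketch below is a deliberately simple version: `RemediationStore` and `answer` are hypothetical names, matching is exact after normalization, and a real system would likely need fuzzier matching. It shows both effects described above: the fix applies immediately, and the stored answers can be exported later as training examples.

```python
class RemediationStore:
    """In-memory map from a normalized question to an SME-approved answer."""

    def __init__(self):
        self._answers = {}

    @staticmethod
    def _normalize(question):
        # Lowercase and collapse whitespace so trivial variants match.
        return " ".join(question.lower().split())

    def add(self, question, answer, expert):
        self._answers[self._normalize(question)] = {
            "answer": answer,
            "expert": expert,
        }

    def lookup(self, question):
        entry = self._answers.get(self._normalize(question))
        return entry["answer"] if entry else None

    def export_examples(self):
        """Expert answers double as examples for later training."""
        return [
            {"prompt": q, "completion": e["answer"]}
            for q, e in self._answers.items()
        ]


def answer(question, store, agent_fn):
    """Prefer an expert-approved answer; otherwise fall back to the agent."""
    approved = store.lookup(question)
    return approved if approved is not None else agent_fn(question)
```

In use, an SME calls `store.add(...)` once, and every later occurrence of that question gets the approved answer without any retraining.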

Longer-Term Improvements

Longer-term improvement focuses on continuous refinement and sustained accuracy.

- User feedback (Users). End users provide ratings, corrections, and preferences that help refine responses. This is important because user feedback reflects real-world expectations that no training dataset can fully anticipate.

- Fixing knowledge base issues (Content Manager). Software can surface knowledge gaps or outdated content, but content managers must update and correct the underlying information. This is essential because accuracy depends on human expertise validating what is right.

- Creating evaluations (Data Scientist). Data scientists design structured test cases and benchmarks that measure whether agents are learning and improving. This ensures changes can be validated before rollout and monitored after deployment.

- Improving the model (Data Scientist, ML Engineer). Prompt engineering, retraining, and fine-tuning adapt the model to new requirements and data. Since frontier LLMs are static artifacts, they require continuous adjustment to remain effective. Prompt engineering enables faster adjustments, while retraining and fine-tuning provide deeper, long-term gains. Together these methods strengthen the foundation for all agent behavior.
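
The evaluation step can be sketched as a small harness that runs structured test cases against an agent and gates a release on the pass rate. Everything here is an assumption made for illustration: the `run_eval` name, the substring-based check, and the 0.9 threshold. Real evaluations usually need richer scoring than a substring match.

```python
def run_eval(agent_fn, cases, min_pass_rate=0.9):
    """Run each test case through the agent and summarize the results.

    A case passes when the expected phrase appears in the agent's output
    (case-insensitive). This is a crude check used only for the sketch.
    """
    if not cases:
        raise ValueError("at least one test case is required")
    results = []
    for case in cases:
        output = agent_fn(case["input"])
        passed = case["expected"].lower() in output.lower()
        results.append({"input": case["input"], "passed": passed})
    pass_rate = sum(r["passed"] for r in results) / len(results)
    return {
        "pass_rate": pass_rate,
        "release_ok": pass_rate >= min_pass_rate,
        "results": results,
    }
```

Running the same cases before rollout and again after deployment gives a consistent baseline for spotting regressions.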

Oversight Varies by Agent Type

The balance of monitoring and improving roles is not the same for every agent. Different types of AI agents carry different risks, so the intensity of oversight must match what is at stake.

Broadly, agent types fall into three oversight levels:

High Oversight → agents that require both close monitoring in production and active improvement, since mistakes carry significant business, compliance, or reputational risk.

Moderate Oversight → agents that benefit from structured monitoring and selective improvement, with humans refining quality and handling exceptions.

Light Oversight → agents that rely primarily on operational monitoring, with humans stepping in only for exceptions or anomalies.

| Oversight Level | Agent Type | Human Role |
|---|---|---|
| High | Compliance | Compliance officers sign off on actions to meet regulatory and audit standards. |
| High | Customer onboarding | Sales leaders or product owners validate offers, guidance, and activation flows. |
| Moderate | Customer support | Managers handle escalations for complex or sensitive customer issues. |
| Moderate | Employee assist | SMEs provide feedback to refine accuracy and usefulness. |
| Moderate | Employee support | HR and compliance leads review sensitive HR or policy-related interactions. |
| Light | Workflow automation | Engineers and ops analysts monitor exceptions to prevent cascading errors. |

Key takeaway: Different agents need different mixes of monitoring and improving. Engineering leaders should align oversight intensity with agent type, risk profile, and environment instead of treating all agents the same.
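
The oversight levels above can be encoded so that routing decisions are explicit rather than tribal knowledge. In this sketch the agent-type mapping comes from the table, while the review rule in `requires_human_review` is one possible interpretation of the three levels, and defaulting unknown agent types to high oversight is an assumption.

```python
from enum import Enum


class Oversight(Enum):
    HIGH = "high"
    MODERATE = "moderate"
    LIGHT = "light"


# Mapping taken from the oversight table; keys are illustrative identifiers.
OVERSIGHT_BY_AGENT_TYPE = {
    "compliance": Oversight.HIGH,
    "customer_onboarding": Oversight.HIGH,
    "customer_support": Oversight.MODERATE,
    "employee_assist": Oversight.MODERATE,
    "employee_support": Oversight.MODERATE,
    "workflow_automation": Oversight.LIGHT,
}


def requires_human_review(agent_type, is_exception=False, is_sensitive=False):
    """Decide whether a human should review an agent action.

    Assumed rule: high oversight always requires review, moderate requires
    it for sensitive or exceptional cases, light only for exceptions.
    """
    # Unknown agent types fall back to the strictest level (assumption).
    level = OVERSIGHT_BY_AGENT_TYPE.get(agent_type, Oversight.HIGH)
    if level is Oversight.HIGH:
        return True
    if level is Oversight.MODERATE:
        return is_sensitive or is_exception
    return is_exception


print(requires_human_review("compliance"))                             # True
print(requires_human_review("customer_support", is_sensitive=True))    # True
print(requires_human_review("workflow_automation", is_sensitive=True)) # False
```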

Practical Steps for Engineering Leaders

Engineering leaders can design oversight into their systems from the start:

- Define oversight roles. Assign product owners, analysts, engineers, SMEs, content managers, ML engineers, and data scientists to specific responsibilities.

- Deploy monitoring systems. Use dashboards, audit logs, and alerts to surface issues for the right people to review.

- Establish escalation paths. Define when and how humans intervene.

- Integrate structured feedback. Capture and reuse human corrections systematically.

- Scale responsibly. Automate routine checks but keep humans in control of sensitive areas.
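
Capturing human corrections "systematically" mostly means giving them a fixed shape. Below is a minimal sketch of such a record; the `Correction` fields and the JSON Lines output are assumptions, not a prescribed schema. Once corrections share a structure, they can be logged, counted per agent, and reused for remediation or evaluation.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass


@dataclass
class Correction:
    # Hypothetical fields for one human correction.
    agent: str
    question: str
    agent_answer: str
    corrected_answer: str
    reviewer_role: str


def to_jsonl(corrections):
    """Serialize corrections as one JSON object per line."""
    return "\n".join(json.dumps(asdict(c), sort_keys=True) for c in corrections)


def corrections_per_agent(corrections):
    """Count corrections per agent to show where humans intervene most."""
    return Counter(c.agent for c in corrections)
```

A rising correction count for one agent is a simple signal that it may need a higher oversight level or a knowledge base fix.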

AI agents in production succeed only when people are built into the loop. The challenge for engineering leaders is not choosing between automation and oversight. It is designing the right balance for each agent type so systems remain safe, effective, and adaptable. Crucially, AI improvements should not fall solely on AI engineers. The most effective long-term deployments establish structured processes where technical employees monitor and troubleshoot, while nontechnical teammates such as subject-matter experts and content managers provide domain expertise and corrections that keep agents aligned with business needs.

The next step is to map your current AI agents against the three oversight levels and assign ownership. This simple exercise clarifies where human roles are essential and prevents gaps that can erode trust once agents are live.