Surprise! The Office-Home dataset (cited by 600+ papers) contains hundreds of label errors and other data issues, found using Cleanlab Studio, an automated AI-powered solution for finding and fixing data issues. This CSA (Cleanlab Studio Audit) is our way of informing the community about issues in popular datasets.

Image Classification with the Office-Home Dataset

Here we consider the Office-Home dataset, which has been cited by over 600 research papers in recent years. It contains labeled images of 65 categories of everyday objects found in homes and offices, such as lamps, computers, and alarm clocks. We discovered hundreds of issues in this well-known computer vision dataset simply by running it through Cleanlab Studio.

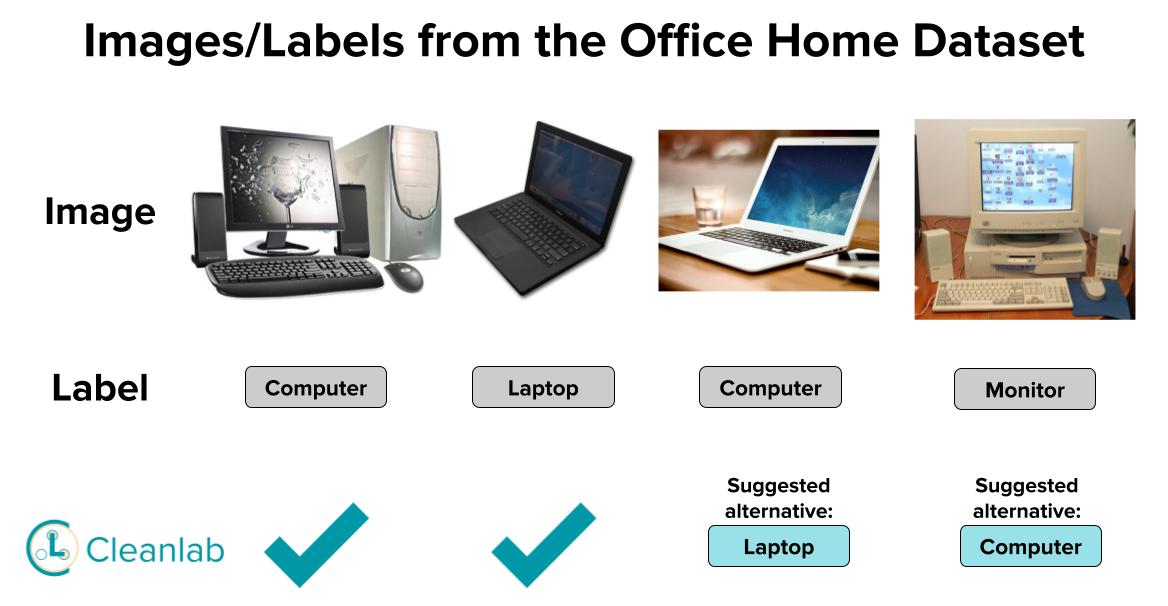

Above, the first two images are correctly labeled examples (randomly chosen from the dataset), while the last two are images that Cleanlab Studio automatically identified as mislabeled (it also suggests a more appropriate label for each).

According to its authors, the Office-Home dataset was “…collected using a python web-crawler…then filtered to ensure that the desired object was in the picture”. This common curation approach often produces incorrect image-label pairs. Let’s look at more of the problems Cleanlab Studio detected in this dataset.

Mislabeled Examples

Cleanlab Studio found hundreds of incorrectly labeled examples. Here we show two easily confused pairs of classes: computers vs. laptops and chairs vs. couches. As above, the first two images in each group are correctly labeled examples (randomly chosen from the dataset), while the last two are images that Cleanlab Studio automatically identified as mislabeled. The boundary between each of these class pairs clearly needs a sharper definition!
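This post does not describe how Cleanlab Studio detects label errors internally. As a generic illustration of the idea only, a common heuristic flags an example as possibly mislabeled when a model's out-of-sample predicted probability for its given label (its "self-confidence") is low and a different class is the most likely one. The sketch below assumes you already have out-of-sample (for example, cross-validated) predicted probabilities; the function name `flag_possible_mislabels`, the 0.5 threshold, and the toy label ids are all hypothetical.

```python
from typing import List, Tuple


def flag_possible_mislabels(
    labels: List[int],
    pred_probs: List[List[float]],
    threshold: float = 0.5,  # hypothetical cutoff, chosen for illustration
) -> List[Tuple[int, int, float]]:
    """Return (index, suggested_label, self_confidence) for suspicious examples.

    self_confidence is the predicted probability of the example's given label.
    An example is flagged when that probability is below `threshold` and a
    different class has the highest predicted probability.
    """
    flagged = []
    for i, (given, probs) in enumerate(zip(labels, pred_probs)):
        self_confidence = probs[given]
        suggested = max(range(len(probs)), key=lambda k: probs[k])
        if self_confidence < threshold and suggested != given:
            flagged.append((i, suggested, self_confidence))
    # Least confident first, so the most likely errors get reviewed first
    flagged.sort(key=lambda t: t[2])
    return flagged


# Toy label ids (hypothetical): 0=computer, 1=laptop, 2=chair, 3=couch
labels = [0, 1, 2, 3]
pred_probs = [
    [0.90, 0.05, 0.03, 0.02],  # labeled computer, model agrees
    [0.80, 0.15, 0.03, 0.02],  # labeled laptop, model says computer
    [0.05, 0.05, 0.70, 0.20],  # labeled chair, model agrees
    [0.02, 0.03, 0.60, 0.35],  # labeled couch, model says chair
]
print(flag_possible_mislabels(labels, pred_probs))  # [(1, 0, 0.15), (3, 2, 0.35)]
```

The predicted probabilities must come from a model that did not train on the example being scored; otherwise the model tends to memorize the given label and hide the error.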

Ambiguous Examples

Cleanlab Studio also found many ambiguous images for which multiple labels are appropriate, even though each image has only one label in the original dataset. The examples here could reasonably be labeled with either of the depicted labels, but the original dataset gives each of them only the blue label.
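Again, this is not Cleanlab Studio's documented method, just a generic illustration: one simple way to surface candidate ambiguous images is to look for examples whose two most likely classes receive nearly equal predicted probability. The function name `find_ambiguous` and the 0.1 margin are hypothetical choices for this sketch.

```python
from typing import List, Tuple


def find_ambiguous(
    pred_probs: List[List[float]],
    max_margin: float = 0.1,  # hypothetical cutoff, chosen for illustration
) -> List[Tuple[int, int, int]]:
    """Return (index, top_class, runner_up_class) for examples whose two
    highest predicted probabilities differ by at most `max_margin`."""
    ambiguous = []
    for i, probs in enumerate(pred_probs):
        # Rank class ids from most to least likely
        ranked = sorted(range(len(probs)), key=lambda k: probs[k], reverse=True)
        top, runner_up = ranked[0], ranked[1]
        if probs[top] - probs[runner_up] <= max_margin:
            ambiguous.append((i, top, runner_up))
    return ambiguous


pred_probs = [
    [0.90, 0.05, 0.03, 0.02],  # clearly class 0
    [0.46, 0.44, 0.05, 0.05],  # torn between classes 0 and 1
    [0.05, 0.05, 0.48, 0.42],  # torn between classes 2 and 3
]
print(find_ambiguous(pred_probs))  # [(1, 0, 1), (2, 2, 3)]
```

A small margin alone cannot distinguish a genuinely multi-label image from a model that is simply unsure, so flagged images still need human review.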

Outliers

Sometimes images aren’t just mislabeled; they should be removed from the dataset entirely because they don’t belong to any of the classes of interest. Cleanlab Studio automatically detected many such outliers in this dataset: images that cannot be appropriately labeled as any of the classes.
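How Cleanlab Studio scores outliers isn't described here. As a generic sketch only, assuming each image has already been mapped to a feature vector (embedding) by some pretrained model, a common approach scores each image by its average distance to its k nearest neighbors: images far from everything else are outlier candidates. The function name `knn_outlier_scores`, k=2, and the toy 2-D embeddings are hypothetical.

```python
import math
from typing import List


def knn_outlier_scores(embeddings: List[List[float]], k: int = 2) -> List[float]:
    """Score each example by its mean Euclidean distance to its k nearest
    neighbors (excluding itself). Higher scores suggest likely outliers."""
    scores = []
    for i, a in enumerate(embeddings):
        # Distances from example i to every other example, nearest first
        dists = sorted(
            math.dist(a, b) for j, b in enumerate(embeddings) if j != i
        )
        nearest = dists[:k]
        scores.append(sum(nearest) / len(nearest))
    return scores


embeddings = [
    [0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1],  # a tight cluster
    [5.0, 5.0],                                       # far from everything
]
scores = knn_outlier_scores(embeddings, k=2)
most_suspicious = max(range(len(scores)), key=lambda i: scores[i])
print(most_suspicious)  # 4
```

This brute-force version compares every pair of examples, which is fine for illustration; for a dataset of thousands of images, an approximate nearest-neighbor index would be the usual choice.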

Fix data with Cleanlab Studio

Data errors like these, detected by the AI in Cleanlab Studio, can undermine your modeling and analytics efforts. To train the best models and draw the most accurate conclusions, it’s important to know about such errors in your data and correct them.

To find & fix such issues in almost any dataset (text, image, table/CSV/Excel, etc.), just run it through Cleanlab Studio. Try this no-code Data-Centric AI tool for free!

Want to get featured?

If you’ve found interesting issues in any dataset and want to share them with the community, your findings could be featured in a future CSA! Just fill out this form.