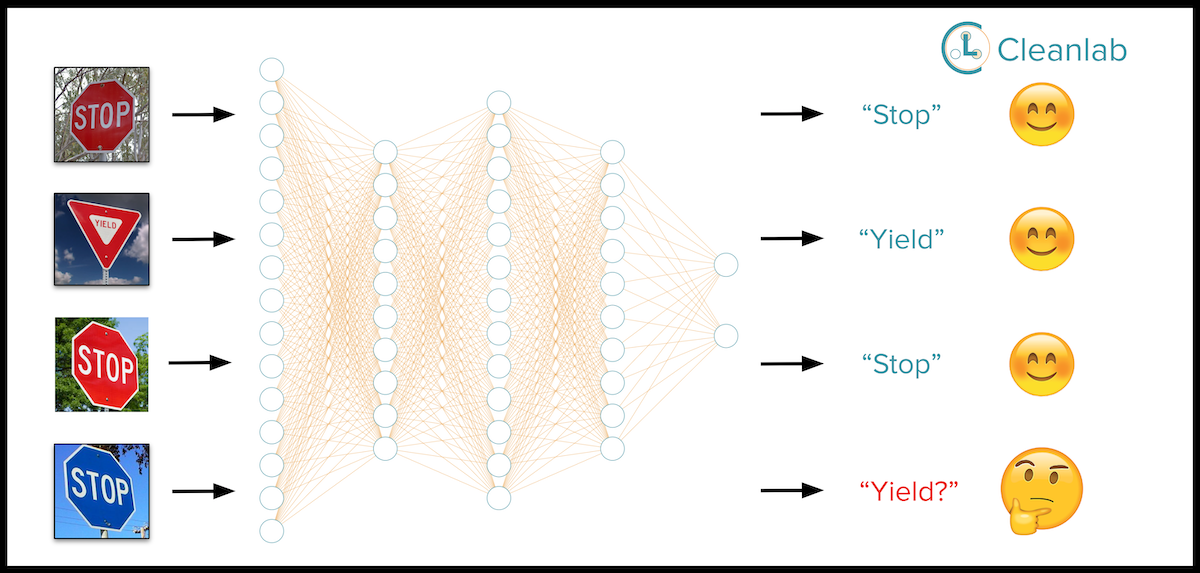

Anyone who has trained ML models on real-world datasets (not the perfectly curated data we work with in school) has probably dealt with outliers. The problem with some Out-of-Distribution (OOD) detection algorithms is that they make a big assumption: that a model is equally confident in all classes. Most of the time, that assumption is false. For example, a model trained on ImageNet is typically overconfident (predicted probabilities close to 1) for bananas but underconfident (predicted probabilities close to 0) for the ten different but very similar-looking lizard classes in the dataset.

In this article, we’ll show you a novel and simple adjustment to model predicted probabilities that can improve OOD detection with classifier models trained on real-world data. Our unique approach is rooted in theory and runs in just a couple of lines of code.

Background

Identifying outliers in test data that do not stem from the distribution of the training data is critical for deploying reliable machine learning models. While many special (e.g., generative) models have been proposed for this task of Out-of-Distribution (a.k.a. anomaly/novelty) detection [1, 2, 3], these are often specific to particular data types and nontrivial to implement. Instead, simpler methods for OOD detection that use an already-trained classifier on data with class labels have become quite popular [4, 5]. Methods like KNN distance [6, 7] and Mahalanobis distance [3, 4] leverage a trained neural network’s intermediate feature representations to identify OOD examples.

An even simpler approach is to use only the predicted class probabilities output by the trained classifier and quantify their uncertainty as a measure of outlyingness. Two particularly popular OOD methods are Maximum Softmax Probability (MSP) [5] and Entropy [4, 6]. Compared to most other methods, MSP and Entropy need less information from the model and require less computation to identify outliers. Here we introduce a simple adjustment that makes these baseline methods more effective.

Baseline prediction-based OOD detection methods

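Consider an image $x$ and a classifier model that outputs $\hat{p} = [\hat{p}_1, \dots, \hat{p}_K]$, where $\hat{p}_k$ is the model’s predicted probability that this image belongs to class $k \in \{1, \dots, K\}$. Based on $\hat{p}$, one can compute two simple OOD scores for $x$.

Maximum Softmax Probability (MSP) quantifies how confident the model is in the most likely class it predicts:

$$\text{MSP}(x) = 1 - \max_{k} \hat{p}_k$$

Entropy quantifies how evenly spread the model’s probabilistic predictions are amongst all classes:

$$\text{Entropy}(x) = -\sum_{k=1}^{K} \hat{p}_k \log \hat{p}_k$$

Written this way, larger values of either score indicate a more atypical image.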

These scores have been shown to work surprisingly well for detecting OOD images [5], despite the fact that they do not explicitly estimate epistemic uncertainty [4].
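As a rough illustration, both baseline scores can be computed from a matrix of predicted probabilities in a few lines of NumPy. This is a minimal sketch rather than the exact implementation used in the benchmarks: the function names `msp_score` and `entropy_score` are hypothetical, the entropy is left unnormalized, and both scores are oriented so that larger values mean "more atypical".

```python
import numpy as np

def msp_score(pred_probs: np.ndarray) -> np.ndarray:
    """One minus the largest predicted probability in each row."""
    return 1.0 - pred_probs.max(axis=1)

def entropy_score(pred_probs: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Shannon entropy (natural log) of each row of predicted probabilities."""
    clipped = np.clip(pred_probs, eps, 1.0)  # avoid log(0)
    return -(pred_probs * np.log(clipped)).sum(axis=1)

# Toy predicted probabilities for two images over three classes (made-up values)
pred_probs = np.array([
    [0.98, 0.01, 0.01],  # confident prediction
    [0.34, 0.33, 0.33],  # nearly uniform prediction
])

print(msp_score(pred_probs))      # the second row receives the larger score
print(entropy_score(pred_probs))  # the second row is close to log(3)
```

Both scores flag the nearly uniform prediction as the more atypical of the two, which is the behavior these baselines rely on.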

Simple adjustment to Improve Baseline Methods

The model predicted probabilities are subject to estimation error. Trained models can have a biased propensity to predict specific classes over others, particularly when the classes in the original dataset are imbalanced. To account for these issues, we adjust the predicted probabilities using class confident thresholds [1, 4], forming new OOD scores based on the MSP/Entropy of the resulting adjusted predictions.

Calculating Class Confident Thresholds

Letting $y_i$ denote the class label for the i-th example in our training data and $\hat{p}_{i,k}$ denote the probability that this example belongs to class $k$ according to our model, we compute a confident threshold vector $t$ whose k-th element is defined as:

$$t_k = \frac{1}{|\{i : y_i = k\}|} \sum_{i \,:\, y_i = k} \hat{p}_{i,k}$$

The confident thresholds are the average probability of a class predicted by our model amongst the examples labeled as that class. This vector thus represents our model’s propensity to predict a particular class for examples labeled as that class and has been proven to be a natural threshold for determining the reliability of probabilistic predictions [1, 4].
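The per-class averaging described above can be sketched in NumPy as follows. The function name `confident_thresholds` and the toy arrays are assumptions made for illustration, and the sketch assumes every class has at least one labeled training example.

```python
import numpy as np

def confident_thresholds(pred_probs: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """For each class k, average the predicted probability of class k
    over the training examples that are labeled k."""
    num_classes = pred_probs.shape[1]
    return np.array(
        [pred_probs[labels == k, k].mean() for k in range(num_classes)]
    )

# Toy training predictions for a 2-class problem (made-up values)
train_pred_probs = np.array([
    [0.90, 0.10],
    [0.70, 0.30],
    [0.45, 0.55],
    [0.35, 0.65],
])
train_labels = np.array([0, 0, 1, 1])

thresholds = confident_thresholds(train_pred_probs, train_labels)
print(thresholds)  # class 0 averages (0.90 + 0.70) / 2, class 1 averages (0.55 + 0.65) / 2
```

In this toy example the model is less confident on class 1 than on class 0, so class 1 ends up with the lower threshold.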

Adjusting Model Predicted Probabilities for Noise

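For any new example $x$, its predicted probability vector $\hat{p}$ over the $K$ classes is subsequently adjusted by the class confident threshold vector $t$ as follows:

$$\tilde{p}_k = \frac{\hat{p}_k - t_k + t_{\max}}{Z}$$

Here the scalar $t_{\max} = \max_k t_k$ is the largest value in the confident threshold vector (to ensure nonnegative probabilities) and the scalar $Z$ is a normalizing constant (to ensure the probabilities sum to one over the classes):

$$Z = \sum_{k=1}^{K} \left( \hat{p}_k - t_k + t_{\max} \right)$$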

The confident thresholds vector is always calculated from the predicted probabilities and labels of the training data, but any predicted probabilities output by the model (e.g., for additional test data) can be adjusted using these thresholds.
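A minimal NumPy sketch of this adjustment is shown below: subtract each class's threshold, shift by the largest threshold so that nothing goes negative, then renormalize each row. The function name `adjust_pred_probs` and the numeric values are hypothetical, chosen only to make the arithmetic easy to follow.

```python
import numpy as np

def adjust_pred_probs(pred_probs: np.ndarray, thresholds: np.ndarray) -> np.ndarray:
    """Subtract per-class thresholds, shift by the largest threshold,
    and renormalize each row so it sums to one."""
    shifted = pred_probs - thresholds + thresholds.max()
    return shifted / shifted.sum(axis=1, keepdims=True)

# Hypothetical thresholds estimated on training data (class 1 is the less confident class)
thresholds = np.array([0.8, 0.6])

# Predicted probabilities for two new examples (made-up values)
test_pred_probs = np.array([
    [0.8, 0.2],
    [0.5, 0.5],
])

adjusted = adjust_pred_probs(test_pred_probs, thresholds)
print(adjusted)
```

For the second example, a 50/50 prediction becomes tilted toward class 1 after adjustment, because a probability of 0.5 is relatively high for a class whose typical confidence is only 0.6.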

Computing adjusted OOD scores

Improved OOD scores for an example $x$ are obtained simply by plugging the adjusted predicted probabilities $\tilde{p}$ in place of the original predicted probabilities $\hat{p}$ in either of the respective MSP/Entropy formulas. This adjusted OOD detection procedure thus remains extremely simple and is easy to implement in practical deployments.

Evaluating OOD Detection Performance

Following standard OOD benchmarking procedures, existing image classification datasets are grouped in pairs: one dataset is used to train a Swin Transformer [8] classifier and is considered in-distribution training data, while examples from the second dataset are mixed in with the testing data of the first dataset (at a 50–50 ratio) as out-of-distribution images. Each OOD scoring method is applied to all images in the test set (without knowledge of their source or their labels) to produce a ranking of these images, which we evaluate using the AUROC for how well these scores detect OOD examples.
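To make the evaluation metric concrete, here is a small sketch of AUROC computed directly from its pairwise definition: the probability that a randomly chosen OOD image receives a higher score than a randomly chosen in-distribution image, with ties counted as one half. The function name `auroc` and the toy scores are assumptions for illustration, and the sketch assumes higher scores mean "more likely OOD"; scores that follow the opposite convention would need to be negated first. In practice a library routine such as scikit-learn's `roc_auc_score` would typically be used instead.

```python
import numpy as np

def auroc(ood_scores: np.ndarray, is_ood: np.ndarray) -> float:
    """Fraction of (OOD, in-distribution) pairs in which the OOD example
    has the higher score; ties count as half a correct pair."""
    is_ood = np.asarray(is_ood, dtype=bool)
    ood = ood_scores[is_ood]
    ind = ood_scores[~is_ood]
    greater = (ood[:, None] > ind[None, :]).mean()
    ties = (ood[:, None] == ind[None, :]).mean()
    return float(greater + 0.5 * ties)

# Toy test set mixed 50-50: three OOD images and three in-distribution images
scores = np.array([0.9, 0.8, 0.3, 0.6, 0.2, 0.1])
is_ood = np.array([True, True, True, False, False, False])

print(auroc(scores, is_ood))  # 8 of the 9 pairs are ranked correctly
```

The pairwise form costs memory proportional to the number of OOD times in-distribution examples, so it is meant for explanation rather than for large test sets.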

We consider two different OOD detection problems based on popular image classification datasets: CIFAR-10 [6] vs. CIFAR-100 [6] and MNIST [5] vs. FASHION-MNIST [7]. Our first benchmark relies on the original versions of these datasets, where classes naturally occur in equal proportions.

We also run a second benchmark in which we introduce class imbalance in each training set. Here we create new imbalanced training sets for CIFAR-10, MNIST, and FASHION-MNIST, where in each training set: 6 classes each contain 2% of the total examples and 4 classes each contain 22% of the examples. We also create an imbalanced training set for CIFAR-100 in which 90 classes each have 0.63% of the examples, and 10 classes each have 4.25% of the examples. This allows us to evaluate how well our OOD scores perform in settings where the classes occur in unequal proportions in the labeled training data, as is often the case in real-world applications.
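One way such an imbalanced training set could be built is by subsampling a balanced dataset to the target class proportions. The sketch below is only an assumption about how this might be done, not the procedure used for the benchmarks: the helper `imbalanced_indices`, the choice of which six classes become the minority classes, the total size of 2,000, and the random seed are all hypothetical.

```python
import numpy as np

def imbalanced_indices(labels, class_fractions, total_size, seed=0):
    """Sample example indices so that class k makes up roughly
    class_fractions[k] of a subset containing total_size examples."""
    rng = np.random.default_rng(seed)
    chosen = []
    for k, fraction in enumerate(class_fractions):
        class_idx = np.flatnonzero(labels == k)
        n_k = int(round(fraction * total_size))
        # Sampling without replacement raises an error if the class is too small
        chosen.append(rng.choice(class_idx, size=n_k, replace=False))
    return np.concatenate(chosen)

# Balanced toy labels: 10 classes with 500 examples each
labels = np.repeat(np.arange(10), 500)

# 6 classes at 2% each and 4 classes at 22% each, as in the 10-class setup above
fractions = np.array([0.02] * 6 + [0.22] * 4)

subset = imbalanced_indices(labels, fractions, total_size=2000)
counts = np.bincount(labels[subset], minlength=10)
print(counts)
```

The resulting index array can then be used to select the corresponding images and labels before training the classifier.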

Benchmark Results

Tables 1 and 2 list the AUROC performance achieved by both adjusted and original (non-adjusted) OOD scoring methods for each benchmark setting. For many in-distribution / OOD dataset pairs, there is a clear improvement that results from our proposed adjustment.

Table 1: Performance (AUROC) of Out-of-Distribution detection with original (balanced) datasets (higher is better).

Table 2: Performance (AUROC) of Out-of-Distribution detection with imbalanced datasets (higher is better).

With only a minor adjustment to the predicted probabilities output by a trained classifier, the performance of both Entropy and MSP-based out-of-distribution detection scores is increased.

Reproduce these benchmarks yourself here: ood-detection-benchmarks.

Try improved OOD Detection yourself in 3 lines of code

Implementing this adjusted OOD scoring can easily be done in practice with the cleanlab library. If you already have data and a trained model you can do:

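```python
from cleanlab.outlier import OutOfDistribution

# train_pred_probs, train_labels, test_pred_probs: your model's outputs and labels
ood = OutOfDistribution()

# Fit on the training set's predicted probabilities and labels
ood.fit(pred_probs=train_pred_probs, labels=train_labels)

# Score new examples from their predicted probabilities
ood_scores = ood.score(pred_probs=test_pred_probs)
```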

Scores in cleanlab are normalized to lie in [0,1] with values near 0 indicating examples more likely to be out-of-distribution.

Resources to learn more and run OOD detection on your own data

- 5 min Tutorial to run OOD detection on your own data

- Example of advanced OOD detection code

- Code to reproduce the benchmarks presented here

- cleanlab open-source library

- Cleanlab Studio: no-code data improvement

Join our community of scientists/engineers to ask questions and help build the future of open-source Data-Centric AI: Cleanlab Slack Community

References


1. Yang, J., Zhou, K., Li, Y., and Liu, Z. Generalized out-of-distribution detection: A survey. arXiv:2110.11334. 2021.

2. Ran, X., Xu, M., Mei, L., Xu, Q., and Liu, Q. Detecting out-of-distribution samples via variational auto-encoder with reliable uncertainty estimation. Neural Networks. 2022.

3. Cao, S., and Zhang, Z. Deep Hybrid Models for Out-of-Distribution Detection. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022.

4. Kirsch, A., Mukhoti, J., van Amersfoort, J., Torr, P. H. S., and Gal, Y. On pitfalls in OOD detection: Entropy considered harmful. ICML Workshop on Uncertainty and Robustness in Deep Learning. 2021.

5. Hendrycks, D., and Gimpel, K. A baseline for detecting misclassified and out-of-distribution examples in neural networks. International Conference on Learning Representations. 2017.

6. Kuan, J., and Mueller, J. Back to the Basics: Revisiting Out-of-Distribution Detection Baselines. ICML Workshop on Principles of Distribution Shift. 2022.

7. Angiulli, F., and Pizzuti, C. Fast outlier detection in high dimensional spaces. European Conference on Principles of Data Mining and Knowledge Discovery. 2002.

8. Lee, K., Lee, K., Lee, H., and Shin, J. A simple unified framework for detecting out-of-distribution samples and adversarial attacks. Advances in Neural Information Processing Systems, 31. 2018.