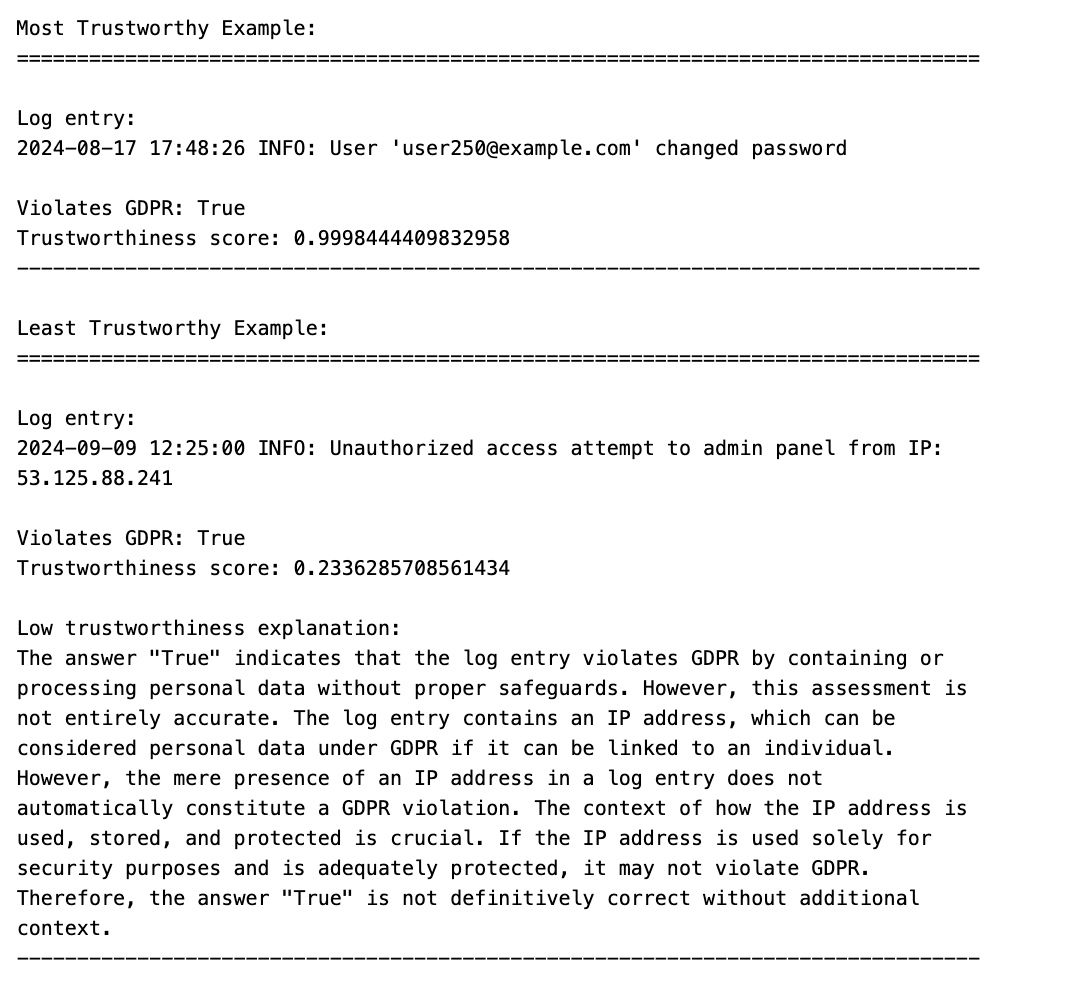

Enterprises struggle to ensure GDPR compliance across their vast data landscapes. This article demonstrates how Cleanlab’s Trustworthy Language Model (TLM) can automatically detect potential GDPR violations in log files with high accuracy, while also identifying which LLM analyses are untrustworthy - a critical feature for ensuring reliable compliance checks at scale. For example, here are the most/least trustworthy LLM assessments across a dataset of (simulated) log files:

Compliance Challenges for Enterprises

In today’s data-driven world, ensuring compliance standards are upheld (GDPR, HIPAA, etc.) is a critical challenge for enterprises. Companies collect, process, and store vast amounts of personal information across numerous systems, cloud services, and third-party applications. This proliferation of data, while valuable for business insights and personalized services, creates a complex landscape where ensuring the privacy and security of personal information becomes increasingly difficult.

The GDPR Problem

GDPR in particular, with its stringent requirements and global reach, adds another layer of complexity to this challenge. Enterprises must not only manage their data effectively but also ensure that every aspect of their data handling practices aligns with GDPR’s principles of consent, data minimization, and individual rights. Moreover, the potential for severe financial penalties and reputational damage makes GDPR compliance a board-level concern, requiring ongoing attention and resources. And with the growing use of data generated by large language models, the risk of compliance violations has become increasingly significant.

Recent High-Profile Cases

Recent high-profile cases underscore the critical importance of robust GDPR compliance measures, especially in log file management:

- In 2023, the Irish Data Protection Commission fined Meta €1.2 billion for transferring EU user data to the US without adequate safeguards. This included issues with improper logging and monitoring of data transfers.

- In 2024, the French Data Protection Authority (CNIL) fined Amazon €32 million for excessive employee monitoring, including issues with logging and processing of employee activity data.

These cases illustrate that even tech giants struggle with the complexities of GDPR compliance. As data collection and processing methods become more sophisticated, companies face an escalating challenge: they must implement robust compliance measures that can adapt to evolving technologies and regulatory landscapes, while still maintaining the agility to innovate and compete effectively.

Using the Trustworthy Language Model (TLM) to detect GDPR violations

Our Trustworthy Language Model (TLM) can address enterprise challenges with GDPR compliance by automatically detecting potential GDPR violations in log files and data stores. While all LLMs are fundamentally brittle and occasionally produce incorrect analyses, TLM automatically informs you which LLM analyses are untrustworthy, so your team can manually review just these examples to confidently ensure compliance.

In this article, we use TLM to analyze log files for potential GDPR violations. All of the code, data, and results from this article are made publicly available in this notebook.

Understanding Our Data

The log files analyzed in this study come from a simulated enterprise application environment. They include a mix of authentication logs, transaction records, system status updates, and user activity logs. These logs contain various types of information that could potentially include personal data subject to GDPR, such as user IDs, email addresses, and IP addresses.

By analyzing these logs, we can assess potential GDPR compliance issues in typical enterprise data processing activities. It’s important to note that while this is a simulated dataset, it closely mirrors the types of log entries found in real-world enterprise systems.

Example: Detect GDPR Violations on a Single Log Entry

Here’s a simple example of how to use TLM to analyze a log entry for GDPR violations:

Running this code yields the following result:

Analyzing Log Entries

Now, let’s use our helper functions defined in the accompanying notebook to detect the GDPR violations, compute our trustworthiness scores, and our TLM explanations for less trustworthy outputs from TLM.

Now we will filter our dataset for LLM-detected compliance violations with high vs. low trustworthy scores. For more information on thresholding TLM scores to determine an appropriate value for high and low trustworthiness, refer to the TLM Quickstart Tutorial.

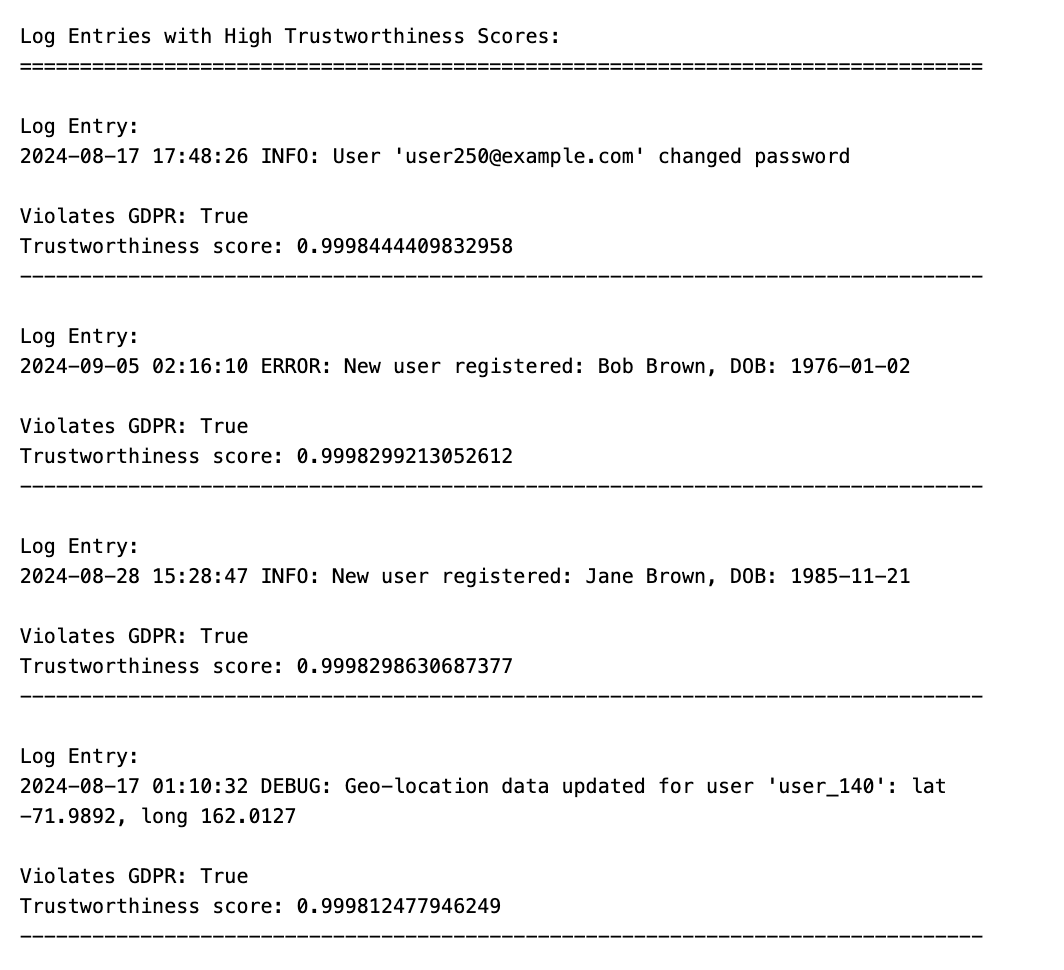

Most trustworthy LLM detections across our log dataset

Our analysis revealed several clear examples where TLM confidently identified GDPR violations with high trustworthiness scores:

These high-confidence detections demonstrate TLM’s ability to automatically identify clear GDPR violations at scale, particularly in cases involving email addresses, personal identifiers, and geolocation data.

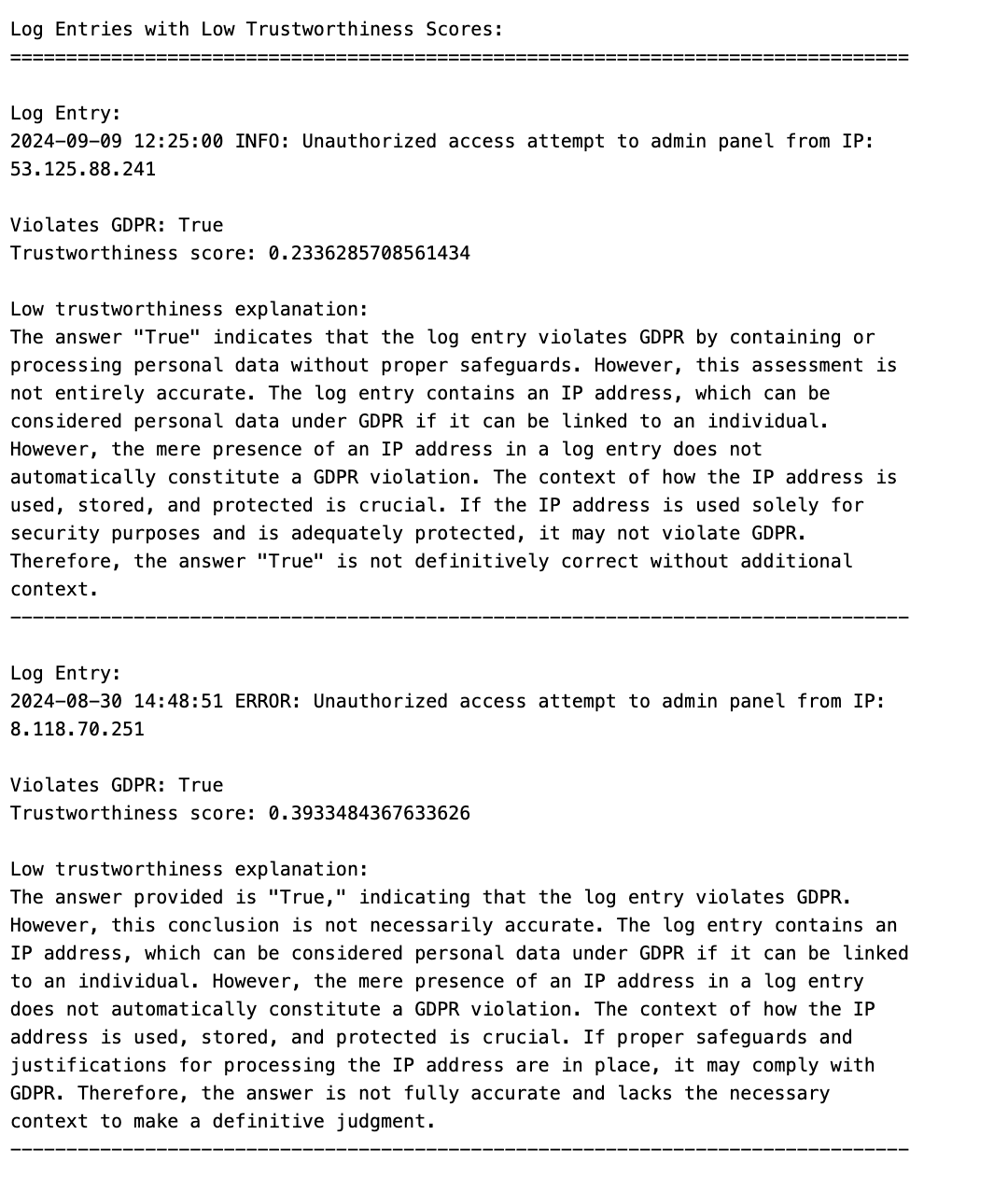

Least trustworthy LLM detections across our log dataset

While TLM is powerful, it’s important to remember that all LLMs are fundamentally brittle and will occasionally produce incorrect analyses when processing large datasets. This is where TLM’s trustworthiness scores become crucial - they automatically flag which analyses might be unreliable and require human review.

Here are some examples where TLM expressed low confidence in its analysis:

These low-trustworthiness cases primarily involve IP address logging in security contexts - a genuinely ambiguous area in GDPR compliance where human expertise is valuable. By automatically identifying these uncertain cases, TLM helps focus human review efforts where they’re most needed.

The Crucial Role of Trustworthiness Scores

TLM’s trustworthiness scores provide significant value beyond what standard LLMs offer:

-

Automatic error detection: While all LLMs can make mistakes, TLM automatically flags its own uncertain outputs, allowing teams to focus manual review efforts where they’re most needed.

-

Confidence-based prioritization: High trustworthiness scores allow teams to confidently automate responses to clear violations, while low scores highlight nuanced cases requiring human expertise.

-

Continuous improvement: By analyzing low-trustworthiness cases, teams can identify patterns and improve their data handling practices or refine the model’s prompts for better future performance.

-

Risk mitigation: In the context of GDPR compliance, where mistakes can be costly, the ability to quantify uncertainty adds an essential layer of risk management.

This unique feature of TLM enables a more efficient, reliable, and adaptable compliance checking process compared to using standard LLMs alone.

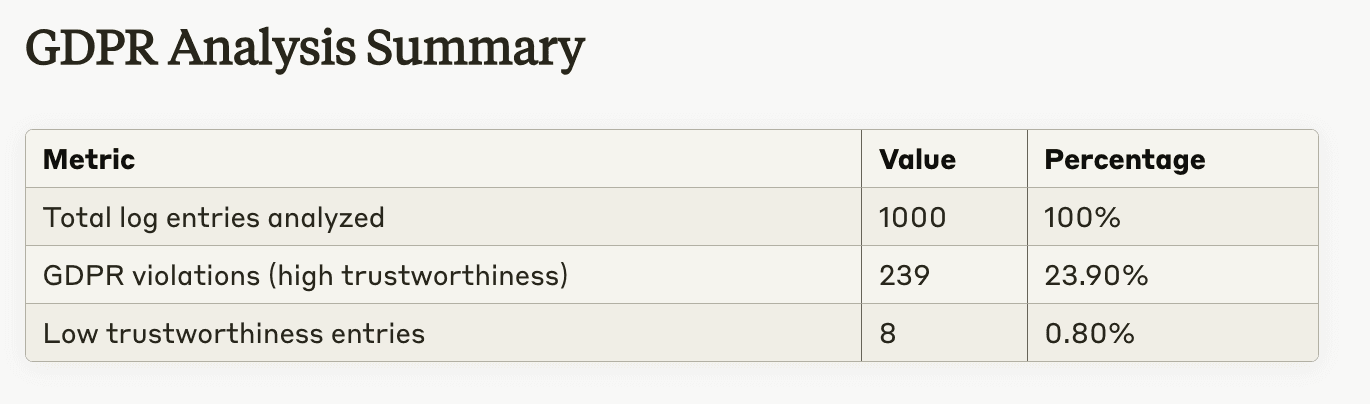

Generating Summary Statistics

Finally, let’s calculate some summary statistics and then save our results for further analysis:

Our analysis of 1000 enterprise log entries revealed significant GDPR compliance concerns:

-

Nearly a quarter of the logs (239 entries) contained clear GDPR violations, including exposed email addresses, personal identifiers, and unprotected geolocation data. These high-confidence detections highlight widespread compliance gaps requiring immediate remediation.

-

The analysis was highly decisive, with TLM expressing uncertainty in only 10 cases. These low-trustworthiness cases primarily involved IP address logging in security contexts, reflecting real-world ambiguity in how GDPR applies to security monitoring practices.

Key Advantages and Findings of Using TLM for GDPR Detection in Log Files

-

High-Confidence Detection and Accuracy:

- Successfully identified 23.90% of logs as GDPR violations with high confidence (trustworthiness scores > 0.95)

- Demonstrated strong contextual understanding across various data formats, including email addresses, user IDs, and geolocation data

- Correctly identified non-violating logs as GDPR-compliant, showing a low false positive rate

-

Efficient Human-in-the-Loop Workflow:

- Only 0.80% of entries flagged as low trustworthiness, primarily involving IP address logging

- Provided detailed explanations for uncertain cases, enabling focused human review, automatically prioritized by trustworthiness score

- Demonstrated consistent uncertainty handling in ambiguous scenarios, particularly with security-related IP logging

-

Enterprise-Ready Capabilities:

- Deploy TLM privately within your own Virtual Private Cloud (VPC) for maximum data security

- Process millions of diverse log entries efficiently at scale

Conclusion

This article demonstrated how TLM can assist enterprises in identifying potential GDPR violations within log files. By classifying log entries and providing trustworthiness scores, TLM enables automated and reliable compliance checking.

The results showed that TLM effectively flagged potential GDPR violations with high confidence, while also identifying edge-cases that require closer human inspection. This approach can significantly reduce manual effort in GDPR compliance while maintaining accuracy and reliability. Although we studied log files here, the same approach can be applied to text in many other formats (structured and unstructured).

For a deeper dive into the code and data used in this analysis, check out our accompanying notebook.

Beyond detecting compliance issues of your choosing, TLM is also useful for automating customized forms of PII detection. Get started with TLM via our API tutorial.