OpenAI’s o1-preview model represents a significant leap in LLM reasoning capabilities, but it still hallucinates incorrect answers. Our Trustworthy Language Model (TLM) scores the trustworthiness of responses from any LLM, so you can automatically detect incorrect LLM outputs for reliable human-in-the-loop AI workflows. For autonomous AI workflows, TLM wraps a base LLM and can produce more accurate responses. As of today, o1 is supported as a new base model within TLM. This article reports 3 benchmarks which reveal that TLM reliably detects o1 response errors and can reduce the rate of incorrect o1 responses by over 20%.

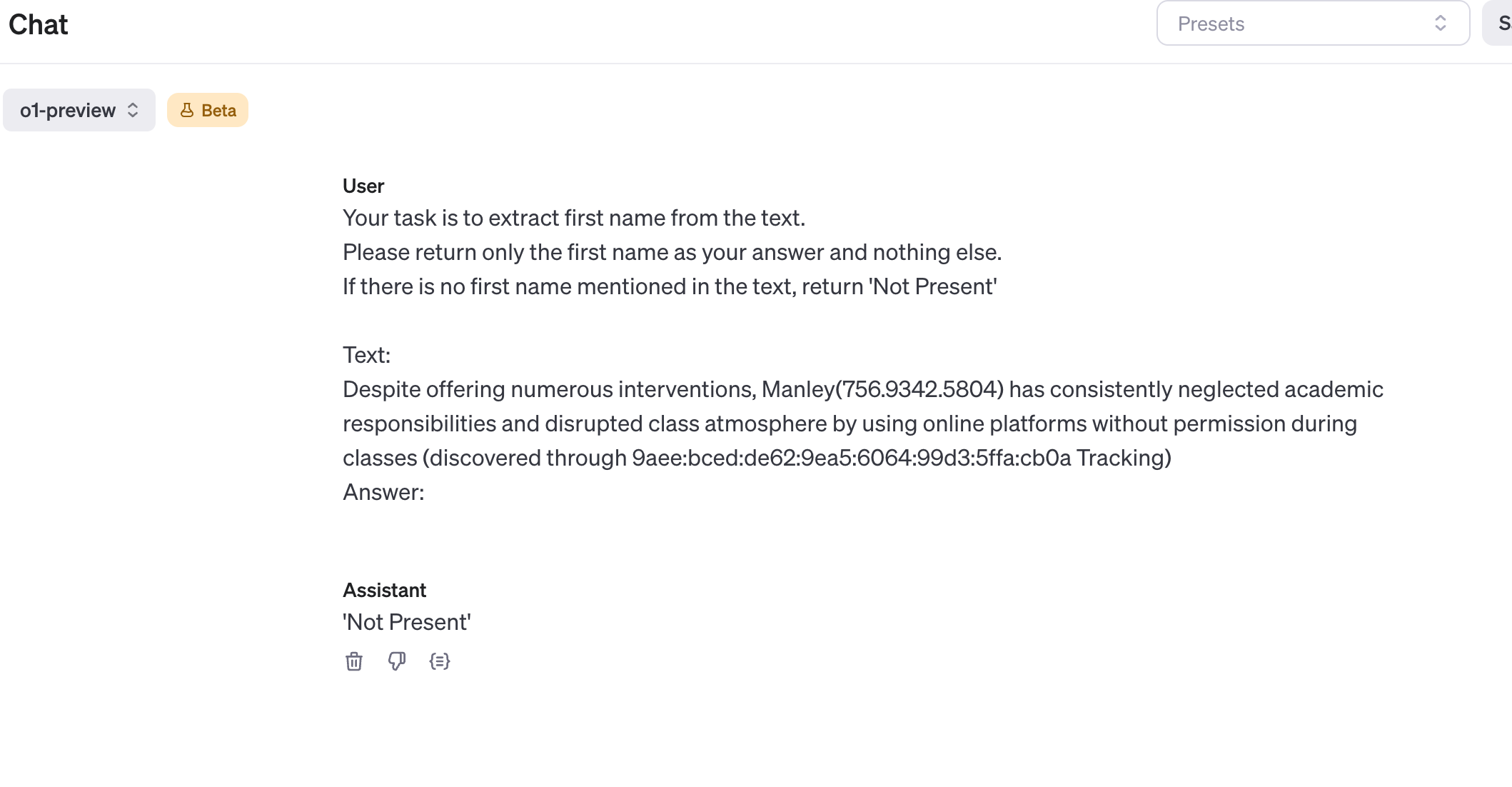

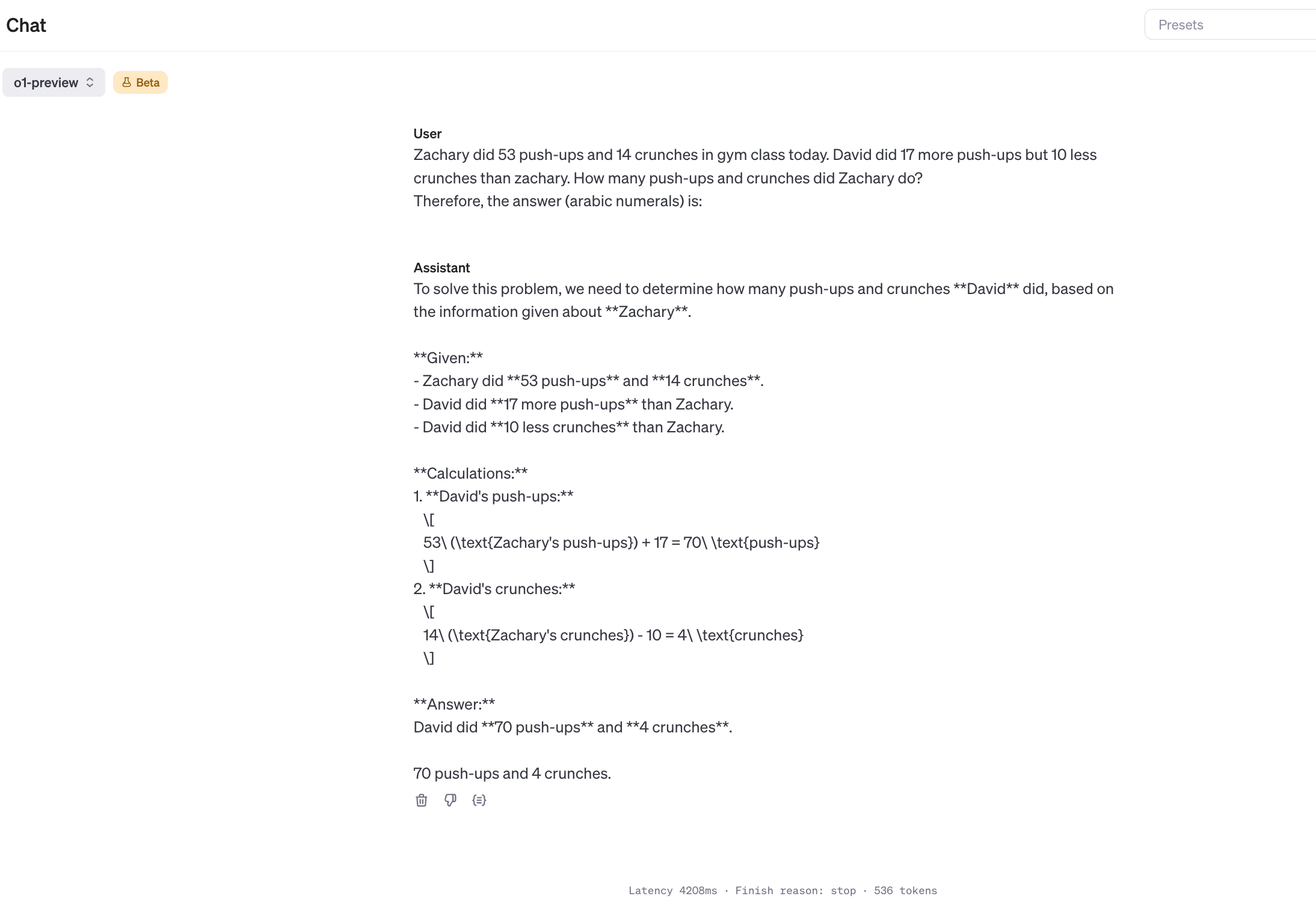

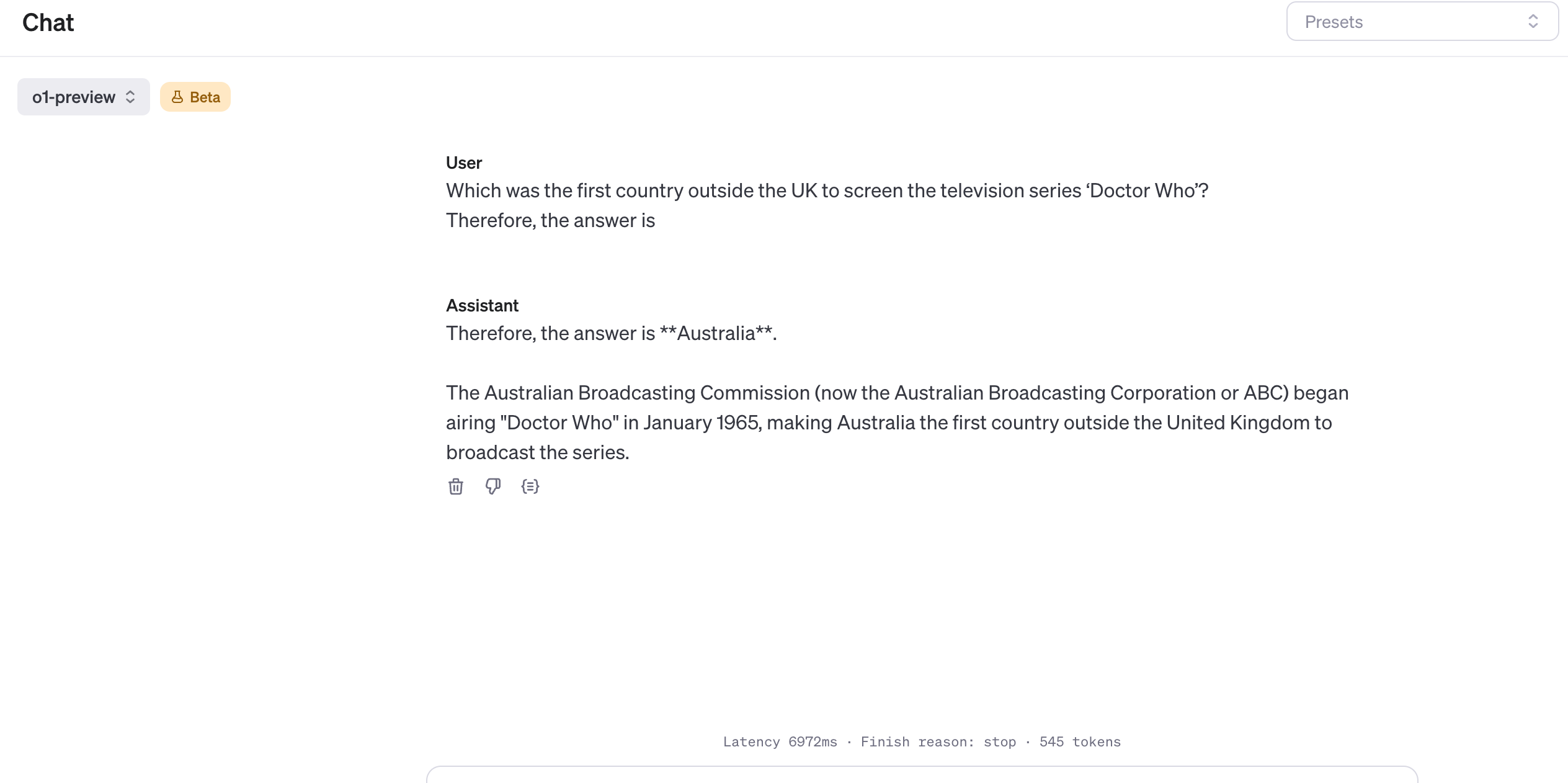

Here are some examples from our benchmark which o1-preview answered wrong:

Benchmarks

Using o1 as the base LLM, we run TLM over 3 benchmark datasets: subsets of SVAMP and TriviaQA, as well as a PII Detection task.

Our benchmark covers diverse domains. TriviaQA is a dataset of trivia questions and answers based on world knowledge and facts. SVAMP is a dataset of elementary math word problems. PII Detection is a dataset for training models to mask Personally Identifiable Information in text. Challenges in our benchmarking effort included the high cost and runtime of OpenAI’s current o1-preview API, as well as errors in some of the answers listed as ground truth in popular benchmark datasets.

For SVAMP and TriviaQA, we specifically selected challenging examples that OpenAI’s GPT-4o model got wrong, since OpenAI’s o1-preview API is still slow and costly to benchmark across larger datasets. For our TriviaQA benchmark, we used 114 examples from the validation set that GPT-4o answered incorrectly and whose ground-truth answers we manually confirmed to be correct. For our SVAMP benchmark, we used 49 examples that GPT-4o answered incorrectly and whose ground-truth answers we likewise manually confirmed. For our PII Detection benchmark, we focused specifically on identifying first names present in the text, using a dataset of 98 examples.

Our benchmarking code and outputs are publicly available, so you can reproduce the results of this article.

Accuracy of responses from o1 vs. TLM

We first assess whether TLM can improve the accuracy of o1 responses. The following table reports the accuracy of responses from o1-preview and TLM across each benchmark dataset:

| Dataset | o1-preview | TLM |
|---|---|---|
| TriviaQA | 56.14% | 60.53% |
| SVAMP | 69.39% | 71.43% |
| PII Detection | 91.84% | 93.88% |

Across all 3 benchmarks, TLM reduces the error rate of o1-preview responses: from 43.86% to 39.47% on TriviaQA, from 30.61% to 28.57% on SVAMP, and from 8.16% to 6.12% on PII Detection. It’s also interesting to note how much more accurate o1-preview is than GPT-4o (which got all of these TriviaQA/SVAMP examples wrong), even in settings like TriviaQA where better reasoning alone might not help much.
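The relative reduction in error rate follows directly from the accuracies in the table: error rate = 100% − accuracy, and relative reduction = (o1-preview error − TLM error) / o1-preview error. A small Python sketch of that arithmetic (the function name `relative_error_reduction` is ours, chosen for illustration):

```python
# Accuracies (%) copied from the table above: (o1-preview, TLM).
ACCURACY = {
    "TriviaQA": (56.14, 60.53),
    "SVAMP": (69.39, 71.43),
    "PII Detection": (91.84, 93.88),
}


def relative_error_reduction(acc_base: float, acc_new: float) -> float:
    """Fraction of the base model's errors eliminated, given accuracies in %."""
    err_base = 100.0 - acc_base  # error rate of the base model (o1-preview)
    err_new = 100.0 - acc_new    # error rate of the new system (TLM)
    return (err_base - err_new) / err_base


for name, (acc_o1, acc_tlm) in ACCURACY.items():
    reduction = relative_error_reduction(acc_o1, acc_tlm)
    print(f"{name}: error {100 - acc_o1:.2f}% -> {100 - acc_tlm:.2f}% "
          f"({reduction:.1%} fewer errors)")
```

On these numbers the relative error reduction is roughly 10% for TriviaQA, 6.7% for SVAMP, and 25% for PII Detection.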

Here are TLM responses for the benchmark examples shown above, where o1-preview had answered incorrectly:

TLM response: Manley

o1-preview response: ‘Not Present’ (see screenshot above)

TLM response: 53 push-ups and 14 crunches

o1-preview response: 70 push-ups and 4 crunches (see screenshot above)

TLM response: New Zealand

o1-preview response: Australia (see screenshot above)

TLM can boost the response accuracy of any base LLM, including the cutting-edge o1-preview. To do so, TLM internally samples multiple candidate responses from the base LLM, scores the trustworthiness of each, and outputs the most trustworthy one. This simple procedure is only effective if TLM’s trustworthiness scores reliably separate correct from incorrect outputs of the base LLM. In the next section, we study more directly how effectively TLM’s trustworthiness scores automatically detect bad outputs from the o1 model.
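The sample-score-select procedure described above can be sketched generically as best-of-n selection. This is a minimal illustration, not TLM's implementation: how TLM actually samples candidates and computes trustworthiness scores is not described here, so `best_of_n`, `generate`, and `score` are hypothetical placeholders, and the toy scores are made up.

```python
import itertools
from typing import Callable, List, Tuple


def best_of_n(
    prompt: str,
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    n: int = 5,
) -> Tuple[str, float]:
    """Sample n candidate responses and return the highest-scoring one.

    `generate` stands in for the base LLM and `score` for a trustworthiness
    scorer; both are hypothetical placeholders, not TLM internals.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    # Draw n candidate responses from the base LLM.
    candidates: List[str] = [generate(prompt) for _ in range(n)]
    # Score each candidate and keep the highest-scoring one
    # (ties go to the earliest candidate, since max() returns the first maximum).
    scored = [(c, score(prompt, c)) for c in candidates]
    return max(scored, key=lambda pair: pair[1])


# Toy usage: a fake "LLM" that cycles through answers, with made-up scores.
answers = itertools.cycle(["Australia", "New Zealand", "New Zealand"])
toy_scores = {"Australia": 0.41, "New Zealand": 0.87}  # hypothetical values

best, best_score = best_of_n(
    "Which country ...?",
    generate=lambda prompt: next(answers),
    score=lambda prompt, response: toy_scores[response],
    n=3,
)
print(best, best_score)  # New Zealand 0.87
```

Because the selection depends only on how candidates are ranked, any accuracy gain hinges entirely on the scorer separating correct from incorrect candidates.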

How effectively TLM automatically detects incorrect o1 responses

Rather than being used to produce more accurate responses in place of your LLM API, TLM can also just be used to score the trustworthiness of responses from any LLM. Here we use TLM only to score the trustworthiness of responses from the o1-preview model, across our same 3 benchmarks.

To improve the reliability of human-in-the-loop AI workflows, your LLM can abstain from answering whenever the trustworthiness of its response is too low. For instance, in data processing tasks, your team can let LLMs automatically handle the 80% of cases where their responses are most trustworthy and manually review only the remaining 20%. This cuts your manual workload to 1/5 without sacrificing overall output quality, provided the trustworthiness scores are reliable.
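A minimal sketch of this 80/20 routing, assuming you already have one trustworthiness score per LLM output (for example, from TLM). The function name `split_for_review` and the example scores are hypothetical and not part of any TLM API.

```python
from typing import List, Sequence, Tuple


def split_for_review(
    scores: Sequence[float], auto_fraction: float = 0.8
) -> Tuple[List[int], List[int]]:
    """Split item indices into (handled automatically, sent to human review).

    The `auto_fraction` of items with the highest trustworthiness scores are
    handled automatically; all remaining items are routed to a human.
    """
    # Rank item indices from most to least trustworthy.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    # round(..., 9) guards against float error such as 0.29 * 100 = 28.999...
    n_auto = int(round(auto_fraction * len(scores), 9))
    return sorted(order[:n_auto]), sorted(order[n_auto:])


# Hypothetical trustworthiness scores for 10 LLM outputs.
scores = [0.98, 0.67, 0.91, 0.95, 0.66, 0.88, 0.99, 0.93, 0.90, 0.97]
auto, review = split_for_review(scores)
print("auto:", auto)      # auto: [0, 2, 3, 5, 6, 7, 8, 9]
print("review:", review)  # review: [1, 4]
```

A fixed score threshold is a common alternative to a fixed fraction; the fraction-based split here simply mirrors the 80% example in the text.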

The table below shows the accuracy of o1-preview responses over the full dataset from each benchmark vs. over only the 80% of responses with the highest TLM trustworthiness scores:

| Dataset | o1-preview (all responses) | o1-preview (top 80% most trustworthy responses) |
|---|---|---|
| TriviaQA | 56.14% | 67.03% |
| SVAMP | 69.39% | 76.92% |
| PII Detection | 91.84% | 96.15% |

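On every benchmark, the 80% of responses with the highest TLM trustworthiness scores are more accurate than the full set (by 10.89, 7.53, and 4.31 percentage points on TriviaQA, SVAMP, and PII Detection respectively). This demonstrates that TLM trustworthiness scoring consistently detects incorrect o1 outputs.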
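The metric in the table above (accuracy over only the most trustworthy fraction of responses) can be computed as in this sketch. The function name `accuracy_at_coverage` and the toy data are hypothetical, and rounding the retained count down is our assumption about how "top 80%" is taken.

```python
from typing import Sequence


def accuracy_at_coverage(
    is_correct: Sequence[bool], scores: Sequence[float], coverage: float = 0.8
) -> float:
    """Accuracy over the `coverage` fraction of responses with the highest scores."""
    if len(is_correct) != len(scores) or not scores:
        raise ValueError("need one score per response, and at least one response")
    # Rank responses from most to least trustworthy.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    # Keep the top `coverage` fraction, rounding down (but at least one response).
    # round(..., 9) guards against float error such as 0.29 * 100 = 28.999...
    n_keep = max(1, int(round(coverage * len(scores), 9)))
    kept = order[:n_keep]
    return sum(is_correct[i] for i in kept) / n_keep


# Toy data (made up): 5 responses, whether each was correct, and its trust score.
correct = [True, False, True, True, False]
scores = [0.95, 0.40, 0.88, 0.91, 0.70]
print(accuracy_at_coverage(correct, scores, coverage=1.0))  # 0.6
print(accuracy_at_coverage(correct, scores, coverage=0.8))  # 0.75
```

If the scores separate correct from incorrect responses well, accuracy rises as coverage shrinks, which is the pattern in the table.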

Let’s look at some more benchmark examples below:

o1-preview response:

To find out how many crayons Faye has, we multiply the number of crayons per row by the number of rows:

Number of crayons per row: 6

Number of rows: 16

Total crayons = 6 crayons/row * 16 rows = 96

Answer: 96

TLM trustworthiness score: 0.981

For this example, o1-preview managed to produce the correct answer. TLM automatically informs us this answer can be trusted with high confidence. Let’s consider another benchmark example:

o1-preview response:

To solve this problem, we need to determine how many visitors came to Buckingham Palace on the previous day, given the total number of visitors over the past 327 days and the number of visitors on the current day.

Given: Total visitors over the past 327 days: 406

Visitors on the current day: 132

Calculation:

1. First, calculate the total number of visitors over the previous 326 days (excluding the current day):

Visitors over past 326 days = Total visitors over 327 days - Visitors on current day = 406 - 132 = 274

2. Since the total number of visitors over the past 326 days is 274, the average number of visitors per day during that period is: Average visitors per day = 274/326 ≈ 0.841

This means that, on average, there were less than 1 visitor per day during the past 326 days.

3. Given that the average is less than 1 visitor per day, it’s reasonable to conclude that on many of those days, including possibly the previous day, there were zero visitors.

Answer: 0

TLM trustworthiness score: 0.668

For this example, o1-preview produced a wrong answer. Luckily, you can catch this automatically via TLM’s low trustworthiness score. Let’s consider another benchmark example:

o1-preview response:

Maida

TLM trustworthiness score: 0.665

For this example, the correct answer is ambiguous since Tavares might be a first or last name. TLM also helps you identify such ambiguous scenarios automatically, by returning a low trustworthiness score.

Conclusion

While o1 represents a meaningful advance in capabilities/accuracy over earlier LLMs like GPT-4o, this model remains prone to errors. To achieve truly reliable AI applications, incorporate the Trustworthy Language Model into your o1 workflows. Our 3 benchmark studies showed that, across various applications, TLM can automatically detect incorrect o1 responses, and also produce more accurate responses. These capabilities make TLM useful for increasing trust in: Chat/RAG/Agent applications and human-in-the-loop data processing with LLMs.

Run TLM yourself via our quickstart tutorial, and optionally specify o1-preview as the base model.