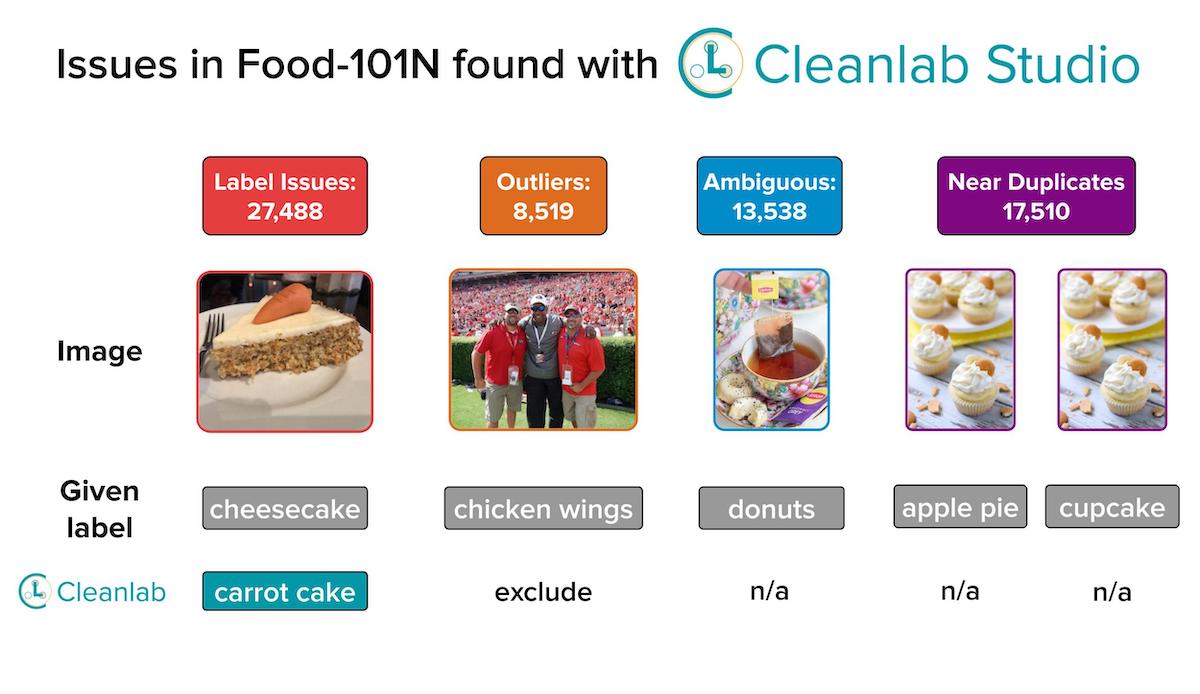

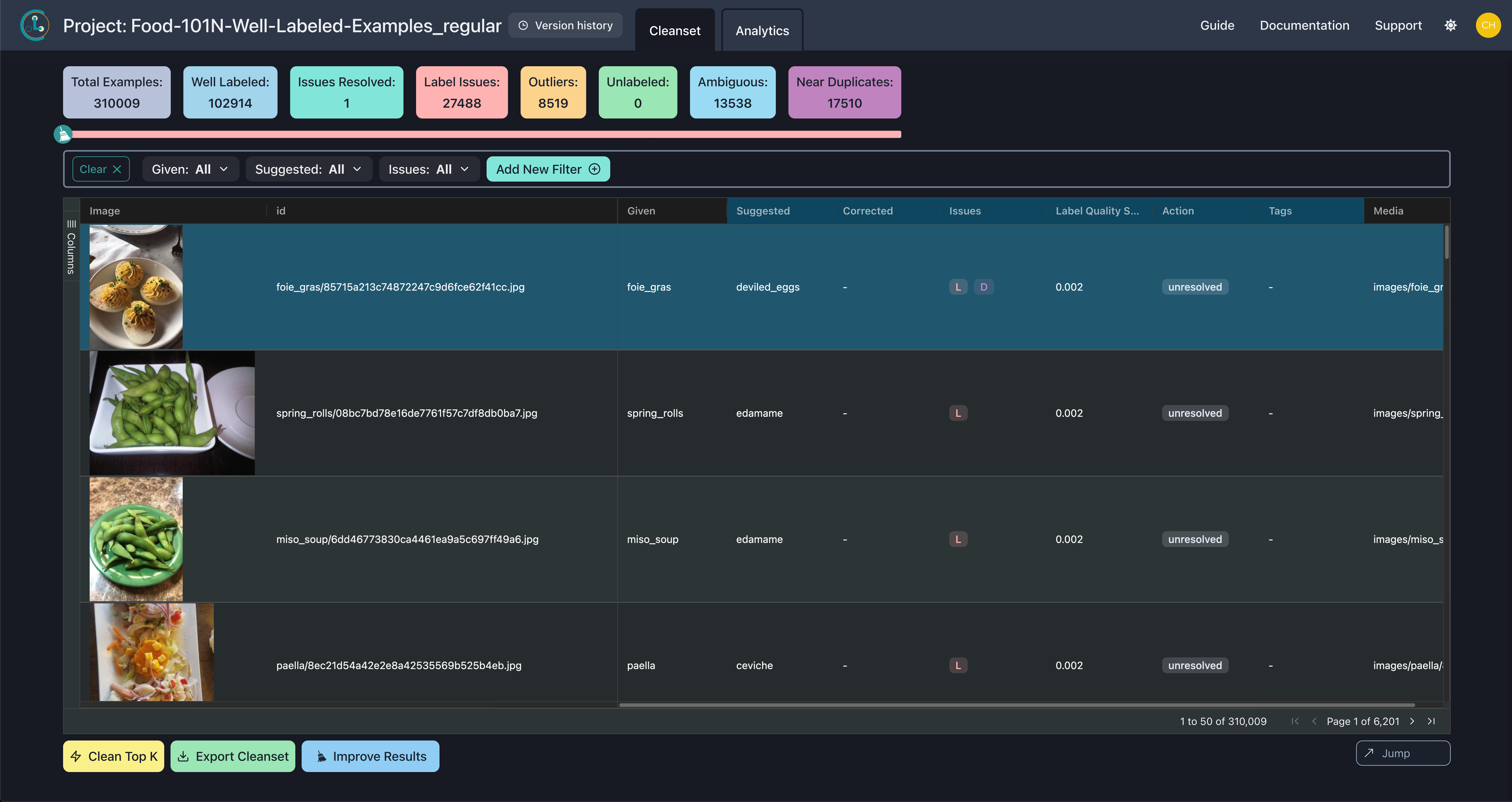

What's a CSA? Much like a PSA, a CSA (Cleanlab Studio Audit) is our way of informing the community about issues in popular datasets. To glean these insights, we quickly run popular datasets through Cleanlab Studio, an automated solution that uses AI to find and fix data issues.

Here we consider the Food-101N dataset, a variant of the Food-101 dataset that has been used in over 400 publications. This dataset contains 101k images across 101 food categories such as carrot_cake, ice_cream, and waffles. Just by quickly running this famous computer vision dataset through Cleanlab Studio, we discovered thousands of label issues, outliers, ambiguous examples, and (near) duplicates.

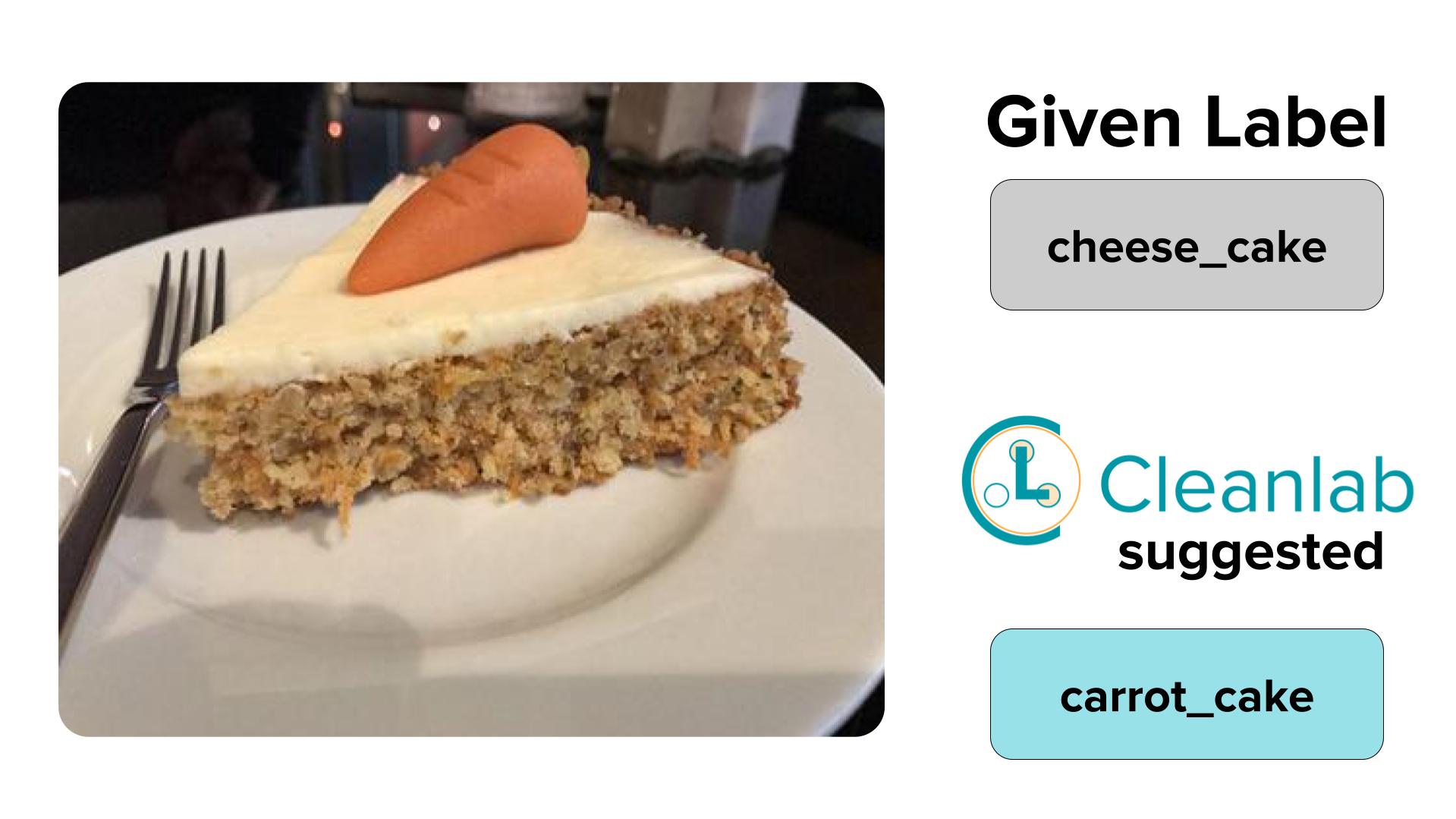

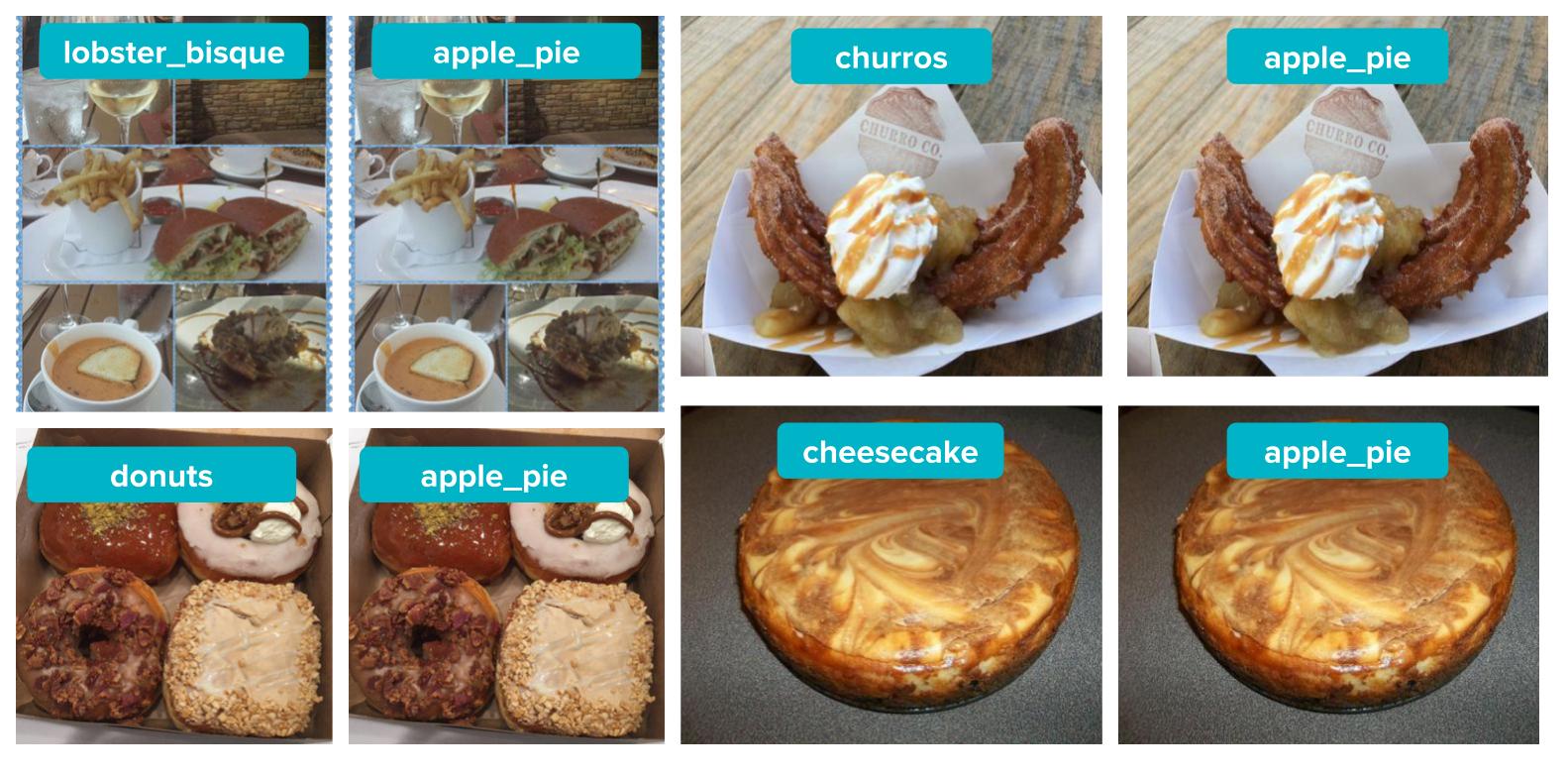

Above is one image that Cleanlab Studio automatically identified as mislabeled, along with the more appropriate label it suggested instead. This food item was labeled as a piece of cheesecake when it is clearly a piece of carrot cake. The video below shows the top detected label issues in Cleanlab Studio’s web interface: for most of these images, the Given label in the original data is incorrect, and Cleanlab Studio has automatically Suggested a more appropriate label to consider.

The authors of this dataset noted that “[Food-101N]…has [many] more images and is more noisy…” than the original Food-101 dataset. This disclaimer is a step in the right direction, but it does not tell you which examples are affected; Cleanlab Studio pinpoints the problematic examples and equips you with the tools to address them if necessary. Interestingly, the Food-101N authors did not mention outliers, ambiguous examples, or duplicates in their disclaimer, even though, as we show below, all three are prevalent in the dataset.

27,488 Mislabeled Examples Found

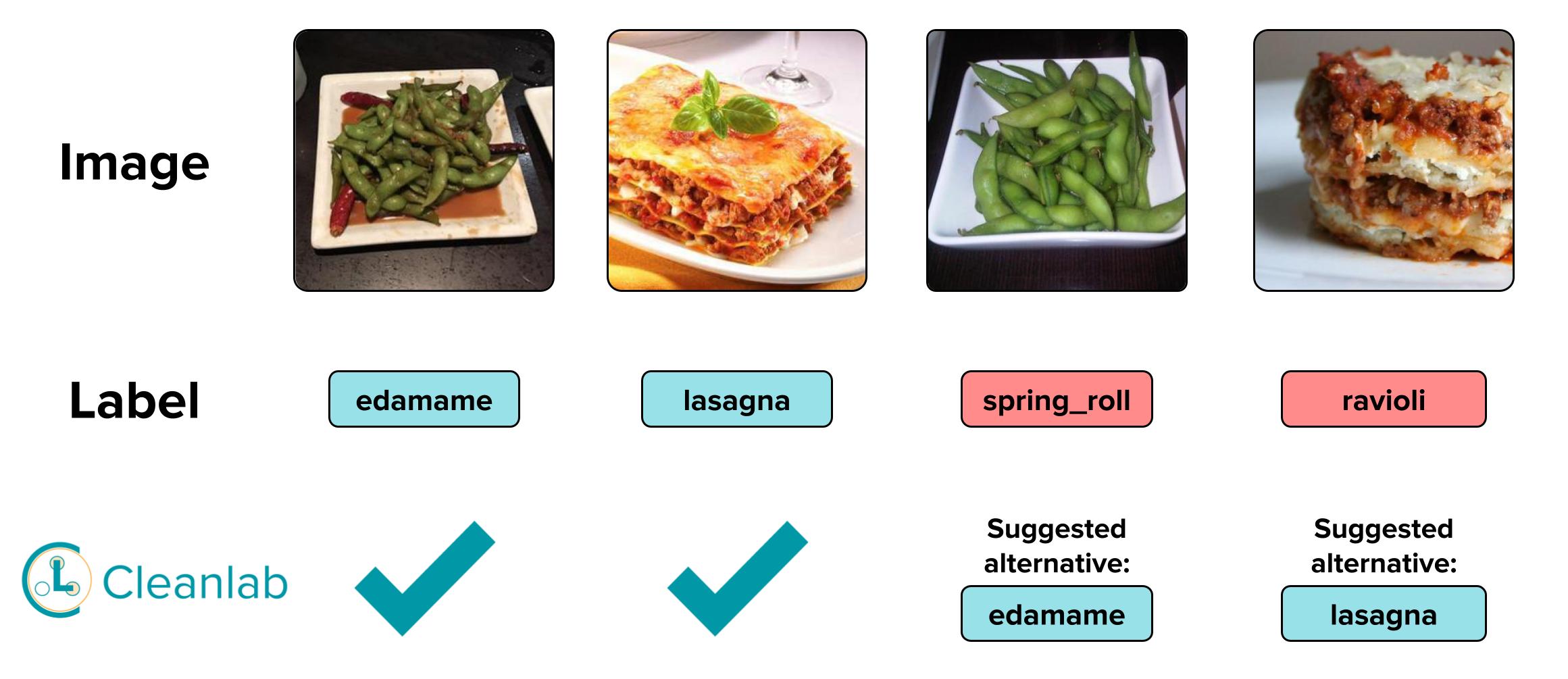

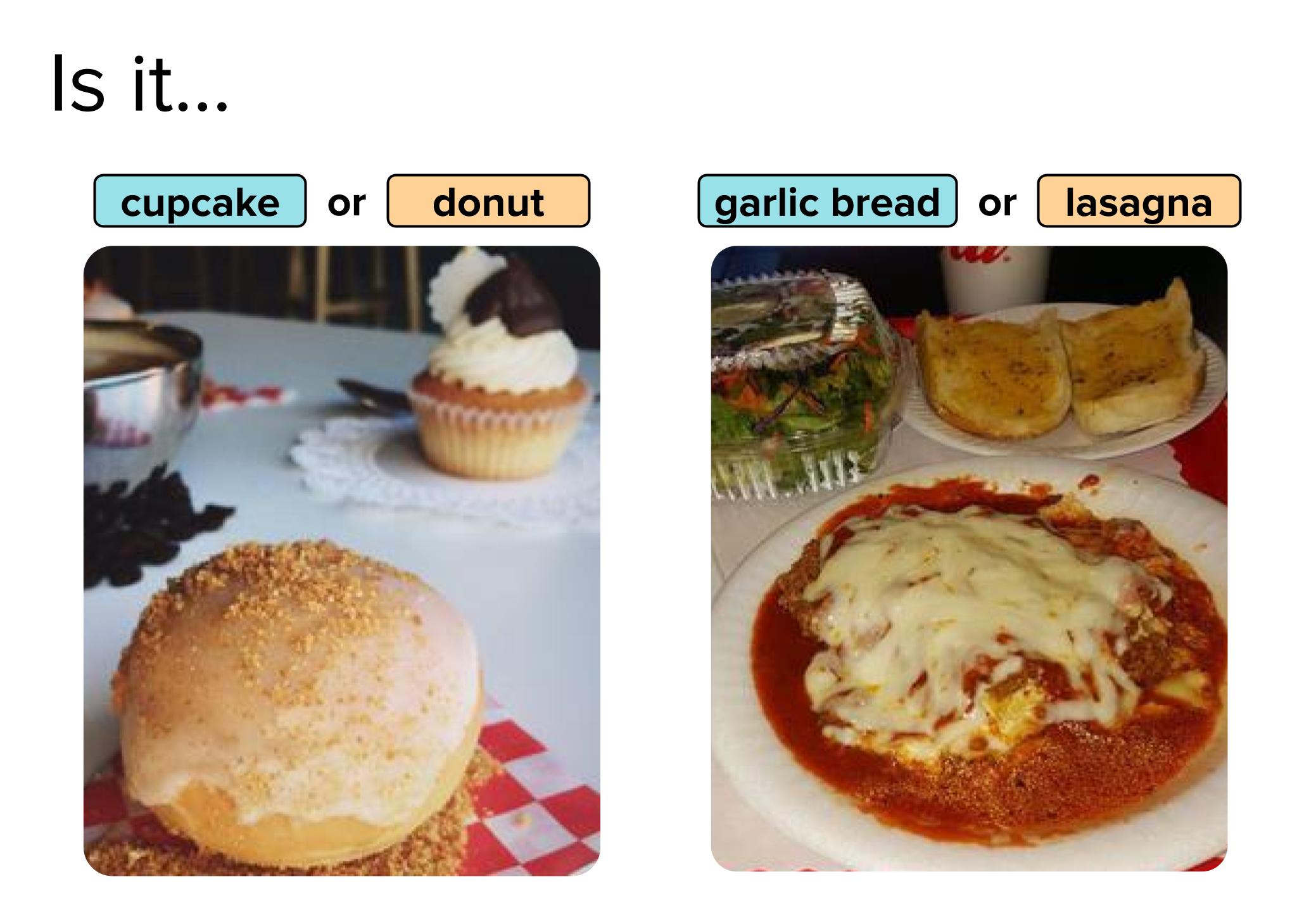

Cleanlab Studio automatically found thousands of incorrectly labeled examples and suggested more appropriate labels for them. Below are two examples of food items that are labeled incorrectly.

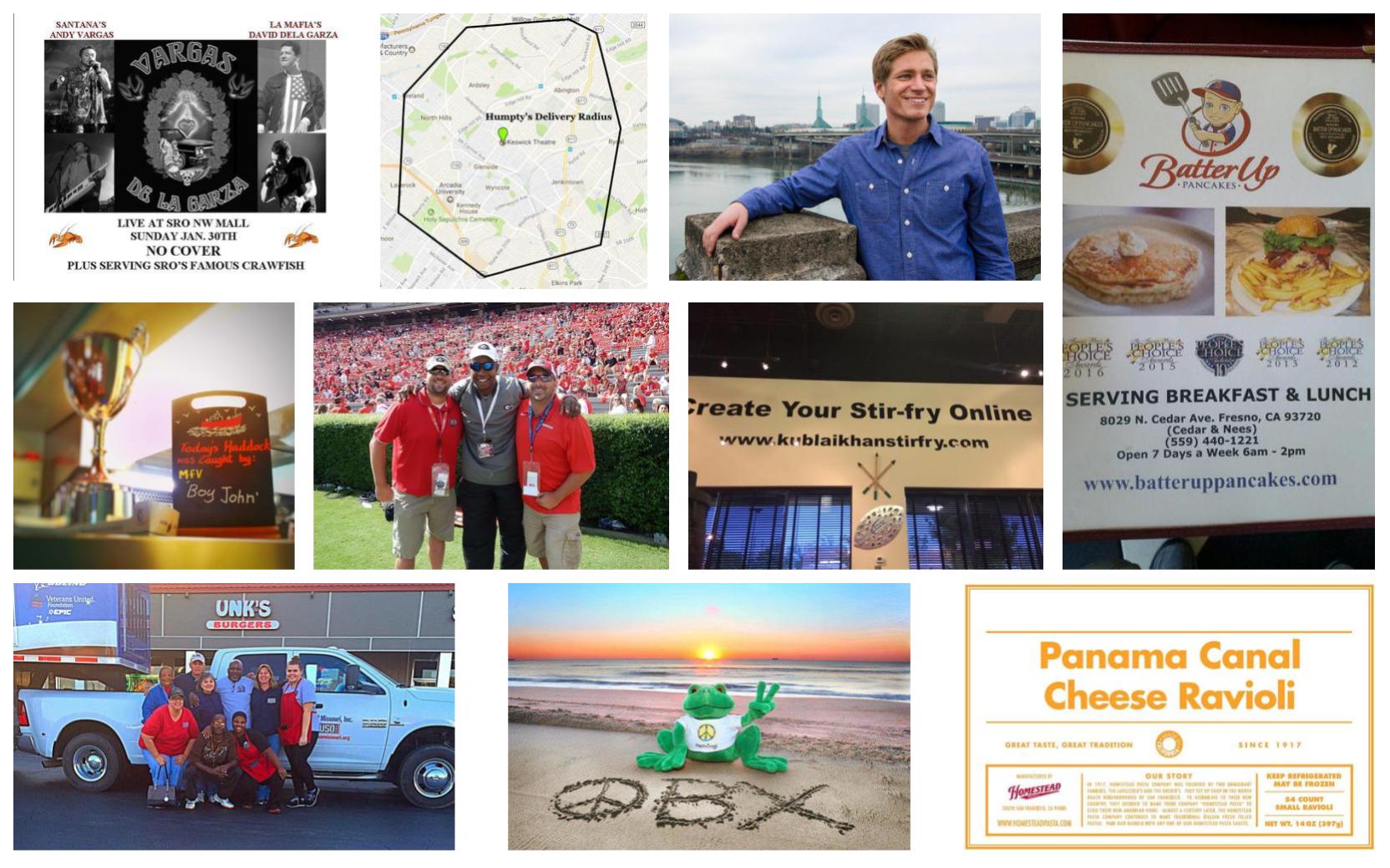

8,519 Outliers Found

Some images do not belong to any of the target classes and should be removed from the dataset entirely. Below are some examples that Cleanlab Studio automatically detected as outliers: images that cannot be correctly labeled as any of the 101 food categories.

13,538 Ambiguous Examples Found

Cleanlab Studio also found many ambiguous images. These are images whose true label is unclear, either because more than one label could be correct or because no label applies perfectly. Below are two examples of ambiguous images.

17,510 (Near) Duplicate Examples Found

Cleanlab Studio found many duplicate images, including pairs in which one copy was labeled correctly and the other was not. Duplicates with conflicting labels are a problem because they give a model contradictory training signal for what is essentially the same input.
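This post does not describe how Cleanlab Studio detects (near) duplicates. As a much simpler, hypothetical illustration of the conflicting-label problem, the Python sketch below flags only exact byte-for-byte duplicates (grouped by a SHA-256 hash of the image bytes) whose labels disagree. The `(example_id, image_bytes, label)` record format and the function name `find_conflicting_duplicates` are assumptions made for this example. Catching near duplicates (resized, recompressed, or cropped copies) would instead require comparing perceptual hashes or image embeddings.

```python
import hashlib
from collections import defaultdict


def find_conflicting_duplicates(examples):
    """Hypothetical helper: group exact duplicates and return groups whose labels disagree.

    `examples` is an iterable of (example_id, image_bytes, label) tuples; this record
    format is an assumption for illustration, not Cleanlab Studio's API.
    """
    groups = defaultdict(list)
    for example_id, image_bytes, label in examples:
        # Identical bytes produce identical digests, so this only catches exact copies.
        digest = hashlib.sha256(image_bytes).hexdigest()
        groups[digest].append((example_id, label))

    conflicts = []
    for members in groups.values():
        labels = {label for _, label in members}
        # A hash cannot tell which label is right; it only shows that the copies disagree.
        if len(members) > 1 and len(labels) > 1:
            conflicts.append(sorted(members))
    return conflicts


# Toy usage with placeholder bytes standing in for real image files.
examples = [
    ("img_001", b"cake-bytes", "carrot_cake"),
    ("img_002", b"cake-bytes", "cheesecake"),
    ("img_003", b"waffle-bytes", "waffles"),
]
print(find_conflicting_duplicates(examples))
# → [[('img_001', 'carrot_cake'), ('img_002', 'cheesecake')]]
```

Duplicates that share the same label are not flagged here, since they do not send contradictory signal; whether to deduplicate them anyway is a separate decision.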

Fix data with Cleanlab Studio

The data errors that Cleanlab Studio’s AI detected are detrimental to modeling and analytics efforts. It’s important to know about such errors in your data and correct them in order to train the best models and draw the most accurate conclusions. To find & fix such issues in almost any dataset (text, image, table/CSV/Excel, etc.), just run it through Cleanlab Studio!

Want to get featured?

If you’ve found interesting issues in any dataset and want to share them with the community, your findings can be featured in a future CSA! Just fill out this form.