Content moderation is critical to ensuring that user-posted content on online platforms complies with established guidelines and community/safety standards. The volume of content generated daily makes manual moderation impractical, so platforms rely on automated content moderation, although many of the systems in use today detect only limited types of issues. Here we demonstrate how Cleanlab Studio, an AI-powered data curation tool, can automatically flag all sorts of potentially problematic text content, enabling expansive content moderation that ensures not only safety and privacy, but also the quality of your content.

Amazon Magazine Reviews

To showcase the variety of text issues that Cleanlab Studio can automatically detect, this article considers an Amazon Reviews dataset, which contains customer reviews for various magazines.

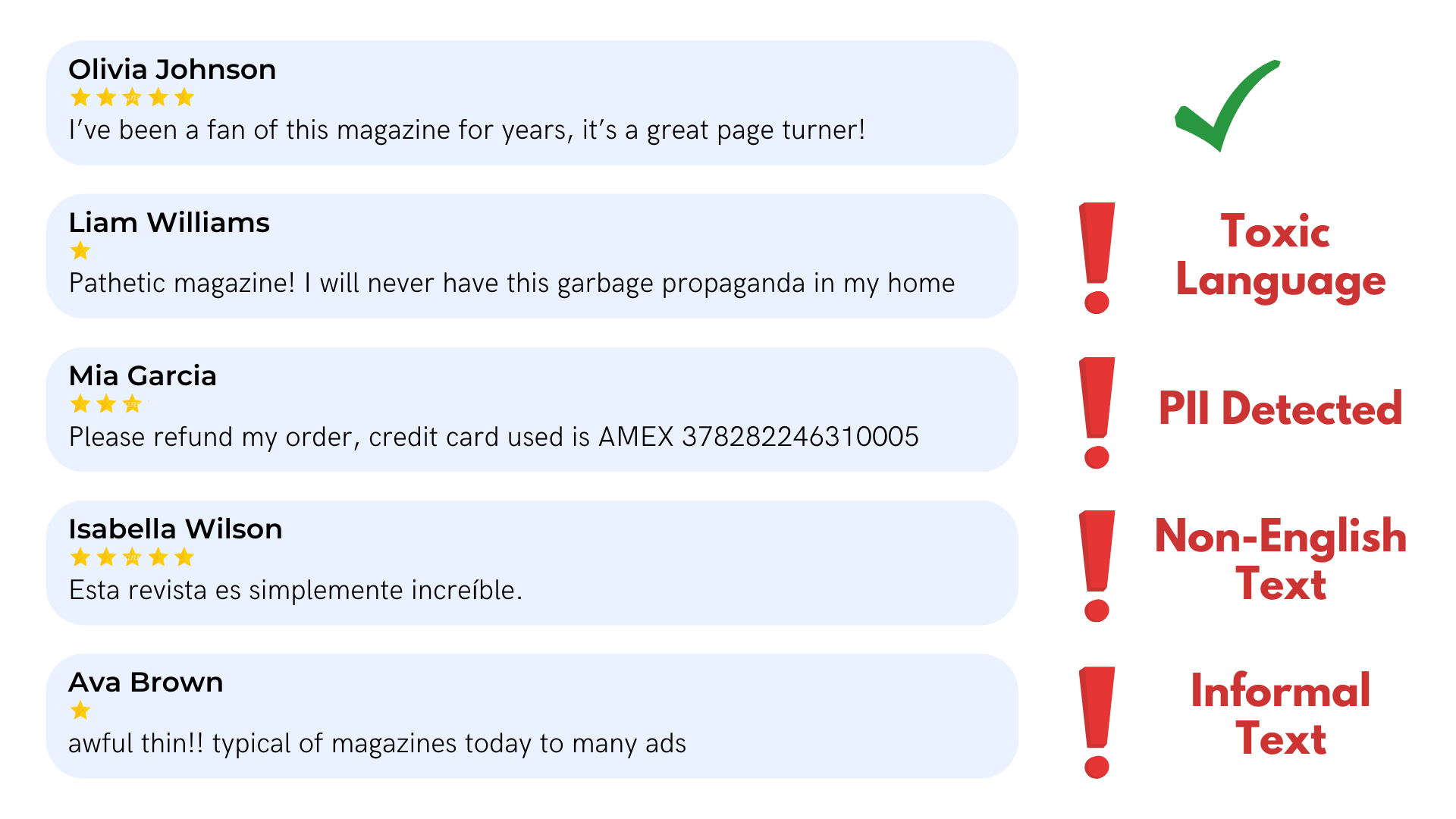

Here are a few sample reviews from that dataset:

This is a wonderful magazine for Women over 40 years old. Great beauty tips and other info for our age group.

It was ok, not that great.

It’s just okay. Don’t expect much when you order it through Amazon for cheap - pretty sure it’s about half of their regular issue size.

The Economist is the best single periodical for keeping up with current events across politics, international affairs, science, economics and more.

These reviews don’t sound any alarm bells, but it’s hard to know whether there are other more problematic reviews in this dataset. We can easily run this dataset through Cleanlab Studio, which automatically flags reviews containing various forms of problematic text. Note that Cleanlab Studio is a comprehensive data quality platform: beyond the text issues highlighted in this article, it can additionally detect all sorts of other issues in this dataset such as mislabeled/miscategorized text, anomalous text, nearly duplicated text, ambiguous text, … Here we will just focus on the content-related quality checks available in this tool. While other software exists for diagnosing some of these issues in text data, no other tool comprehensively detects as many important types of problems, or detects them as accurately, all without you having to write a single line of code.

Text Issues Flagged by Cleanlab Studio

Toxic Language

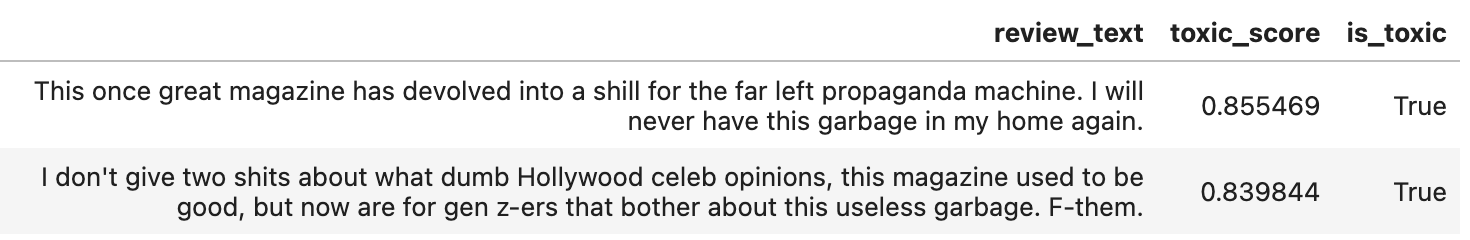

Within a large text dataset, Cleanlab Studio can auto-detect language that is offensive, harmful, aggressive, or derogatory towards others. Such toxic language can create hostile environments and promote cyberbullying/harassment, so it is critical to catch early. Here are a couple of reviews in our Amazon Reviews dataset that have been automatically flagged by Cleanlab Studio as containing toxic language:

These reviews contain derogatory language and profanities, as well as some harsh criticisms: content that may not be appropriate on your platform. Note that each review receives a numeric toxic_score which quantifies how severely toxic the corresponding text is estimated to be. You can choose to threshold these scores at a different level to flag less/more of the text as toxic, depending on your needs.
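As a rough sketch of what thresholding these scores could look like once the results are exported, the snippet below filters rows by toxic_score in plain Python. The row layout (a list of dictionaries), the helper name flag_toxic, and the example rows are assumptions made for illustration; they are not actual Cleanlab Studio output or part of its API.

```python
# Illustrative rows only: the texts and scores are placeholders,
# not real Cleanlab Studio results.
reviews = [
    {"text": "placeholder review A", "toxic_score": 0.91},
    {"text": "placeholder review B", "toxic_score": 0.55},
    {"text": "placeholder review C", "toxic_score": 0.08},
]


def flag_toxic(rows, threshold=0.5):
    """Return rows whose toxic_score is at or above the threshold, most severe first."""
    flagged = [row for row in rows if row["toxic_score"] >= threshold]
    return sorted(flagged, key=lambda row: row["toxic_score"], reverse=True)


# A lower threshold flags more text; a higher one keeps only the most severe cases.
strict = flag_toxic(reviews, threshold=0.5)
lenient = flag_toxic(reviews, threshold=0.8)
print(len(strict), len(lenient))
```

Which threshold is appropriate depends on how much borderline content your platform is willing to review by hand.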

By automatically flagging such harmful content with the help of Cleanlab Studio, you can ensure a safer and more inclusive environment for your users.

Personally Identifiable Information (PII)

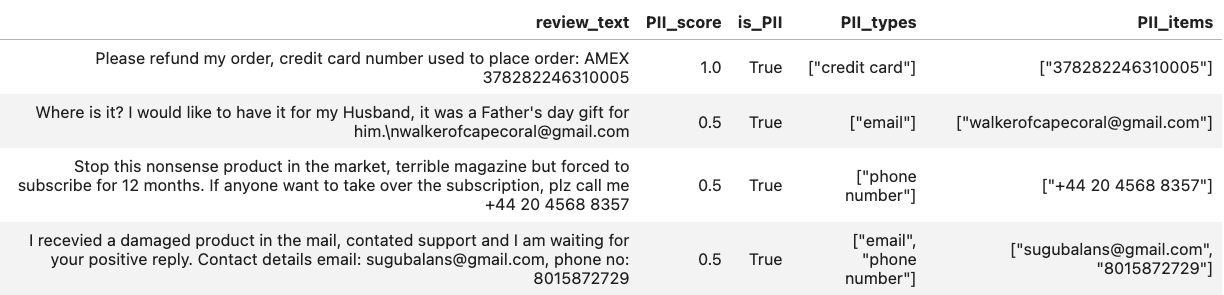

Many text datasets in companies contain Personally Identifiable Information (PII) that could be used to identify an individual or is otherwise sensitive. Safeguarding the PII of your users/customers and ensuring it is not leaked in public content is critical to the long-term success of your business.

Let’s take a look at some PII that Cleanlab Studio has automatically flagged in this Amazon Reviews dataset:

Cleanlab Studio automatically discovered some concerning PII in the reviews: someone has publicly shared their credit card number! That sensitive information should definitely not be disclosed in a review on a public website. With an automated tool such as Cleanlab Studio, platforms can quickly identify these pieces of PII or sensitive information, and take the appropriate actions to protect the security of their users. Each type of detected PII receives a particular score, with more sensitive forms of PII (like credit card numbers) receiving higher scores than less sensitive forms of PII (like emails or phone numbers).

Non-English Detection

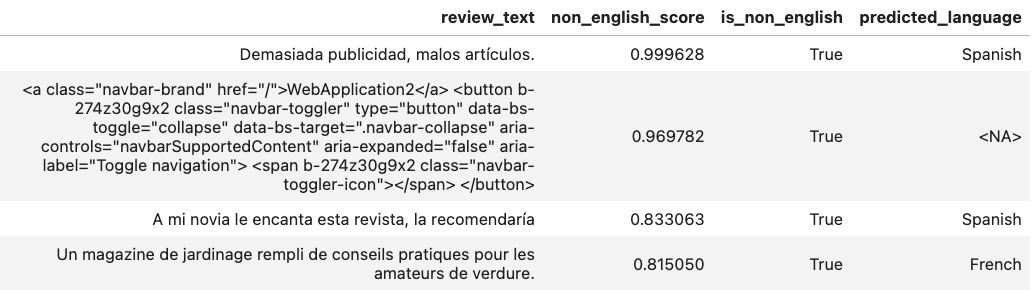

When browsing content that is predominantly in English (such as on Amazon), users may be confused by reviews/comments in foreign languages. If undiagnosed, such language inconsistency can also hamper data analysis efforts. The user experience is particularly harmed in many content catalogs where description fields that are supposed to contain informative language are contaminated by unreadable non-language strings (such as HTML/XML tags, identifiers, random characters). Thankfully, Cleanlab Studio can also automatically flag any text that does not resemble the English language. Let’s look at the examples detected in our Amazon Reviews dataset:

The flagged reviews are mostly written in foreign languages such as Spanish or French, and one contains nonsensical HTML tags. Each text example in the dataset is scored based on how little it resembles readable English language. With this shortlist of non-English text readily identified, you can focus your efforts on how to handle this content to improve your users’ experience. For instance, foreign language text could be relegated to a separate section, while nonsensical text (such as the HTML tags) might be hidden or deprioritized.
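One possible way to act on that shortlist is sketched below: text that is mostly markup gets hidden, while everything else already flagged as non-English is routed to a separate foreign-language section. The tag-ratio heuristic, the function name route_non_english, the 0.5 cutoff, and the sample strings are all hypothetical choices of ours; Cleanlab Studio does not provide this routing step, and it assumes the input has already been flagged as non-English.

```python
import re

# Hypothetical heuristic: treat text as markup residue when tag-like
# substrings (e.g. "<div>") account for most of its characters.
TAG_PATTERN = re.compile(r"<[^<>]+>")


def route_non_english(text, markup_ratio=0.5):
    """Return 'hide' for empty or markup-heavy text, else 'foreign_language_section'."""
    stripped = text.strip()
    if not stripped:
        return "hide"
    tag_chars = sum(len(tag) for tag in TAG_PATTERN.findall(stripped))
    if tag_chars / len(stripped) >= markup_ratio:
        return "hide"
    return "foreign_language_section"


# Illustrative inputs, not taken from the Amazon Reviews dataset.
print(route_non_english("<div><br/></div>"))
print(route_non_english("Una revista excelente, muy recomendable."))
```

A production system would likely pair this with a proper language identifier so that foreign-language reviews land in the right locale.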

Language Formality and Properness

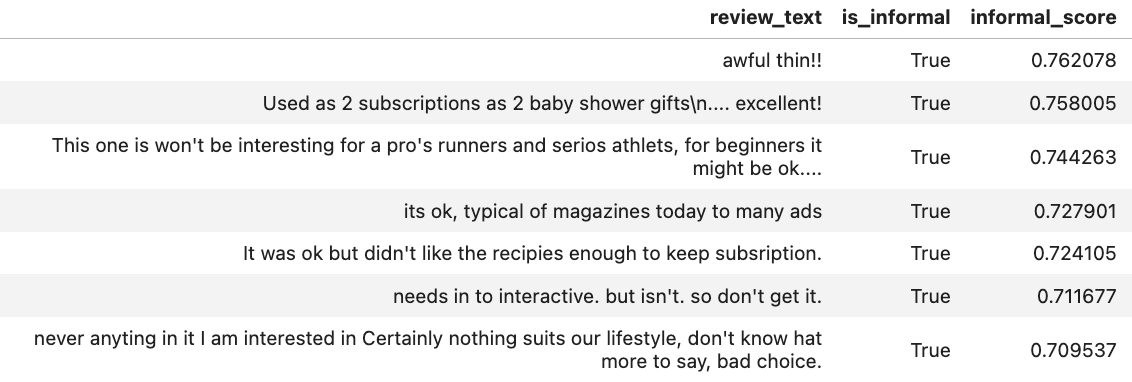

User-generated text content frequently features slang, casual language, and grammar/spelling mistakes. While it may resemble English and be semantically meaningful, such language may be less desirable in certain applications (for instance, if curating a training set for Generative AI). Luckily, Cleanlab Studio can automatically detect and rank how formal any text is. Here we show the most informal text that Cleanlab Studio has identified in our Amazon Reviews dataset:

We see how some of the reviews above can be tough to comprehend due to the poor grammar or phrasing of the text. Unlike many formality scoring algorithms from the NLP research literature, Cleanlab Studio’s scores naturally consider the degree of spelling/grammar mistakes, in addition to the types of words/syntax used. This helps you better curate documents/content in a unified way that considers all possible shortcomings in the writing style.

How to Automatically Get These Results from Cleanlab Studio

Below we show how to load any text dataset and start a project to analyze our text data in less than a minute.

Once complete, Cleanlab Studio’s automated audit provides two types of metadata for each issue type:

- A True/False flag which indicates if the given text contains the specified issue.

- A numeric score between 0 and 1 which indicates the severity of the specified issue, where 1 indicates the most severe case of an issue.

For instance, a text excerpt containing foul language would likely have a True is_toxic flag and a high toxic_score, as seen in the results above.
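To illustrate how the flag and score could be combined downstream, here is a minimal sketch that builds a review queue from rows carrying both kinds of metadata. Only is_toxic and toxic_score are named in this article; the other column names (is_PII, PII_score, and so on), the helper functions, and the example rows are assumptions for illustration and may not match the columns Cleanlab Studio actually exports.

```python
# Issue names other than "toxic" are assumed for illustration.
ISSUES = ["toxic", "PII", "non_english", "informal"]


def summarize(row, issues=ISSUES):
    """Collect the flagged issues for one row and the highest score among them."""
    flagged = [name for name in issues if row.get(f"is_{name}", False)]
    worst = max((row[f"{name}_score"] for name in flagged), default=0.0)
    return {"text": row["text"], "issues": flagged, "max_score": worst}


def moderation_queue(rows):
    """Keep rows with at least one flagged issue, most severe first."""
    summaries = [summarize(row) for row in rows]
    flagged = [item for item in summaries if item["issues"]]
    return sorted(flagged, key=lambda item: item["max_score"], reverse=True)


# Placeholder rows, not real Cleanlab Studio output.
rows = [
    {"text": "placeholder A", "is_toxic": True, "toxic_score": 0.93,
     "is_PII": False, "PII_score": 0.0},
    {"text": "placeholder B", "is_toxic": False, "toxic_score": 0.12,
     "is_PII": True, "PII_score": 1.0},
    {"text": "placeholder C", "is_toxic": False, "toxic_score": 0.05,
     "is_PII": False, "PII_score": 0.0},
]

for item in moderation_queue(rows):
    print(item["text"], item["issues"], item["max_score"])
```

Sorting by the maximum score treats scores from different issue types as comparable, which is a simplification; you may prefer a separate queue per issue type.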

Conclusion

We can leverage Cleanlab Studio as a comprehensive solution to perform content moderation and curation on large amounts of text data (in a no-code manner). By simplifying the identification process of problematic content on your platform, you can instead focus on rectifying undesirable content and behavior. This ultimately saves you time, while simultaneously enhancing the safety and experience of your users/customers.

Resources

- Automatically find and fix issues in your text, image, and tabular datasets with Cleanlab Studio.

- Learn also how to programmatically auto-detect these issues and many others (mislabeling, outliers, near duplicates, drift, …) in any text dataset via Cleanlab Studio’s Python API.

- Follow us on LinkedIn or Twitter to stay up-to-date on the best methods to ensure data and content quality.