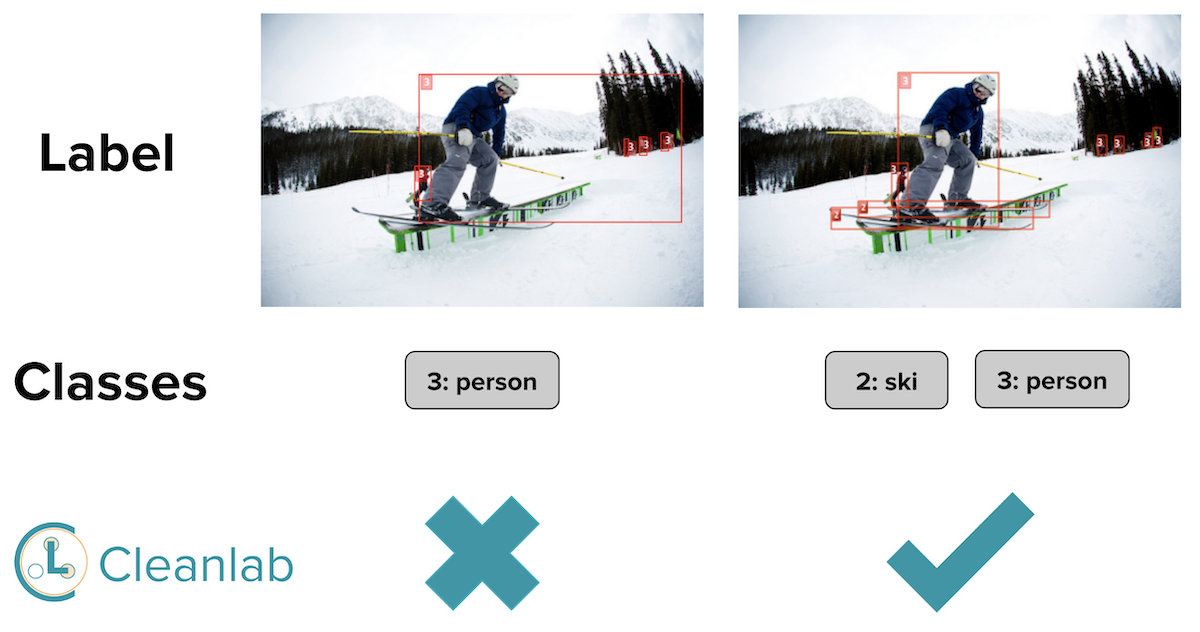

Introducing Cleanlab Object Detection: a novel algorithm to detect annotation errors and assess the quality of labels in any object detection dataset. We’ve open-sourced this in the cleanlab library. When applied to the famous COCO 2017 dataset, cleanlab automatically flags images like these shown below, whose original labels (red bounding boxes) are clearly incorrect.

When drawing bounding boxes, annotators of real-world object detection datasets make many types of mistakes including:

- overlooking objects that should’ve been annotated with a box (like the fire hydrant above, which belongs to one of the COCO 2017 classes),

- sloppily drawing boxes in bad locations (like the broccoli image above, in which the bounding box does not include the broccoli head),

- mistakenly swapping a box’s class label for an incorrect class (like the bathroom image above, in which a bounding box was correctly drawn around the sink but given the wrong class label).

To automatically catch such diverse errors, our algorithm uses any existing trained object detection model to score the label quality of each image. Mislabeled images can then be prioritized for label review/correction, preventing such data errors from affecting models in high-stakes applications like autonomous vehicles and security.

How Cleanlab Object Detection works

We call our new algorithm ObjectLab for short. Full mathematical details and benchmarks of its effectiveness are presented in our paper:

ObjectLab: Automated Diagnosis of Mislabeled Images in Object Detection Data.

Here we provide some basic intuition. Besides the original data annotations (bounding boxes around objects in each image and associated class labels), the only input ObjectLab requires is predictions of these boxes/classes from any standard object detection model (you can use any model already trained on your dataset). A key question ObjectLab answers is: when to trust the model predictions over the original data annotations? This is addressed by considering the estimated confidence of each prediction (e.g. class probability), how accurate the model is overall, and whether the deviations between a prediction and original label match specific patterns of mistakes made by data annotators of real datasets. In particular, ObjectLab uses the deviation between prediction and label to compute three scores for each image which reflect the likelihood of each of the three common types of annotation errors listed above (overlooked boxes, badly located boxes, and swapped class labels). The overall label quality score for the image is a simple geometric mean of these 3 error-subtype scores.
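The final combination step is simple enough to write out. Below is a minimal Python sketch, assuming the three per-image subtype scores have already been computed and each lies in [0, 1], with lower values meaning a more likely annotation error. The function name `overall_label_quality` is hypothetical and not part of cleanlab’s API, and the sketch does not reproduce how ObjectLab computes the subtype scores themselves.

```python
import math


def overall_label_quality(overlooked: float, badloc: float, swap: float) -> float:
    """Hypothetical helper: combine three error-subtype scores for one image
    (each in [0, 1], lower = more likely mislabeled) via a geometric mean."""
    subtype_scores = (overlooked, badloc, swap)
    if any(not 0.0 <= s <= 1.0 for s in subtype_scores):
        raise ValueError("subtype scores must lie in [0, 1]")
    # Geometric mean computed as exp(mean(log s)); a tiny epsilon guards log(0).
    eps = 1e-12
    return math.exp(sum(math.log(s + eps) for s in subtype_scores) / 3)


# One strong error signal drags the overall score down even if the others look clean.
print(round(overall_label_quality(1.0, 1.0, 0.125), 3))  # 0.5
print(round(overall_label_quality(0.9, 0.9, 0.9), 3))    # 0.9
```

Because it is a geometric mean rather than an arithmetic one, a single very low subtype score pulls the image’s overall score down sharply, which is the desired behavior when one kind of error is strongly indicated.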

For instance, if the model confidently predicts a bounding box where there is none annotated nearby and the model overall tends to accurately identify boxes of this predicted class, this provides strong evidence that annotators overlooked a box that should’ve been drawn for this image. Such errors may be critical to catch – models deployed in self-driving cars should not be trained on images depicting pedestrians that were overlooked by annotators.
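To make this intuition concrete, here is a deliberately simplified yes/no rule in Python. It is a hypothetical heuristic for illustration only, not ObjectLab’s scoring formula (ObjectLab computes continuous scores). The thresholds and the `class_precision` dictionary, standing in for how accurate the model tends to be on each class, are assumptions.

```python
from typing import Dict, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def looks_overlooked(
    pred_box: Box,
    pred_class: int,
    pred_prob: float,
    label_boxes: Sequence[Box],
    label_classes: Sequence[int],
    class_precision: Dict[int, float],
    prob_threshold: float = 0.9,       # hypothetical threshold
    precision_threshold: float = 0.8,  # hypothetical threshold
    iou_threshold: float = 0.1,        # hypothetical threshold
) -> bool:
    # 1) The model must be confident in this particular prediction...
    if pred_prob < prob_threshold:
        return False
    # 2) ...and usually right about boxes of this class overall.
    if class_precision.get(pred_class, 0.0) < precision_threshold:
        return False
    # 3) No annotated box of the same class may meaningfully overlap the prediction.
    best = max(
        (iou(pred_box, box) for box, cls in zip(label_boxes, label_classes) if cls == pred_class),
        default=0.0,
    )
    return best < iou_threshold


label_boxes = [(10, 10, 50, 50)]
label_classes = [0]
class_precision = {0: 0.92, 1: 0.88}  # hypothetical per-class precision of the model
# Confident class-1 prediction with no annotated class-1 box nearby: flagged.
print(looks_overlooked((100, 100, 140, 160), 1, 0.97, label_boxes, label_classes, class_precision))  # True
# Confident class-0 prediction sitting on the annotated class-0 box: not flagged.
print(looks_overlooked((12, 11, 50, 49), 0, 0.95, label_boxes, label_classes, class_precision))  # False
```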

Detected annotation issues in COCO 2017

When we sort the COCO 2017 images by their overlooked-error subtype score (computed using a standard Detectron2-X101 model), here are some of the top images (original annotation in red on left) and their corresponding model predictions (in blue on right).

When we similarly sort the COCO 2017 images by their badly-located-error subtype score, here are some of the top images (original annotation in red) and their corresponding predictions from the same model (in blue).

In each image on the left above, some annotated bounding boxes (the dining table, person, and train) are clearly badly located. Note that the model predictions (on the right) are not perfect, yet Cleanlab Object Detection still flagged these images as having badly located bounding boxes.

When we similarly sort the COCO 2017 images by their swapped-class-label-error subtype score, here are some of the top images (original annotation in red) and their corresponding predictions from the same model (in blue).

In the first image, the doughnut on the left was correctly localized but incorrectly annotated as a cake.

Inspecting other images with the worst Cleanlab label quality scores in the COCO dataset reveals fundamental inconsistencies in the data annotations shown below.

Here are animals annotated as both cow and sheep.

Printed photos of people are annotated as person in some images but not others.

Sofas are annotated as chairs in some images (e.g. the one on the left) but not in others (e.g. the one on the right below).

These are just a few of the many inconsistencies Cleanlab Object Detection reveals in the COCO 2017 annotations. Other detected inconsistencies include: miscellaneous street signs annotated as stop signs, cows as horses, helicopters as airplanes, as well as confusion related to food vs. the dining table it’s placed on and whether objects seen in reflections should be localized. Such inconsistencies indicate certain annotation instructions may require further clarification.

Overall, Cleanlab Object Detection detected hundreds of images we verified to have annotation errors in COCO 2017. By using such algorithmic data validation, you can avoid training models with erroneous data and save your annotation Quality Assurance team significant time by narrowing down the subset of data for review to only the likely-mislabeled examples.

Cleanlab Object Detection outperforms other label quality scoring methods

Our paper benchmarks our ObjectLab algorithm against other approaches to score label quality in object detection data, including: CLOD, simply using the mAP score between prediction and original label (as in typical error analysis), and a tile-estimates approach that partitions each image into a grid of tiles and applies popular techniques for label error detection in classification data to the tiles. We evaluate these methods, applied with two standard object detection models (Detectron2-X101 and Faster-RCNN), on two datasets where we know a priori exactly which images are mislabeled: COCO-bench (a subset of COCO 2017 with labels reviewed by multiple annotators), and SYNTHIA (a synthetic graphics engine dataset in which we introduced diverse types of mislabeling).

The barplot below evaluates each scoring method based on how well it ranks mislabeled images above those which are properly annotated, quantified via standard information retrieval metrics: Average Precision, and Precision @ K for K = 100 or equal to the true number of mislabeled images (i.e. errors) in the dataset.
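For readers less familiar with these retrieval metrics, here is a minimal sketch of how they can be computed from per-image label quality scores and a ground-truth mask of which images are truly mislabeled. It assumes lower scores mean more likely mislabeled (so images are ranked in ascending score order) and uses hypothetical function names; it is not the paper’s evaluation code.

```python
from typing import List, Sequence


def rank_by_score(scores: Sequence[float]) -> List[int]:
    # Lower label quality score = more likely mislabeled, so those come first.
    return sorted(range(len(scores)), key=lambda i: scores[i])


def precision_at_k(scores: Sequence[float], is_mislabeled: Sequence[bool], k: int) -> float:
    # Fraction of the k worst-scored images that are truly mislabeled.
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = rank_by_score(scores)[:k]
    return sum(is_mislabeled[i] for i in top_k) / k


def average_precision(scores: Sequence[float], is_mislabeled: Sequence[bool]) -> float:
    # Mean of the precision values at each rank where a truly mislabeled image appears.
    hits, total = 0, 0.0
    for rank, i in enumerate(rank_by_score(scores), start=1):
        if is_mislabeled[i]:
            hits += 1
            total += hits / rank
    n_errors = sum(is_mislabeled)
    return total / n_errors if n_errors else 0.0


scores = [0.9, 0.1, 0.8, 0.3, 0.2]
is_mislabeled = [False, True, False, True, False]
k = sum(is_mislabeled)  # K equal to the true number of mislabeled images
print(precision_at_k(scores, is_mislabeled, k))  # 0.5
print(round(average_precision(scores, is_mislabeled), 3))  # 0.833
```

A perfect scoring method ranks every mislabeled image ahead of every correctly labeled one, giving an Average Precision of 1.0.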

Across both datasets and object detection models, ObjectLab detects mislabeled images with better precision/recall than alternative label quality scores.

Consider the following two COCO images whose original annotation is shown on the left (in red) and prediction from our Detectron2-X101 model is shown on the right (in blue). For the top image, the model incorrectly predicts the hairbrush is a zebra with moderate confidence. For the bottom image, the model fails to localize a sports ball.

Neither image is mislabeled. However, both images receive extremely bad mAP scores because the model predictions differ so much from the original annotation, so they become false positives when mAP is used to detect annotation errors. In contrast, ObjectLab explicitly accounts for model confidence and the specific forms of errors expected in practice. Thus even with these imperfect model predictions, ObjectLab does not falsely flag these images and assigns both of them benign label quality scores.

Cleanlab Object Detection is easy to use

After training any standard object detection model on your dataset, use it to produce predictions for all images of interest (i.e. predicted bounding boxes, their class labels, and the model’s confidence in each). Ideally these predictions should be out-of-sample, e.g. produced via cross-validation.
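One way to get out-of-sample predictions is K-fold cross-validation over images, sketched below in Python. `train_fn` and `predict_fn` are hypothetical placeholders for your own detector’s training and inference code; the toy usage swaps in trivial stand-ins so the sketch runs on its own.

```python
from typing import Callable, Dict, List, Sequence


def out_of_sample_predictions(
    image_ids: Sequence[str],
    train_fn: Callable[[List[str]], object],    # hypothetical: fits a detector on these images
    predict_fn: Callable[[object, str], object],  # hypothetical: runs that detector on one image
    n_folds: int = 5,
) -> Dict[str, object]:
    """K-fold cross-validation: each image is predicted by a model that never trained on it."""
    ids = list(image_ids)
    folds = [ids[f::n_folds] for f in range(n_folds)]  # round-robin split into folds
    predictions: Dict[str, object] = {}
    for f, held_out in enumerate(folds):
        train_ids = [i for g, fold in enumerate(folds) if g != f for i in fold]
        model = train_fn(train_ids)
        for image_id in held_out:
            predictions[image_id] = predict_fn(model, image_id)
    return predictions


# Toy stand-ins: the "model" is just the set of training images, and the
# "prediction" records whether the image was seen during training.
ids = [f"img_{n}" for n in range(10)]
preds = out_of_sample_predictions(ids, lambda train: set(train), lambda model, img: img in model, n_folds=3)
print(len(preds), any(preds.values()))  # 10 False
```

Training K models costs more compute than training one, but it keeps the model from simply memorizing (and thereby endorsing) the very annotations it is meant to audit.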

Based on these predictions and the original dataset labels (i.e. the annotated bounding boxes and class labels for each image), cleanlab can score the label quality of each image via a single line of code:

You can also directly estimate which images are mislabeled with one more line of code, using `cleanlab.object_detection.filter.find_label_issues()`.

Resources to learn more

- Quickstart Tutorial - Start using Cleanlab in 5 min on your own object detection data

- Example Object Detection Model Training Notebook - Fit a model on your own dataset

- GitHub Repo - Check out how the algorithm works for yourself 🔍

- Cleanlab Studio - A no-code solution to automatically find and fix such issues in image datasets (as well as in text/tabular data)

- Slack Community - Join our community of scientists/engineers to see how others are handling noisy object detection data and discuss ideas about data-centric AI