To generate useful image datasets, we explore the art of prompt engineering, using a real-world photograph dataset as our compass. Our experiments with Stable Diffusion show the complexity of generating diverse and convincing images that mimic real-world scenarios. Because it can be hard to manually determine when certain prompt modifications improve our synthetic dataset or make it worse, we introduce a quantitative framework to score the quality of any synthetic dataset. This article demonstrates step by step how these quality scores can guide prompt engineering efforts to generate better synthetic datasets. The same systematic evaluation can also help you optimize other aspects of synthetic data generators.

Evaluating the Quality of Synthetic Datasets

After generating synthetic data from any prompt, you’ll want to know the strengths/weaknesses of your synthetic dataset. While you can get an idea by simply looking through the generated samples one by one, this is laborious and not systematic. Cleanlab Studio offers an automated way to quantitatively assess the quality of your synthetic dataset. When you provide both real data and synthetic data that is supposed to augment it, this tool computes four scores that contrast your synthetic vs. real data along different aspects:

- Unrealistic: This score measures how distinguishable the synthetic data is from real data. High values indicate there are many unrealistic-looking synthetic samples which are obviously fake. Mathematically, this score is computed as 1 minus the mean Cleanlab label issue score of all synthetic images in a joint dataset with binary labels “real” or “synthetic”.

- Unrepresentative: This score measures how poorly the real data is represented among the synthetic data samples. High values indicate there may exist tails of the real data distribution (or rare events) that the distribution of synthetic samples fails to capture. Mathematically, this score is computed as 1 minus the mean Cleanlab label issue score of all real images in a joint dataset with binary labels “real” or “synthetic”.

- Unvaried: This score measures how little variety there is among synthetic samples. High values indicate an overly repetitive synthetic data generator that produced many samples which all look similar to one another. Mathematically, this score is computed as the proportion of the synthetic samples that are near-duplicates of other synthetic samples.

- Unoriginal: This score measures the lack of novelty in the synthetic data. High values indicate many synthetic samples look like copies of things found in the real dataset, i.e. the synthetic data generator may be memorizing the real data too closely and failing to generalize. Mathematically, this score is computed as the proportion of the synthetic samples that are near-duplicates of examples from the real dataset.

Specific examples of these scores and the shortcomings they reveal are presented further down in this article. You can compute these scores for your own synthetic image/text/tabular data (and understand their mathematical details) by following this tutorial. Computable via a single Python method, these four quantitative scores help you rigorously compare different synthetic data generators (i.e. prompt templates), especially when the difference is not immediately clear from manual inspection of samples.
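To make the four definitions above more concrete, here is a minimal sketch of how such scores could be computed from precomputed inputs. It is not Cleanlab Studio's implementation. It assumes you already have an embedding vector per image and out-of-sample probabilities from some real-vs-synthetic classifier, that a label issue score equals 1 minus the predicted probability of the given label, and that "near-duplicate" means cosine similarity above a hypothetical threshold of 0.95. All function names are illustrative.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two non-zero vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def near_duplicate_fraction(queries, references, threshold=0.95, exclude_self=False):
    """Fraction of `queries` that have at least one near-duplicate in `references`.

    Set exclude_self=True when `queries` and `references` are the same list,
    so that an item is never counted as a duplicate of itself.
    """
    if not queries:
        return 0.0
    flagged = 0
    for i, q in enumerate(queries):
        for j, r in enumerate(references):
            if exclude_self and i == j:
                continue
            if cosine_similarity(q, r) >= threshold:
                flagged += 1
                break
    return flagged / len(queries)


def one_minus_mean_issue_score(prob_of_given_label):
    """1 minus the mean label issue score.

    Assumption: issue score = 1 - P(given label), where the probabilities are
    out-of-sample predictions from a real-vs-synthetic classifier.
    """
    if not prob_of_given_label:
        raise ValueError("need at least one probability")
    issue_scores = [1.0 - p for p in prob_of_given_label]
    return 1.0 - sum(issue_scores) / len(issue_scores)


def synthetic_quality_scores(real_emb, synth_emb, p_real_given_real,
                             p_synth_given_synth, threshold=0.95):
    """Return the four scores (higher is worse) as a dict."""
    return {
        "unrepresentative": one_minus_mean_issue_score(p_real_given_real),
        "unrealistic": one_minus_mean_issue_score(p_synth_given_synth),
        "unvaried": near_duplicate_fraction(synth_emb, synth_emb, threshold,
                                            exclude_self=True),
        "unoriginal": near_duplicate_fraction(synth_emb, real_emb, threshold),
    }


# Toy inputs (made up purely for illustration, not taken from the Snacks data).
real_emb = [[1.0, 0.0], [0.0, 1.0]]
synth_emb = [[1.0, 1.0], [1.0, 1.0], [-1.0, 1.0], [1.0, -1.0]]
scores = synthetic_quality_scores(
    real_emb, synth_emb,
    p_real_given_real=[0.9, 1.0],
    p_synth_given_synth=[1.0, 0.8, 0.9, 0.9],
)
print(scores)
```

In this toy example the first two synthetic vectors are identical, so half of the synthetic samples count as unvaried, while none is close enough to a real vector to count as unoriginal. A classifier that easily separates real from synthetic pushes both unrealistic and unrepresentative toward 1.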

Snacks Dataset

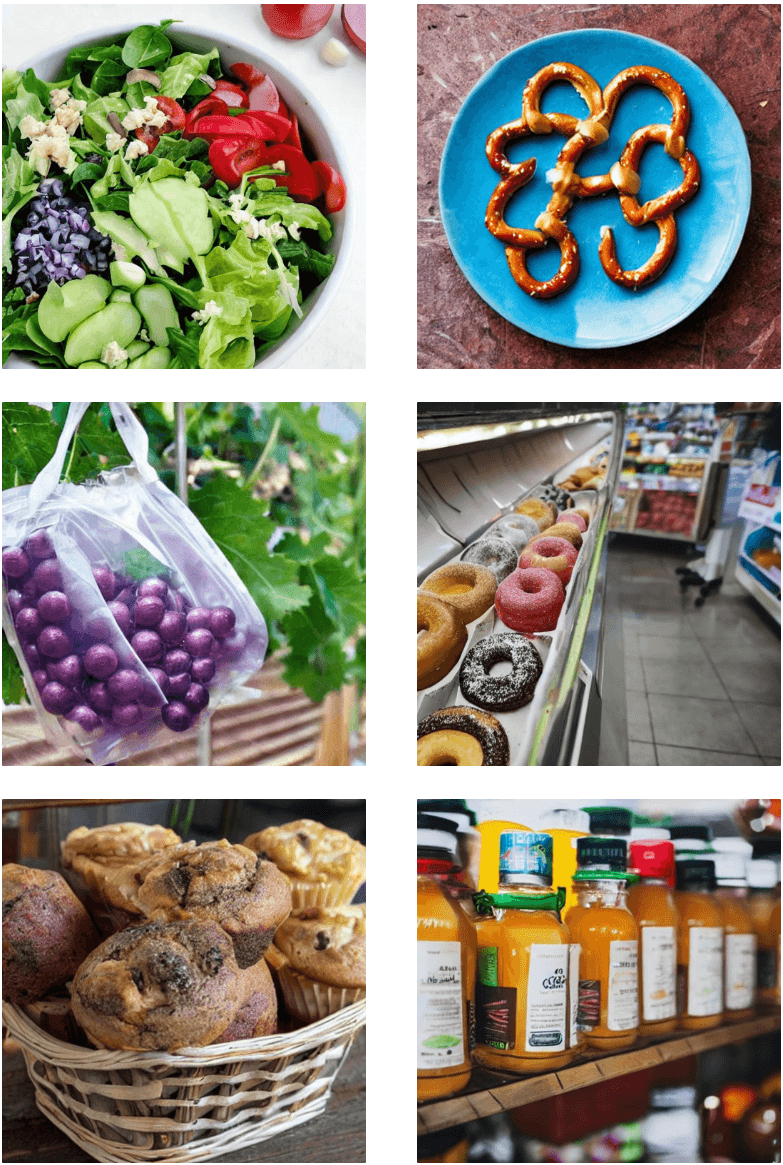

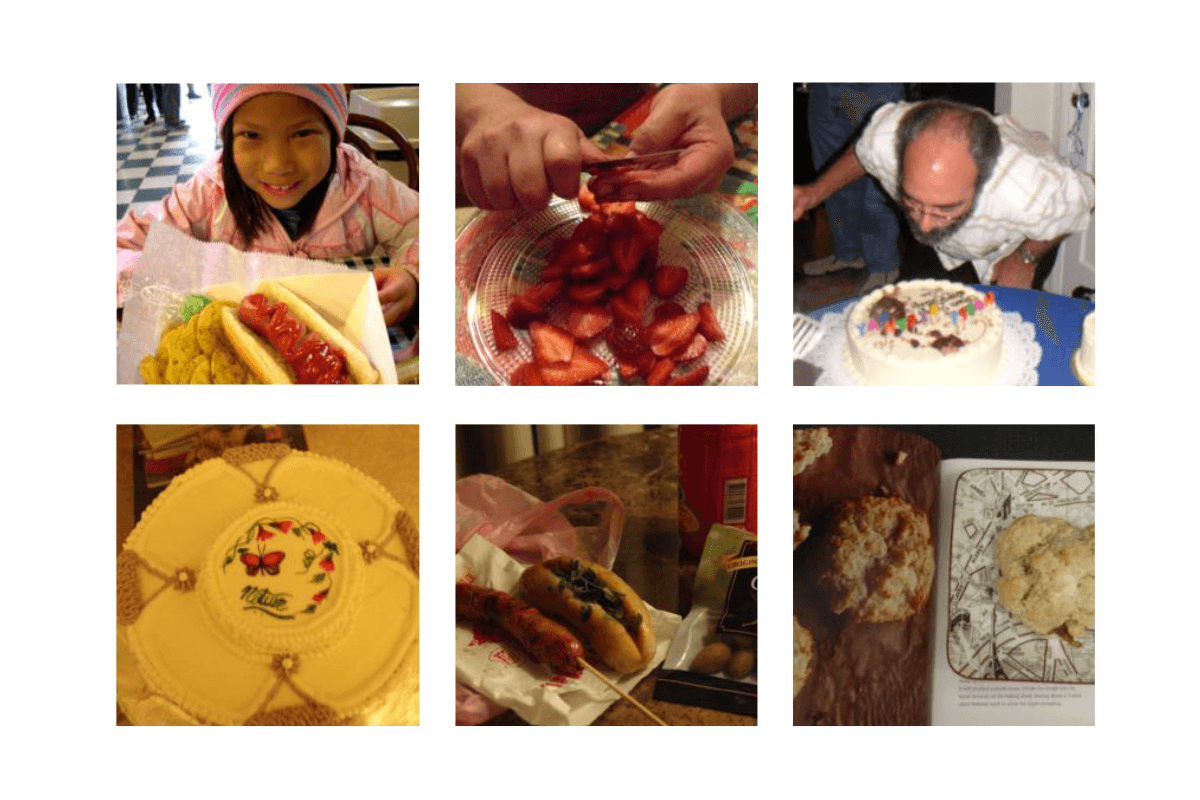

For this article, our efforts in synthetic image generation are anchored on the Snacks dataset. This dataset comprises 4838 actual photographs of 20 different classes of food items.

This dataset depicts a broad range of snack types (ranging from fruits to beverages) and has rich depth (each class of food is depicted in several ways with varied appearances/contexts). Our goal here is to generate a synthetic dataset that can be used either in place of the real Snacks dataset or to augment the original data with additional synthetic images. As we will see, it is nontrivial to properly capture the richness of these images (even in this limited Snacks domain).

Generating Images from Prompts

Stable Diffusion is a stochastic text-to-image model that can generate different images sampled from the same text prompt. Engineering a good prompt for Stable Diffusion to generate a synthetic image dataset requires considering several characteristics of real images we are trying to imitate.

Format

The first thing to think about is the desired format of the output. This involves both the medium and the style of the image, which influences the overall look and feel of the generated images. Given that all images in our Snacks dataset are photographs of snacks, it’s important to keep this photographic style in our generated images. We can do this by incorporating a directive such as “A photo of …” in our prompts.

We start our experiment by generating a large batch of 992 images using a basic prompt. For our dataset of snack images, it’s as simple as “A photo of a snack”.

This prompt is straightforward and yields visually coherent images. However, the images tend to be generic and often lack clear definition of the snack types.

To quantitatively evaluate our synthetic image data generated from this prompt (compared against images from the real Snacks dataset), we use a concise method to compute synthetic dataset issue scores available in Cleanlab Studio’s Python API.

The resulting scores (higher values are worse) for the synthetic image dataset generated from the above prompt are:

| Experiment | Unrepresentative | Unrealistic | Unvaried | Unoriginal |
|---|---|---|---|---|
| Baseline prompt | 0.98223 | 0.95918 | 0.0 | 0.0 |

The scores reveal insights about the synthetic dataset generated by our prompt:

- A high unrealistic score indicates that there’s a noticeable difference between the synthetic and real images (see below).

- The high unrepresentative score suggests that the synthetic images haven’t captured the variety and nuances of the Snacks dataset.

- The zero unvaried and unoriginal scores indicate that our generated images aren’t repetitive and appear sufficiently novel compared against the real data. This makes sense given Stable Diffusion is highly nondeterministic and has not been explicitly fine-tuned on our Snacks dataset here.

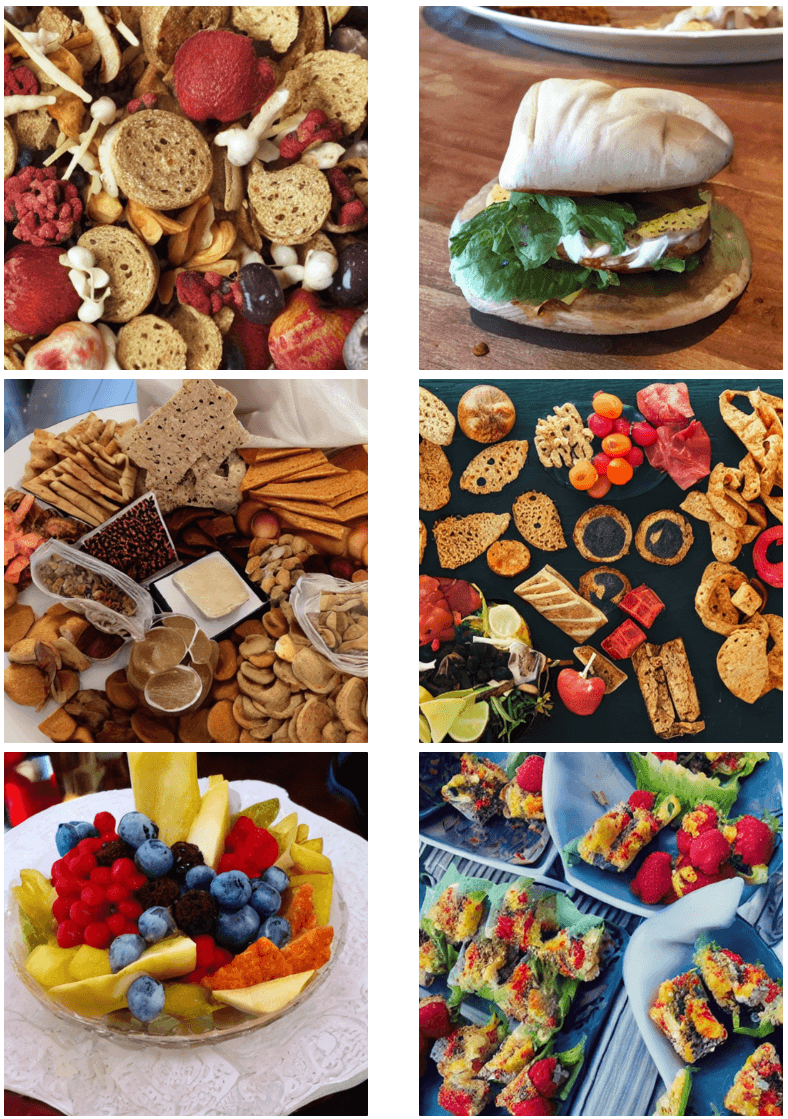

Overall the images generated from this single prompt show little variation and the type of snack depicted is often unclear. It’s worth noting that the image generation process with Stable Diffusion doesn’t provide direct information about the types of snacks it aimed to generate. A rich and representative synthetic dataset is unlikely to be obtained from a single prompt template like this.

Subject

The subject of the image is the next aspect to consider in our prompt-engineering strategy. We can make our prompt more specific by incorporating information about the desired class. This gives us more control over the content of the images we generate, aiming to align the synthetic dataset with the distribution of classes in the original dataset.

For our Snacks dataset, the subject directly relates to the particular kind of snack shown in each image.

By including the subject this way, each prompt becomes more targeted. Instead of the generic “A photo of a snack”, we now use definitive prompts like “A photo of a banana” or “A photo of an apple”. By generating 96 images for each class with these class-specific prompts, we aim to create images that more accurately represent the respective classes found in the Snacks dataset. For this method to work well, the class names should be clear, specific, and consistent. If they’re ambiguous or vague, the images generated might not match the intended subject accurately.
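As a rough illustration of the class-specific prompting described above, the sketch below builds one generation job per image, each carrying its class label. The function names, the `seed` field, and the simple vowel rule for choosing “a” or “an” are assumptions made for this example, and the class list is only a small subset of the 20 Snacks classes. The resulting jobs could be passed to whichever Stable Diffusion interface you use.

```python
def indefinite_article(noun):
    """Pick 'a' or 'an' with a simple vowel check (a heuristic, not full grammar)."""
    return "an" if noun[0].lower() in "aeiou" else "a"


def class_prompt(class_name):
    """Build a class-specific prompt such as 'A photo of a banana'."""
    return f"A photo of {indefinite_article(class_name)} {class_name}"


def build_class_jobs(class_names, images_per_class=96):
    """Return one job dict per image to generate.

    Each job records the class label, so every synthetic image has a known
    label. The seed is a hypothetical way to get distinct samples per prompt.
    """
    return [
        {"label": name, "prompt": class_prompt(name), "seed": seed}
        for name in class_names
        for seed in range(images_per_class)
    ]


# Illustrative subset of class names; the real dataset has 20 classes.
class_names = ["apple", "banana", "ice cream", "juice"]
jobs = build_class_jobs(class_names)
print(len(jobs), jobs[0]["prompt"])
```

Because each job stores its label alongside the prompt, the synthetic images come with class labels, unlike images generated from the single generic prompt.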

To evaluate synthetic data generated from our class-based prompting, we again employ the automated quality scoring method.

The synthetic dataset produced from our new experiment yields the following scores:

| Experiment | Unrepresentative | Unrealistic | Unvaried | Unoriginal |
|---|---|---|---|---|
| Subject-focused prompt | 0.99674 | 0.95775 | 0.0261 | 0.0 |

- The marginal decrease in the unrealistic score (from 0.95918 to 0.95775) suggests that some synthetic images, driven by class-focused prompts, now more closely mirror real images of the same type, although the overall gain in realism is small.

- Despite the prompts focusing on classes, the increase in the unrepresentative score indicates that the collection of synthetic images resulting from the prompt “A photo of a …” does not fully capture the broader variety in the Snacks dataset.

- An increase in the unvaried score suggests that a few synthetic images may be nearly identical versions of each other (which is not unexpected since our new prompt is less open-ended). This is a danger of making prompts more specific: the resulting synthetic data will be less diverse!

- The unoriginal score remains at zero, indicating none of our synthetic samples seems directly copied from the real data (expected since we never trained our Stable Diffusion generator on the real data in this article).

Context

Another thing we can use to improve our prompts is context. It refers to the background or surrounding situation in which the subject is placed. With Stable Diffusion, the model largely determines the background or setting of the generated image. But by adding a ‘context rule’ to our previous class prompts, we can try to influence this part of the image.

Our augmented prompt combines each class with a context rule, for example a snack “on a plate” or “in a basket”.

In the case of our Snacks dataset, we made heuristic selections of conceivable scenarios or contexts where snacks might typically appear. For practical applications, it’s advisable to select contexts methodically, grounded in an analysis of the existing data (e.g. relative frequency of different backgrounds).
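The sketch below shows one way class + context prompts could be assembled, and how an image budget could be split across contexts in proportion to how often each context appears in the real data. The template “A photo of a {class} {context}”, the function names, the option to skip implausible pairings, and the context counts are all assumptions for illustration. The exact template used in the experiments is not shown here.

```python
from itertools import product


def build_context_prompts(class_names, contexts, skip=frozenset()):
    """Combine every class with every context, skipping listed (class, context) pairs."""
    prompts = []
    for name, context in product(class_names, contexts):
        if (name, context) in skip:
            continue  # pairing judged implausible, e.g. juice on a plate
        article = "an" if name[0].lower() in "aeiou" else "a"
        prompts.append((name, f"A photo of {article} {name} {context}"))
    return prompts


def allocate_by_frequency(context_counts, total_images):
    """Split `total_images` across contexts in proportion to observed counts.

    Uses the largest-remainder method so the allocation sums to total_images.
    """
    grand_total = sum(context_counts.values())
    exact = {c: total_images * n / grand_total for c, n in context_counts.items()}
    alloc = {c: int(v) for c, v in exact.items()}
    leftover = total_images - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda c: exact[c] - alloc[c], reverse=True)
    for c in by_remainder[:leftover]:
        alloc[c] += 1
    return alloc


# Hypothetical inputs: two classes, two contexts, and made-up context counts.
prompts = build_context_prompts(
    ["juice", "ice cream"],
    ["on a plate", "in a basket"],
    skip={("juice", "on a plate"), ("ice cream", "in a basket")},
)
allocation = allocate_by_frequency({"on a plate": 3, "in a basket": 1}, total_images=8)
print(prompts)
print(allocation)
```

Skipping implausible pairings is a design choice rather than something the experiments here did. It trades a little coverage for fewer nonsensical images.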

We generated a synthetic dataset of 4800 images from different class + context combinations. To gauge the effectiveness of our context-enhanced prompts, we once again use Cleanlab’s automated quality scoring.

The scores for this iteration of our synthetic dataset are:

| Experiment | Unrepresentative | Unrealistic | Unvaried | Unoriginal |
|---|---|---|---|---|
| Contextualized prompt | 0.99931 | 0.99604 | 0.0117 | 0.0 |

Analyzing these results:

- A further increase in the unrepresentative score emphasizes that while context-driven prompts offer a new angle, it’s unreasonable to expect them to capture the full range of possible images on their own.

- The increase in the unrealistic score suggests that certain synthetic images, when bound by our context rules, appear less natural, making them more distinguishable from real images. Not every context rule will be suitable for every class; envisioning “juice on a plate” or “ice cream in a basket” might produce nonsensical images that are inconsistent with reality.

Stable Diffusion excels at image generation, but capturing the real world’s full diversity remains a challenge. Images from our baseline prompt were varied, but they come without class labels, which limits their use for supervised learning. Class prompts marginally improved realism but left many real images underrepresented. Surprisingly, specific context prompts exacerbated this underrepresentation and also made the images less realistic. Our takeaway? Class prompts are our best bet for richer synthetic datasets. To fill the remaining gaps, refining our context prompts is the way forward.

Across all prompts, the unrealistic and unrepresentative scores were high (above 0.95 in every experiment). Stable Diffusion tends to craft polished, idealized depictions of the subject in the prompt. This contrasts with the real dataset’s spontaneous and varied nature, which includes various lighting conditions, intricate backgrounds, and ambiguous contexts that we don’t really observe within the synthetic images.
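To compare the three experiments side by side, the scores reported in the tables above can be collected in one place. The sketch below computes the change in each score relative to the baseline and reports which experiment scored lowest (best) on each metric. The helper names are illustrative, and only the numbers come from the tables.

```python
# Scores copied from the three result tables (higher values are worse).
SCORES = {
    "Baseline prompt": {
        "unrepresentative": 0.98223, "unrealistic": 0.95918,
        "unvaried": 0.0, "unoriginal": 0.0,
    },
    "Subject-focused prompt": {
        "unrepresentative": 0.99674, "unrealistic": 0.95775,
        "unvaried": 0.0261, "unoriginal": 0.0,
    },
    "Contextualized prompt": {
        "unrepresentative": 0.99931, "unrealistic": 0.99604,
        "unvaried": 0.0117, "unoriginal": 0.0,
    },
}


def deltas_vs(baseline, scores):
    """Change in each score relative to `baseline` (negative means improvement)."""
    base = scores[baseline]
    return {
        name: {metric: round(value - base[metric], 5) for metric, value in vals.items()}
        for name, vals in scores.items()
        if name != baseline
    }


def best_per_metric(scores):
    """Experiment with the lowest score per metric; ties go to the earliest entry."""
    metrics = next(iter(scores.values())).keys()
    return {m: min(scores, key=lambda name: scores[name][m]) for m in metrics}


deltas = deltas_vs("Baseline prompt", SCORES)
best = best_per_metric(SCORES)
for name, change in deltas.items():
    print(name, change)
print(best)
```

Laid out this way, it is easy to see that the subject-focused prompt is the only change that lowered the unrealistic score, and that it did so by a very small margin.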

Can your prompts inspire a thousand unique images?

Prompt engineering is key to effective synthetic image generation. This article demonstrates one approach to generating a diverse and realistic synthetic image dataset using the Stable Diffusion model and the Snacks dataset. These strategies can be applied in many other settings as well. Devising effective prompts is a delicate balance: spelling out too much detail can lead to overly specific images, while remaining too vague can yield generic images that lack diversity.

The quantitative assessment of synthetic data quality from Cleanlab Studio aids significantly in this iterative process. By automating the evaluation phase, it enables you to focus more on refining your prompts and improving your synthetic images (rather than struggling to understand if you’re making progress or not).

Resources

- Cleanlab Studio: Use AI to systematically assess your own synthetic data.

- Tutorial Notebook: Learn how to compute synthetic data quality scores for any dataset.

- Blogpost with Text Data: See how to similarly assess synthetic data generated by LLMs.

- Slack Community: Discuss ways to use and evaluate synthetic data in this changing field.

- Advanced AI Solutions: Interested in building AI Assistants connected to your company’s data sources or exploring other Retrieval-Augmented Generation applications? Reach out to learn how Cleanlab can help.