For over half a decade, we’ve been expanding the range of ML tasks that cleanlab supports in response to user requests. Today we are proud to share that cleanlab directly supports improving the reliability/accuracy of datasets and models for all major ML tasks. We just released v2.5 of the package, which adds support for regression: yes, cleanlab now supports data with real-valued targets, not just class labels! (A nod to the dozens of users who have requested this feature over the years.) With v2.5, cleanlab is also now the only package to support label error detection for both object detection and image segmentation, enabling users to find issues in their datasets for every major ML task across most data types and modalities.

It’s been a long time coming, and we hope you have some fun with v2.5!

All features of cleanlab work with any dataset and any model. Here’s a summary of what cleanlab can do:

- Detect data issues (outliers, duplicates, label errors, etc.) w/ Datalab

- Train robust models w/ CleanLearning

- Out-of-the-box support for PyTorch, TensorFlow, Keras, JAX, Hugging Face, OpenAI, XGBoost, and any scikit-learn compatible model

- Infer consensus + annotator-quality for multi-annotator data w/ CrowdLab

- Suggest data to (re)label next (active learning) w/ ActiveLab
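Here is some intuition for multi-annotator data. The sketch below is a plain majority-vote baseline in pure Python, not CrowdLab's method. It computes a consensus label for each example and each annotator's rate of agreement with that consensus. The toy labels and the function names `majority_vote` and `annotator_agreement` are hypothetical.

```python
from collections import Counter

# Hypothetical toy data: one row per example, one column per annotator.
# None means that annotator did not label the example.
labels = [
    ["cat", "cat", "dog"],
    ["dog", "dog", None],
    ["cat", "dog", "dog"],
    ["cat", "cat", "cat"],
]

def majority_vote(rows):
    """Most common non-missing label per example (ties go to the label seen first)."""
    consensus = []
    for row in rows:
        counts = Counter(label for label in row if label is not None)
        consensus.append(counts.most_common(1)[0][0])
    return consensus

def annotator_agreement(rows, consensus):
    """Fraction of each annotator's given labels that match the consensus label."""
    scores = []
    for j in range(len(rows[0])):
        given = [(row[j], c) for row, c in zip(rows, consensus) if row[j] is not None]
        scores.append(sum(a == c for a, c in given) / len(given))
    return scores

consensus = majority_vote(labels)
print(consensus, annotator_agreement(labels, consensus))
```

Each annotator's own vote counts toward the consensus. As a result, this agreement rate is optimistic, especially when few annotators label an example. That is one reason to prefer a more principled estimator over naive vote counting.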

ML Tasks Supported

- Regression (NEW)

- Object detection (NEW)

- Image segmentation (NEW)

- Outlier detection

- Binary, multi-class, and multi-label classification

- Token classification

- Classification with data labeled by multiple annotators

- Active learning with multiple annotators

What’s New in v2.5

Regression

Although it’s brand new, Cleanlab’s regression functionality has already been used to successfully improve data quality in Kaggle competitions.

Specifically, a new regression module has been introduced for label error detection in regression datasets like the one below.
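The core intuition behind label error detection in regression can be sketched in pure Python in two steps:

1. Predict every target with a model that never saw it (out-of-fold predictions).
2. Flag targets whose residual is unusually large relative to a typical residual.

This is a simplified illustration, not cleanlab's actual algorithm or API. The 1-D linear model, the 5 folds, and the median-based threshold of 5 are all assumptions made for the example.

```python
import statistics

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    x_mean, y_mean = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx
    return y_mean - b * x_mean, b

def out_of_fold_predictions(xs, ys, k=5):
    """Predict each target with a line fit only on the other k - 1 folds."""
    preds = [0.0] * len(xs)
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        for i in range(fold, len(xs), k):
            preds[i] = a + b * xs[i]
    return preds

def flag_noisy_targets(xs, ys, k=5, threshold=5.0):
    """Indices whose absolute residual exceeds threshold x the median absolute residual."""
    preds = out_of_fold_predictions(xs, ys, k)
    residuals = [abs(y - p) for y, p in zip(ys, preds)]
    scale = statistics.median(residuals) or 1e-12  # guard against a zero median
    return [i for i, r in enumerate(residuals) if r / scale > threshold]
```

Consider `xs = list(range(20))` and `ys = [2 * x + 1 for x in xs]`, with one corrupted target `ys[7] += 30`. In that case, `flag_noisy_targets(xs, ys)` returns `[7]`. Out-of-fold predictions matter here because an in-sample fit would partially absorb the corrupted target and shrink its residual.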

Learn how to apply it to your own regression data within 5 minutes via the Find Noisy Labels in Regression Datasets quickstart tutorial. Here’s all the code needed to detect erroneous values in a regression dataset and fit a more robust regression model with the corrupted data automatically filtered out:

Object Detection

The new object_detection module can automatically detect annotation errors in object detection datasets. This includes images with: incorrect class labels annotated for bounding boxes, objects entirely overlooked by annotators, as well as poorly-drawn bounding boxes which do not optimally localize an object.
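The three error types above can be illustrated by comparing annotated boxes against confident model predictions using intersection-over-union (IoU). The pure-Python sketch below is a hypothetical rule-based heuristic, not cleanlab's `object_detection` algorithm. Boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the thresholds `min_conf`, `match_iou` and `overlap_iou` are arbitrary choices for illustration.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def audit_image(annotations, predictions, min_conf=0.8, match_iou=0.5, overlap_iou=0.1):
    """annotations: list of (box, label); predictions: list of (box, label, confidence).
    Returns a list of (kind, box, given_label, predicted_label) tuples."""
    issues = []
    confident = [p for p in predictions if p[2] >= min_conf]
    for box, label in annotations:
        # Compare each annotated box with the most-overlapping confident prediction.
        best = max(confident, key=lambda p: iou(box, p[0]), default=None)
        if best is None:
            continue
        overlap = iou(box, best[0])
        if overlap >= match_iou and best[1] != label:
            issues.append(("swapped", box, label, best[1]))  # wrong class label
        elif overlap_iou <= overlap < match_iou and best[1] == label:
            issues.append(("badly_located", box, label, best[1]))  # poor localization
    for box, label, _ in confident:
        # A confident prediction overlapping no annotation suggests a missed object.
        if all(iou(box, a_box) < overlap_iou for a_box, _ in annotations):
            issues.append(("overlooked", box, None, label))
    return issues
```

A real method would score issues continuously rather than apply hard thresholds. The fixed cut-offs here only keep the three cases easy to see.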

Learn how to apply this to your own object detection data within 5 minutes via the Finding Label Errors in Object Detection Datasets quickstart tutorial. Here’s all the code needed to detect errors in an object detection dataset or score images by their labeling quality:

Image Segmentation

Cleanlab now also supports image segmentation tasks in just a few lines of code via the new semantic_segmentation module. The package can automatically detect many forms of annotator error, which are common in such datasets because they require laborious labeling of each individual pixel.
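Per-pixel labels suggest a simple way to score an image's labeling quality. For each pixel, look up the model's predicted probability for the class the annotator assigned (its self-confidence), then aggregate those values over the image. The pure-Python sketch below shows that idea with plain nested lists. It is a hypothetical illustration, not the package's actual scoring method. The 0.2 threshold for flagging pixels is also an assumption.

```python
def pixel_self_confidence(labels, pred_probs):
    """labels: H x W grid of class ids; pred_probs: H x W x K class probabilities.
    Returns an H x W grid of the predicted probability of each pixel's given label."""
    return [
        [pred_probs[r][c][labels[r][c]] for c in range(len(labels[0]))]
        for r in range(len(labels))
    ]

def image_quality_score(labels, pred_probs):
    """Mean per-pixel self-confidence; low values suggest a poorly annotated image."""
    values = [v for row in pixel_self_confidence(labels, pred_probs) for v in row]
    return sum(values) / len(values)

def suspicious_pixels(labels, pred_probs, threshold=0.2):
    """(row, col) of pixels whose given label the model finds implausible."""
    conf = pixel_self_confidence(labels, pred_probs)
    return [(r, c) for r, row in enumerate(conf) for c, v in enumerate(row) if v < threshold]
```

Averaging over every pixel dilutes a small mislabeled region in a large image. An aggregate that emphasizes the lowest-confidence pixels, such as the minimum or a low percentile, is one alternative.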

Learn how to apply this to your own segmentation data within 5 minutes via the Find Label Errors in Semantic Segmentation Datasets quickstart tutorial. Here’s all the code needed to detect errors in a segmentation dataset or score images by their labeling quality:

Proper support for Data-Centric AI in regression, image segmentation, and object detection tasks required significant research and novel algorithms developed by our scientists. We have published papers on these methods for transparency and scientific rigor. Check them out to learn the math behind these new methods and see comprehensive benchmarks showing how effective they are on real datasets. Or stay tuned for upcoming blog posts summarizing all of the research behind these new methods!

Other Major Improvements in v2.5

- Datalab Enhancements: Datalab is a general platform for detecting all sorts of common issues in real-world data, and the best place to get started for running this package on your datasets. Through the integration of CleanVision, Datalab can now also detect low-quality images (see above) such as those which are: blurry, over/under-exposed, low information, oddly-sized, etc.

- Imbalanced and Unlabeled Data: Datalab can now also flag rare classes in imbalanced classification datasets and even audit unlabeled datasets.

- Out-of-Distribution Detection: Added the GEN algorithm, which detects out-of-distribution data based on pred_probs more effectively in datasets with tons of classes.

- Speed and Efficiency: A 50x speedup has been achieved in the cleanlab.multiannotator code to enable quicker analysis of data labeled by multiple annotators. The memory requirements for methods across the package have also been greatly reduced via algorithmic tricks.
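Here is a rough idea of a GEN-style score computed from one example's pred_probs. As we understand the GEN paper, it uses a generalized entropy, the sum of p^γ(1 − p)^γ over the M largest class probabilities. Flatter, less confident distributions then receive higher uncertainty. The sketch below is our own pure-Python reading of that formula, not cleanlab's implementation. The defaults `gamma=0.1` and `top_m=100` are assumptions, and here a higher value means "more likely out-of-distribution".

```python
def gen_uncertainty(probs, gamma=0.1, top_m=100):
    """Generalized-entropy uncertainty of one softmax vector.
    Only the top_m largest probabilities contribute; higher = more uncertain."""
    top = sorted(probs, reverse=True)[:top_m]
    return sum((p ** gamma) * ((1.0 - p) ** gamma) for p in top)

confident = [0.98, 0.01, 0.01]
uncertain = [0.34, 0.33, 0.33]
print(gen_uncertainty(confident), gen_uncertainty(uncertain))
```

Restricting the sum to the top M probabilities is what keeps the score informative when there are tons of classes. Otherwise, the long tail of tiny probabilities would dominate the total.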

Many additional improvements were made in this update; check out the release notes for a complete list.

Easy mode: Cleanlab Studio

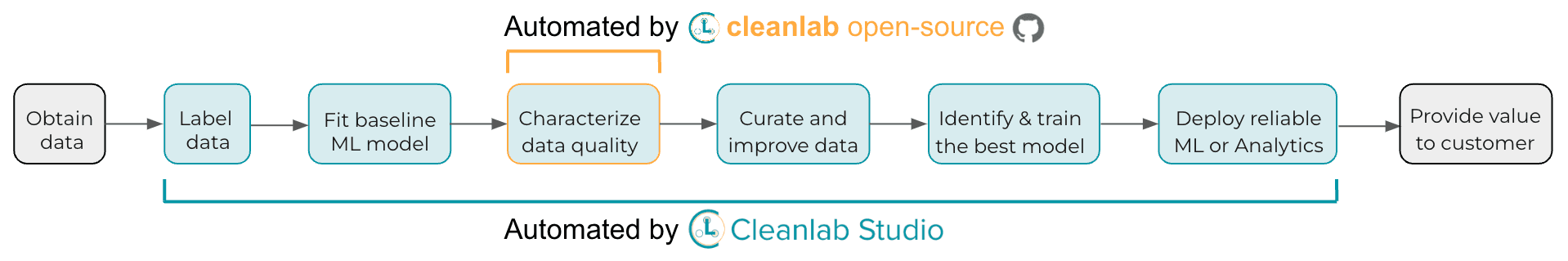

While our open-source library finds issues in your dataset via your own ML model, its utility depends on you having a proper ML model and an efficient interface to fix these data issues. Cleanlab Studio provides all of these pieces: it is a no-code platform to find and fix problems in real-world datasets. Beyond data curation & correction, Cleanlab Studio additionally automates almost all of the hard parts of turning raw data into reliable ML or analytics:

- Labeling (and re-labeling) data

- Training a baseline ML model

- Diagnosing and correcting data issues via an efficient interface

- Identifying the best ML model for your data and training it

- Deploying the model to serve predictions in a business application

All of these steps are easily completed with a few clicks in the Cleanlab Studio application for text, tabular and image datasets (depicted below). Behind the scenes is an AI system that automates all of these steps wherever it confidently can.

A Big Thank You to Contributors

Transforming cleanlab into the most popular data-centric AI package today has been no small feat. Heartfelt appreciation goes out to all contributors. Your relentless hard work and dedication are what make cleanlab shine, and they directly help the thousands of data/ML scientists using this package.

An especially big thank you to those who made their first code contributions in this release: Vedang Lad, Gordon Lim, Angela Liu, Chenhe Gu, Steven Yiran, Tata Ganesh (who also contributed to our blog).

If you think cleanlab should support another ML task, or you have other suggestions, your feedback is invaluable. We are always looking for more code contributors to help us build the future of open-source Data-Centric AI; you can easily get started via our contributing guide!

Next Steps

Quickly improve your own Data/AI with Cleanlab

- GitHub

- Cleanlab Studio (no-code, automatic platform, easy-mode)

Join our community of data-centric scientists and engineers

Resources to learn more

Let’s make AI more data-centric with Cleanlab v2.5 and beyond!