Visual data is becoming increasingly important to the success of modern businesses. But heterogeneous image collections require significant labor for content moderation and quality assurance. Such data curation is critical to a good user experience and to training Generative AI such as Large Visual Models (LVMs) or diffusion models.

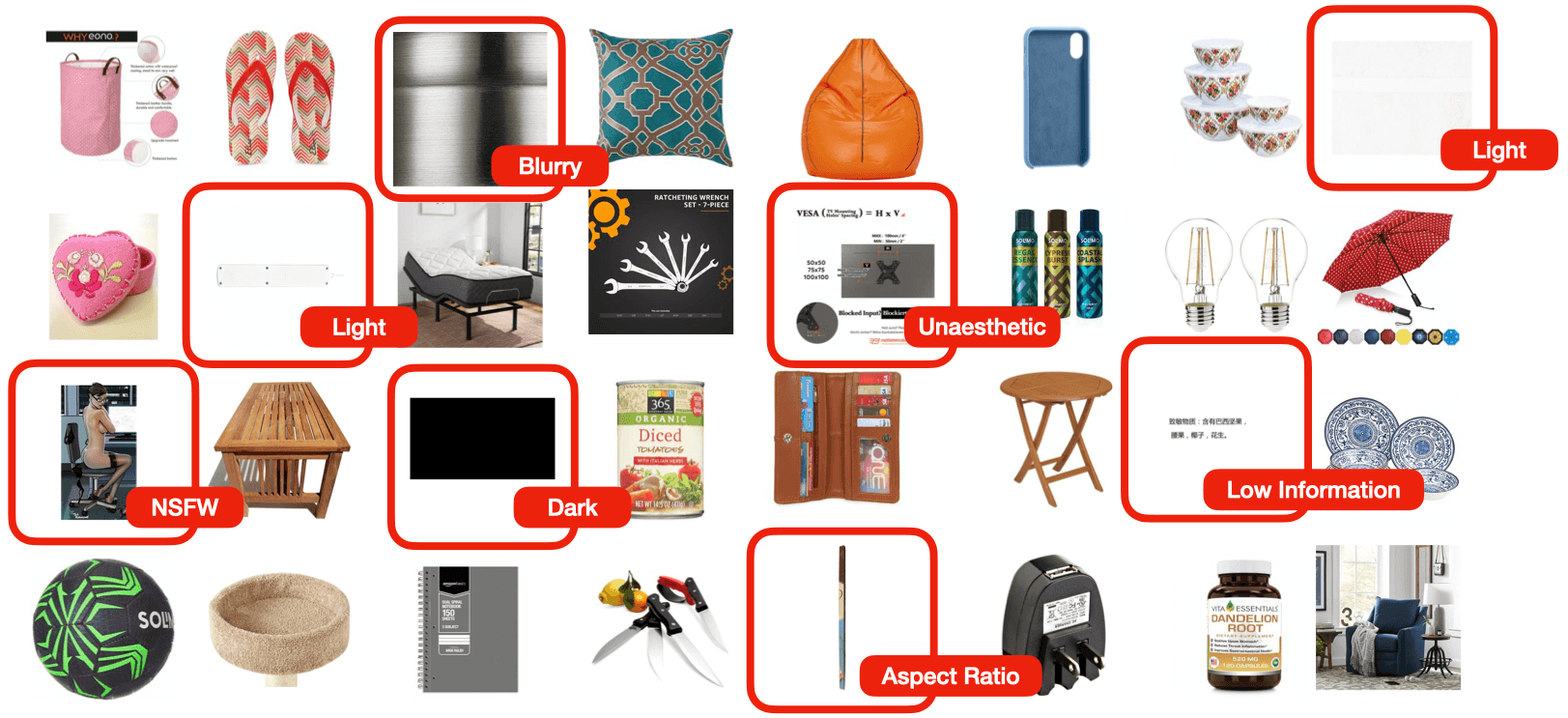

Introducing: an automated solution to detect images that are unsafe (Not-Safe-For-Work) or low-quality (over/under-exposed, blurry, oddly-sized/distorted, low-information, and otherwise unaesthetic). Below we highlight some issues detected in an e-commerce product catalog.

Cleanlab Studio is a no-code platform that uses cutting-edge AI to auto-detect the aforementioned types of problematic images lurking in any dataset. The same AI also auto-detects other issues, such as images that are (near) duplicates, outliers, or miscategorized/mistagged in a labeled dataset. Companies across many industries use this Data-Centric AI platform to improve product/content catalogs, photo galleries, social media, and training data for Machine Learning & Generative AI. This article showcases one particular application: automated quality assurance for an e-commerce product catalog.

Applying Cleanlab Studio to a Product Catalog

Images are core to the browsing experience of any product (or content) catalog, providing a key resource to guide purchase decisions. Let’s see how easy it is to catch the low-quality images lurking in a version of the Amazon Berkeley Objects dataset, which contains product images and corresponding product categories. Analyzing this dataset with Cleanlab Studio takes a couple of clicks and requires no code at all: the AI does all the hard work! The following sections outline problematic product images discovered in this e-commerce dataset (such as those pictured above). We emphasize that these were all detected automatically, without our specifying which types of problems to look for or doing any other manual configuration.

Dark Images

Dark images lack detail/clarity and are often under-exposed photos. A good e-commerce platform should not display products whose image is too dark. Cleanlab Studio automatically detected hundreds of dark images in this dataset, shown below sorted in order of severity (which Cleanlab also automatically quantifies).

Many of the images flagged in the dataset are entirely black images that don’t shed any additional light on the corresponding product beyond its given category label (LAMP, PLANTER, KEYBOARDS, …). Beyond harming the customer experience, such images are also problematic for machine learning (especially if they introduce spurious correlations in a dataset). The numeric Dark Score associated with each image quantifies the severity of this issue. You can apply a different threshold to these scores based on your specific requirements.
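The article does not say how the Dark Score is computed. As an illustration only, here is a minimal sketch of one common heuristic, assuming images arrive as H x W x 3 uint8 RGB NumPy arrays: score each image by the brightness of its brightest pixels (so an all-black image scores 0), then sort by severity and apply a threshold. The function names, the 99th-percentile choice, and the 0.2 threshold are all hypothetical.

```python
import numpy as np

# Rec. 601 luma weights for converting RGB to perceived brightness.
LUMA = np.array([0.299, 0.587, 0.114])


def dark_score(img: np.ndarray, pct: float = 99.0) -> float:
    """Hypothetical dark score in [0, 1]: brightness of the brightest pixels.

    Values near 0 mean even the brightest part of the image is dark.
    """
    luma = (img.astype(np.float64) / 255.0) @ LUMA  # shape (H, W)
    return float(np.percentile(luma, pct))


def flag_dark(images, threshold: float = 0.2):
    """Return (index, score) pairs below the threshold, most severe first."""
    scores = [dark_score(im) for im in images]
    return [(int(i), scores[i]) for i in np.argsort(scores) if scores[i] < threshold]


black = np.zeros((32, 32, 3), dtype=np.uint8)
dim = np.full((32, 32, 3), 30, dtype=np.uint8)
white = np.full((32, 32, 3), 255, dtype=np.uint8)
print(flag_dark([white, dim, black]))
```

Using a high percentile rather than the mean keeps a mostly dark photo with a few bright highlights from being flagged, since those highlights still carry visible detail.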

Light Images

Conversely, photos can be over-exposed or excessively bright/washed out. Cleanlab Studio automatically detected many overly light images in this product catalog. These light images obscure product details and have similar downsides to the dark images. Again, a Light Score is provided to indicate the severity of this issue in each image.
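A mirror-image heuristic can illustrate what a light score might measure. This is a hypothetical sketch, not Cleanlab Studio's formula, again assuming H x W x 3 uint8 RGB arrays: if even the darkest few percent of pixels are bright, the photo is probably washed out, so the score is 1 minus their brightness.

```python
import numpy as np

# Rec. 601 luma weights for converting RGB to perceived brightness.
LUMA = np.array([0.299, 0.587, 0.114])


def light_score(img: np.ndarray, pct: float = 5.0) -> float:
    """Hypothetical light score in [0, 1]; values near 0 mean washed out.

    Looks at the darkest `pct` percent of pixels: if those are already
    bright, the image has little contrast at the dark end.
    """
    luma = (img.astype(np.float64) / 255.0) @ LUMA
    return float(1.0 - np.percentile(luma, pct))


washed_out = np.full((32, 32, 3), 250, dtype=np.uint8)
normal = np.random.default_rng(0).integers(0, 256, size=(32, 32, 3)).astype(np.uint8)
print(light_score(washed_out), light_score(normal))
```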

Blurry Images

Cleanlab Studio also auto-detects another type of low-quality image: those which are blurry. A few such images were also flagged in this product catalog (shown below sorted by their reported Blurry Score). Product details are again obscured, making these images less useful to customers. Most of these blurry images belong to products from the LIGHT FIXTURE category. This could introduce spurious correlations in machine learning tasks related to product categories and images.
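A widely used way to quantify blur is the variance of the Laplacian: sharp edges produce large second-derivative responses, while blurry images produce small, uniform ones. The sketch below uses that technique for illustration; it is not necessarily what Cleanlab Studio does. It assumes a 2-D grayscale array (convert RGB first), and the `scale` constant that maps variance into [0, 1) is a hypothetical value that would need tuning per dataset.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of a 4-neighbor Laplacian; sharp edges give large values."""
    g = gray.astype(np.float64)
    lap = g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:] - 4.0 * g[1:-1, 1:-1]
    return float(lap.var())


def blurry_score(gray: np.ndarray, scale: float = 100.0) -> float:
    """Hypothetical score in [0, 1); values near 0 suggest a blurry image."""
    return float(1.0 - np.exp(-laplacian_variance(gray) / scale))


def box_blur(gray: np.ndarray) -> np.ndarray:
    """3x3 mean filter over interior pixels, used here to simulate defocus."""
    g = gray.astype(np.float64)
    h, w = g.shape
    return sum(g[i:h - 2 + i, j:w - 2 + j] for i in range(3) for j in range(3)) / 9.0


checker = (np.indices((16, 16)).sum(axis=0) % 2 * 255).astype(np.uint8)
flat = np.full((16, 16), 128, dtype=np.uint8)
print(laplacian_variance(checker), laplacian_variance(box_blur(checker)), blurry_score(flat))
```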

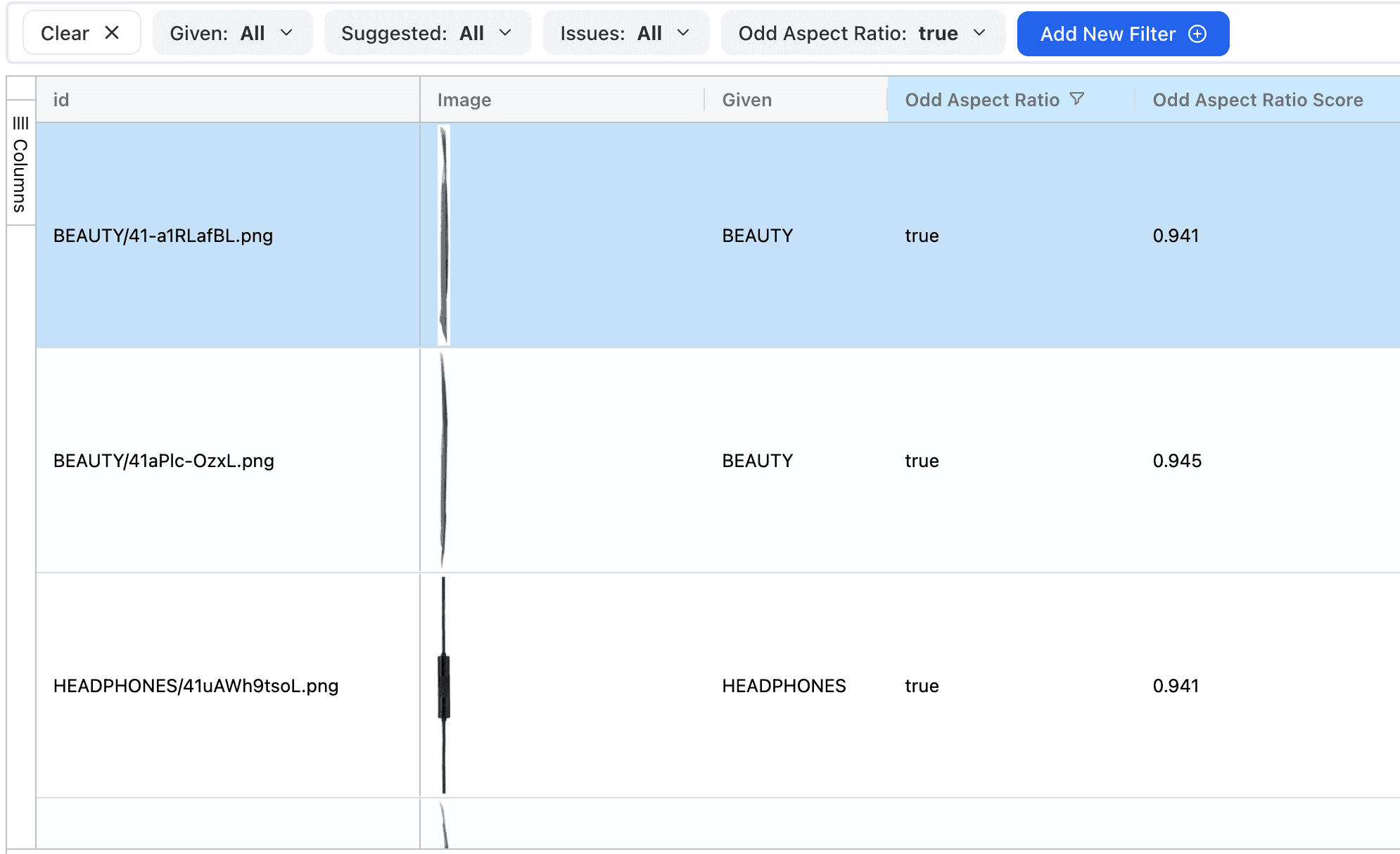

Odd Aspect Ratio Images

Images with unusual dimensions can disrupt the uniformity of product displays. Detecting and resizing these images can enhance the visual consistency of a catalog. In this dataset, Cleanlab Studio detected a few images with odd aspect ratios relative to the rest of the images. In this case, their dimensions may be attributable to the depicted object’s shape (but oddly sized images can be more problematic in other applications).
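Because "odd" is judged relative to the rest of the images, one reasonable approach is a robust outlier test on the aspect ratios themselves. The hypothetical sketch below (not Cleanlab Studio's method) takes `(width, height)` pairs, works with log(width / height) so that wide and tall images are treated symmetrically, and flags values whose modified z-score (based on the median and median absolute deviation) exceeds an assumed cutoff of 3.5.

```python
import math
import statistics


def odd_aspect_ratio_indices(sizes, z_thresh: float = 3.5):
    """Return indices of images whose aspect ratio is unusual for the dataset.

    `sizes` is a list of (width, height) pairs. The median and MAD are used
    instead of mean and standard deviation so a few extreme images don't
    shift the baseline they are compared against.
    """
    logs = [math.log(w / h) for w, h in sizes]
    med = statistics.median(logs)
    mad = statistics.median([abs(x - med) for x in logs])
    if mad == 0:
        mad = 1e-9  # all ratios equal the median: any deviation counts as odd
    # 0.6745 rescales the MAD to be comparable to a standard deviation.
    return [i for i, x in enumerate(logs) if abs(0.6745 * (x - med) / mad) > z_thresh]


sizes = [(100, 100), (120, 100), (100, 120), (110, 100),
         (100, 110), (130, 100), (100, 130), (1000, 100)]
print(odd_aspect_ratio_indices(sizes))  # → [7]
```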

Low Information Images

Cleanlab Studio also auto-detects low-information images that lack content. Stated mathematically, their pixel values have low entropy. As seen below, most of the images flagged in this product catalog are uninformative and unengaging.
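The entropy statement can be made concrete. For an 8-bit grayscale image with intensity histogram probabilities p_v, the Shannon entropy is the sum over v of p_v log2(1/p_v), which ranges from 0 bits (a single flat color) to 8 bits (all 256 intensities equally frequent). The sketch below computes it with NumPy; the normalized `low_information_score` is a hypothetical name and scaling, not Cleanlab Studio's exact metric.

```python
import numpy as np


def pixel_entropy(gray: np.ndarray) -> float:
    """Shannon entropy (bits) of an 8-bit intensity histogram; maximum is 8."""
    counts = np.bincount(gray.ravel(), minlength=256)
    p = counts[counts > 0] / counts.sum()  # drop empty bins to avoid log(0)
    return float(np.sum(p * np.log2(1.0 / p)))


def low_information_score(gray: np.ndarray) -> float:
    """Hypothetical score in [0, 1]; values near 0 mean little information."""
    return pixel_entropy(gray) / 8.0


flat = np.full((16, 16), 200, dtype=np.uint8)            # one color only
ramp = np.arange(256, dtype=np.uint8).reshape(16, 16)    # every intensity once
print(pixel_entropy(flat), pixel_entropy(ramp))
```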

Grayscale Images

In this dataset, Cleanlab Studio automatically flagged many grayscale images. Whether this lack of color is problematic in a visual dataset will depend on the application. But it’s certainly a concerning source of spurious correlations in many computer vision tasks.
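Detecting grayscale images stored as RGB is straightforward in principle: a pixel is gray when its red, green, and blue values are (nearly) equal. This minimal sketch assumes uint8 arrays; the small tolerance `tol` is a hypothetical allowance for compression noise.

```python
import numpy as np


def is_grayscale(img: np.ndarray, tol: int = 2) -> bool:
    """True if, at every pixel, the R, G and B values differ by at most `tol`."""
    if img.ndim == 2:  # single-channel images are grayscale by construction
        return True
    rgb = img[..., :3]  # ignore an alpha channel if present
    # Per pixel max >= min, so this uint8 subtraction cannot wrap around.
    spread = rgb.max(axis=-1) - rgb.min(axis=-1)
    return bool((spread <= tol).all())


g = np.arange(64, dtype=np.uint8).reshape(8, 8)
gray_rgb = np.stack([g, g, g], axis=-1)
red = np.zeros((8, 8, 3), dtype=np.uint8)
red[..., 0] = 255
print(is_grayscale(gray_rgb), is_grayscale(red))
```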

Aesthetic Images

Images that are visually appealing tend to drive higher user engagement. Such aesthetic images might be artistic, beautiful photographs, or contain otherwise interesting/vibrant content. Cleanlab Studio computes an Aesthetic Score for each image to estimate its subjective appeal to most humans. Higher scores indicate more visually appealing images (no default threshold is provided since this is inherently subjective). When training diffusion and other Generative AI models, results often improve when only the most aesthetic images are retained in the training dataset.

Here are the images in this product catalog that Cleanlab Studio identified as most aesthetic:

Here are the images that Cleanlab Studio identified as least aesthetic in this dataset:

All of the least aesthetic images correspond to products from the SHOES category, whose images seem to have gotten distorted. Customers will not be delighted by a product catalog that looks like this.

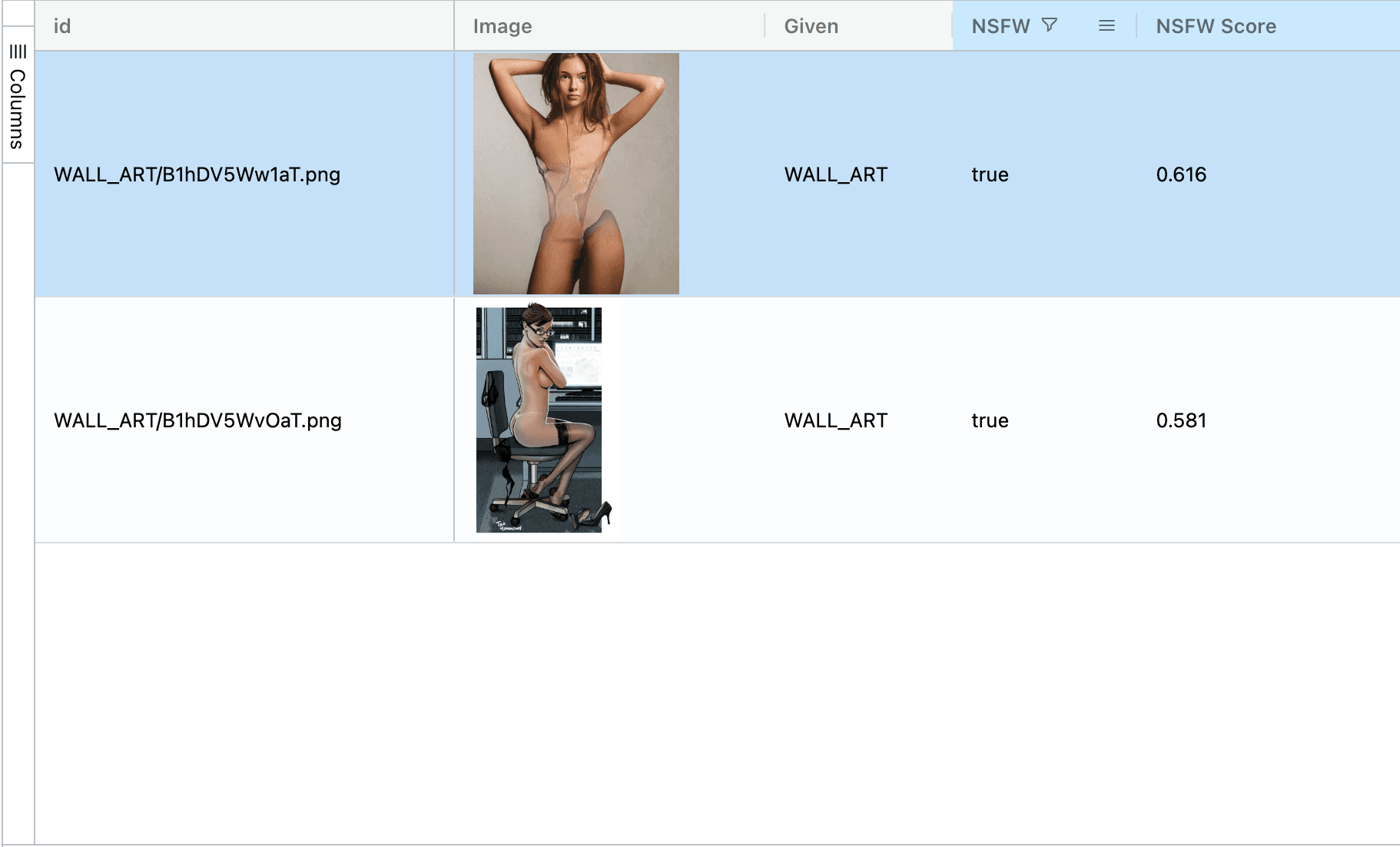

NSFW Images

In e-commerce platforms with third-party sellers and other platforms with user-uploaded images, content moderation is critical to ensure the safety of your community. Luckily, this dataset has already been mostly vetted. Cleanlab Studio auto-detected only a couple of Not Safe For Work (NSFW) images in this product catalog, corresponding to products in the WALL ART category. As with the other issues Cleanlab can flag, a numeric NSFW Score is provided to quantify the severity of the issue, which you can threshold based on your requirements. NSFW images contain visual content not suitable for viewing in a professional or public environment due to its explicit or graphic nature (nudity, gore, …).

The analyses presented thus far have related to individual images. Cleanlab Studio also detects dataset issues where the AI must relate multiple pieces of information. We showcase these types of issues next.

Near Duplicate Images

Cleanlab Studio automatically detected nearly (and exactly) duplicated images present in this product catalog. A good product catalog should be devoid of duplicate SKUs as well as SKUs that are hard to distinguish. You can tell if an image is exactly vs. nearly duplicated based on its Duplicate Score, which will equal 1.0 if exact replicas exist in the dataset.
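To illustrate how near duplicates can be scored, the sketch below swaps in average hashing (aHash), a simple perceptual hash: downsample a grayscale image to an 8 x 8 grid of block means, set each bit by whether the block is brighter than the overall mean, and compare hashes by the fraction of matching bits. This is not Cleanlab Studio's method. The `duplicate_score` function is hypothetical, and its convention of reserving 1.0 for exact pixel-level copies is modeled on the description above.

```python
import numpy as np


def average_hash(gray: np.ndarray, size: int = 8) -> np.ndarray:
    """64-bit average hash: block means over a size x size grid vs. their mean."""
    h, w = gray.shape
    g = gray[: h - h % size, : w - w % size].astype(np.float64)  # crop to a multiple
    blocks = g.reshape(size, g.shape[0] // size, size, g.shape[1] // size).mean(axis=(1, 3))
    return (blocks > blocks.mean()).ravel()


def duplicate_score(a: np.ndarray, b: np.ndarray) -> float:
    """Hypothetical score: 1.0 for exact copies, else hash similarity capped below 1."""
    if a.shape == b.shape and np.array_equal(a, b):
        return 1.0
    ha, hb = average_hash(a), average_hash(b)
    similarity = 1.0 - np.count_nonzero(ha != hb) / ha.size
    return min(float(similarity), 0.999)


base = np.tile((np.arange(64) * 4).astype(np.uint8), (64, 1))  # horizontal gradient
brighter = (base.astype(np.int16) + 2).astype(np.uint8)        # near duplicate
print(duplicate_score(base, base.copy()), duplicate_score(base, brighter),
      duplicate_score(base, base.T))
```

Comparing every pair is quadratic in dataset size; at catalog scale one would typically group exact copies by a hash of the raw bytes and index perceptual hashes for fast nearest-neighbor lookup.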

Miscategorized Images

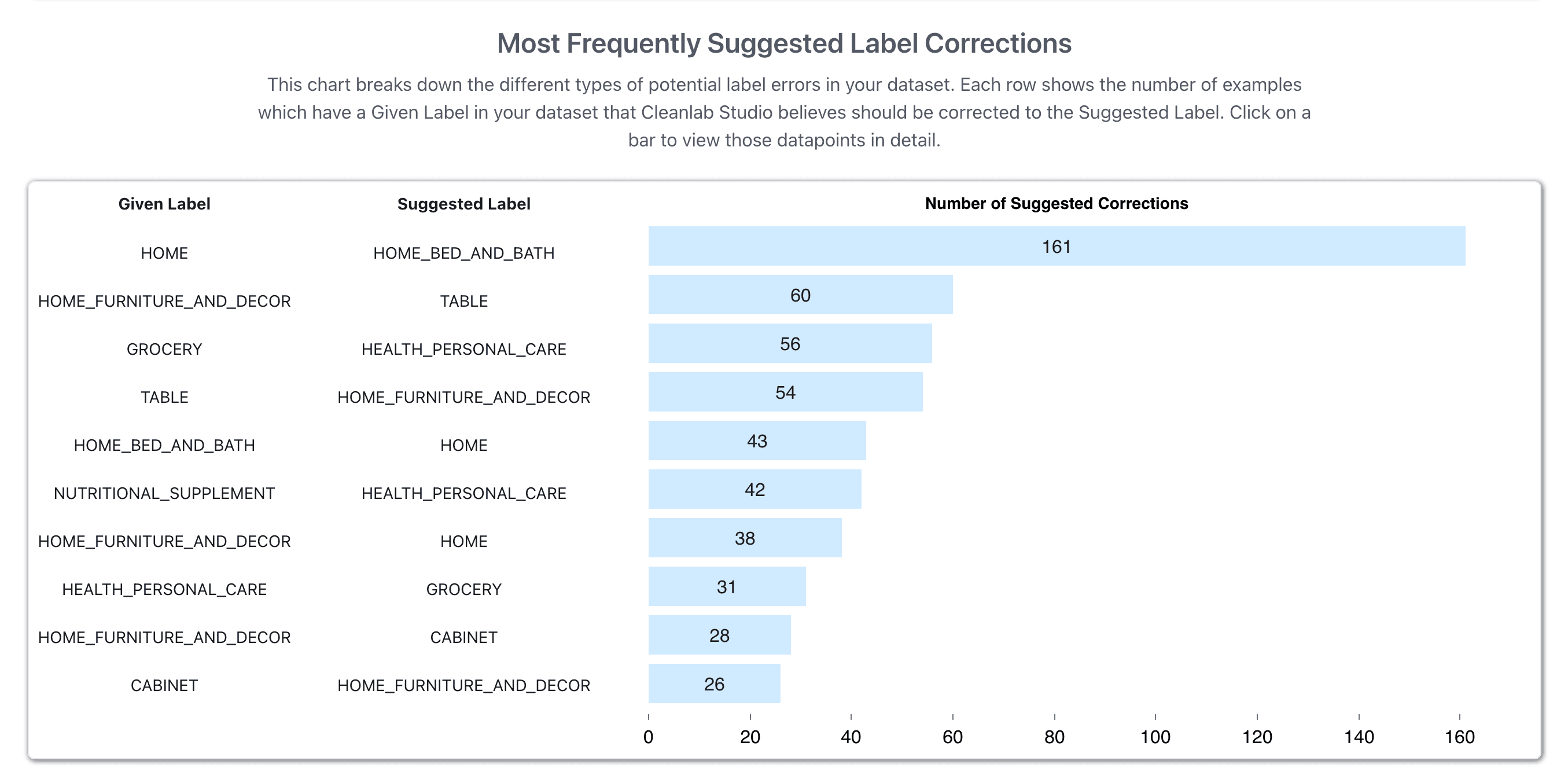

Products assigned to the wrong category, or whose image does not reflect their category and product type, degrade the search experience and recommendations. Cleanlab Studio further automates the detection of miscategorized (i.e., mislabeled) products in the catalog. The AI system detects such label issues by predicting each product’s category from its image and applying Confident Learning. Below we see that many of the product images whose Given Label Cleanlab Studio estimates to be incorrect stem from overlapping categories (e.g., VEGETABLE vs. GROCERY). This analysis can reveal shortcomings in a product catalog’s ontology and category hierarchy.
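The core idea of Confident Learning can be sketched in a few lines. This is a simplified, from-scratch illustration, not Cleanlab Studio's implementation: it assumes you already have out-of-sample predicted class probabilities (for example, from cross-validation of an image classifier) and that every class appears at least once among the given labels. Each class gets a threshold equal to the model's average confidence in that class among examples labeled with it; an example is flagged when the most probable class that clears its own threshold differs from the given label.

```python
import numpy as np


def find_label_issues(labels, pred_probs):
    """Simplified Confident Learning sketch; returns indices of suspect labels.

    labels: (n,) integer given labels.
    pred_probs: (n, k) out-of-sample predicted probabilities.
    """
    labels = np.asarray(labels)
    pred_probs = np.asarray(pred_probs, dtype=np.float64)
    n, k = pred_probs.shape
    # Per-class threshold: average self-confidence of examples given that label.
    thresholds = np.array([pred_probs[labels == j, j].mean() for j in range(k)])
    issues = []
    for i in range(n):
        confident = np.flatnonzero(pred_probs[i] >= thresholds)
        if confident.size == 0:
            continue  # no class is confidently predicted; leave unflagged
        # Among classes above their threshold, take the most probable one.
        likely = confident[np.argmax(pred_probs[i, confident])]
        if likely != labels[i]:
            issues.append(i)
    return issues


labels = [0, 0, 0, 1, 1, 1]
pred_probs = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9],
              [0.2, 0.8], [0.1, 0.9], [0.3, 0.7]]
print(find_label_issues(labels, pred_probs))  # → [2]
```

Per-class thresholds matter because a classifier is often systematically less confident in some categories (such as overlapping ones) than in others; a single global cutoff would over-flag those classes.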

For a dataset-level summary of labeling errors, Cleanlab Studio provides Analytics, which help you understand which types of labeling issues are most common overall. For this product catalog, the image below shows that these mostly involve overlapping product categories. For instance, 161 images in this dataset belong to the product category HOME, but Cleanlab Studio suggests they should belong to another existing category: HOME_BED_AND_BATH. Maybe having both of these categories isn’t a great idea?
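A summary like this boils down to counting (given label, suggested label) pairs among the flagged examples. The sketch below shows the idea with the standard library; the record format with `"given"` and `"suggested"` keys is hypothetical, and the three records are toy data, not the article's results.

```python
from collections import Counter


def summarize_label_issues(issues):
    """Count (given label, suggested label) pairs, most frequent first.

    `issues` is a hypothetical list of dicts with "given" and "suggested" keys.
    """
    pairs = Counter((row["given"], row["suggested"]) for row in issues)
    return pairs.most_common()


flagged = [
    {"given": "HOME", "suggested": "HOME_BED_AND_BATH"},
    {"given": "HOME", "suggested": "HOME_BED_AND_BATH"},
    {"given": "VEGETABLE", "suggested": "GROCERY"},
]
for (given, suggested), n in summarize_label_issues(flagged):
    print(f"{n:4d}  {given} -> {suggested}")
```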

The following video demonstrates how to review miscategorized products within a specific category, by using a Filter. Here we focus on the CAMERA_TRIPOD category, revealing errors such as memory cards mistakenly categorized as tripods.

Outliers

Cleanlab Studio also detects outliers (a.k.a. anomalies) within any dataset. Below, we see that the outliers detected in this product catalog tend to be text-heavy graphics that don’t resemble product photos. We can focus on outliers within a specific product category like CAMERA_TRIPOD by again using a Filter. Outliers typically correspond to images that either don’t neatly fit into any of the existing categories or are very different from the rest of the dataset.
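One common way to score outliers is the average distance from each image to its k nearest neighbors in an embedding space: images far from everything else get high scores. The sketch below uses that technique for illustration only. It assumes each image has already been converted to a feature vector by some pretrained vision model (here the embeddings are random toy vectors), and it needs more than k images. Restricting the computation to one category, as with the Filter described above, just means passing in only that category's embeddings.

```python
import numpy as np


def knn_outlier_scores(embeddings, k: int = 3) -> np.ndarray:
    """Mean Euclidean distance to the k nearest other points; higher = more outlying."""
    X = np.asarray(embeddings, dtype=np.float64)
    diffs = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diffs ** 2).sum(axis=-1))  # (n, n) pairwise distances
    np.fill_diagonal(dist, np.inf)             # ignore each point's distance to itself
    nearest = np.sort(dist, axis=1)[:, :k]
    return nearest.mean(axis=1)


rng = np.random.default_rng(0)
product_photos = rng.normal(0.0, 0.1, size=(20, 8))  # tight cluster of toy embeddings
text_graphic = np.full((1, 8), 3.0)                  # far from the cluster
scores = knn_outlier_scores(np.vstack([product_photos, text_graphic]))
print(int(np.argmax(scores)))
```

The dense pairwise matrix uses O(n²) memory, which is fine for a sketch; larger catalogs would use an approximate nearest-neighbor index instead.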

Conclusion

Most businesses understand the importance of image quality in product/content catalogs. But because problems are so hard to catch, content that is inappropriate, incorrect, or low-quality inevitably creeps in. Cleanlab Studio provides automated quality assurance for your visual data. Now you can easily detect images that are not safe for work, unaesthetic, light/dark/blurry, low-information, oddly sized, outliers, near duplicates, miscategorized/mistagged, or exhibiting many other types of common issues. Detecting all of the problems showcased in this article took only a few minutes of our time to load the dataset and click around; zero manual configuration or coding was required!

After detecting problematic content with Cleanlab Studio, e-commerce platforms with third-party sellers and other platforms with user-uploaded images can alert the originator of these issues. By swiftly resolving such alerts, your platform can elevate customer engagement, satisfaction, and conversion rates. This strategic data-centric AI investment will give you a competitive edge in today’s online retail landscape.